LWN.net Weekly Edition for May 5, 2016

In search of a home for Thunderbird

After nearly a decade of trying, Mozilla is finally making the move of formally spinning off ownership of the Thunderbird email client to a third party. The identity of the new owner is still up for debate; Simon Phipps prepared a report [PDF] analyzing several possible options. But Mozilla does seem intent on divesting itself of the project for real this time. Whoever does take over Thunderbird development, though, will likely face a considerable technical challenge, since much of the application is built on frameworks and components that Mozilla will soon stop developing.

Bird versus fox

To say that Mozilla has had a difficult relationship with Thunderbird would be putting things mildly. The first release was in 2003, with version 1.0 following in late 2004. As soon as 2007, though, Mozilla's Mitchell Baker announced that Mozilla wished to rid itself of Thunderbird and find a new home for the project. Instead, Mozilla ended up separating Thunderbird off into a distinct unit (Mozilla Messaging) under the Mozilla Foundation umbrella. It then reabsorbed that unit in 2011, with Baker noting:

But, in July 2012, Mozilla began pulling paid developers from Thunderbird and left its development primarily in the hands of community volunteers, with a few Mozilla employees performing QA and build duties to support the Extended Support Release (ESR) program. At the time, Baker offered this justification:

By 2014, Mozilla had ramped down its involvement to the point where the Thunderbird team lacked any clear leadership, so the developer community voted to establish a Thunderbird Council made up of volunteers.

Most recently, Baker announced in December 2015 that Thunderbird would be formally separated from Mozilla. Phipps was engaged to research the options that he later published in the aforementioned report. In April 2016, Gervase Markham announced that the search for a new home for the project was underway, with Phipps's recommendations serving as a guide.

Lizard tech

For fans of Thunderbird, the repeated back-and-forth from Mozilla leadership can be a source of frustration on its own, but it probably does not help that Mozilla has started multiple other non-browser projects (such as ChatZilla, Raindrop, Grendel, and Firefox Hello) over the years while insisting that Thunderbird was a distraction from Firefox. Although it might seem like Mozilla management displays an inconsistent attitude toward messaging and other non-web application projects, each call for Mozilla to rid itself of Thunderbird has also highlighted the difficulty of maintaining Thunderbird and Firefox in the same engineering and release infrastructure.

In recent years, due in no small part to pressure coming from the rapid release schedule of Google's Chrome, the Firefox development process has shifted considerably. There are new stable releases made approximately every six weeks, and development builds are provided for the next two releases in separate release channels.

In addition, the Firefox codebase itself is changing. The XUL and XPCOM frameworks are on their way out, to be replaced with components and add-ons written in JavaScript. The Gecko rendering engine is also marked for replacement by Servo, and the entire Firefox architecture may be replaced with the multi-process Electrolysis model.

While these changes are exciting news for Firefox, none of them have made their way into Thunderbird. In April, Mozilla's Mark Surman highlighted the divergence issue in a blog post, noting:

Surman also pointed to a new job listing posted by Mozilla for a contractor who would oversee the transition. The posting describes two key responsibilities: to list all significant technical issues facing Thunderbird (including impact assessments) and to compile an outline of the options available to address those issues to move Thunderbird forward.

Former Mozilla developer Daniel Glazman responded to Surman's post on his own blog, with a more blunt assessment of the technical challenges facing Thunderbird developers. He pointed to the job posting's mention of XUL and XPCOM deprecation and said:

- rewrite the whole UI and the whole JS layer with it

- most probably rewrite the whole SMTP/MIME/POP/IMAP/LDAP/... layer

- most probably have a new Add-on layer or, far worse, no more Add-ons

Glazman concluded that it is too soon to select a new host for the Thunderbird project, given that a decision has yet to be made about how to rewrite the application. Furthermore, he pointed out, Mozilla has not yet begun the transition away from XUL and XPCOM in the Firefox codebase. Only when that process starts, he said, will it be possible to assess the complexity of such a move for Thunderbird.

As far as the build infrastructure goes, Markham sent a proposal to the Thunderbird Council in March suggesting a path forward for separating Thunderbird from the Firefox engineering infrastructure. It did not spawn much discussion, but there did not seem to be any objection either.

Out of the nest

For now, Mozilla seems set on finding a new fiscal and organizational sponsor for Thunderbird, with The Document Foundation and the Software Freedom Conservancy (both highlighted in Phipps's report) currently the leading candidates. But the discussion has only just begun on the technical aspects of maintaining and evolving Thunderbird as a standalone application.

Surman contended that the needs of Firefox and Thunderbird are simply too different today for them to be tied to the same codebase and release process. Essentially, the web changes rapidly, while email changes slowly. It is hard to argue with that assertion (setting aside discussions of how email should change), but Thunderbird fans might contend that Mozilla not contributing developer time to the Thunderbird codebase only exacerbates any inherent difference between the browser and email client.

Whether one thinks Mozilla has not adequately supported Thunderbird over the years or has done its level best, the Thunderbird and Firefox projects today are moving in different directions. Given their shared history, it may seem sad to watch them part ways, but perhaps the Thunderbird community can make the most of the opportunity and drive the application forward where Mozilla could (or would) not.

Caravel data visualization

One aspect of the heavily hyped Internet of Things (IoT) that can easily get overlooked is that each of the Things one hooks up to the Internet invariably spews out a near non-stop stream of data. While commercial IoT users—such as utility companies—generally have a well-established grasp of what data interests them and how to process it, the DIY crowd is better served by flexible tools that make exploring and transforming data easy. Airbnb maintains an open-source Python utility called Caravel that provides such tools. There are many alternatives, of course, but Caravel does a good job at ingesting data and smoothly molding it into nice-looking interactive graphs—with a few exceptions.

My own interest in data-visualization tools stems from IoT projects

(namely home-automation and automotive sensors), but Caravel itself is

in no way limited to such uses. Like most contemporary web-based

service providers, Airbnb

![Caravel dashboard [A Caravel dashboard]](https://static.lwn.net/images/2016/05-caravel-dashboard-sm.png) collects a lot of data about its users and their transactions (in this

case, short-term

housing rentals, renters, and property owners). The company also prides itself on

having a slick-looking web interface, and Caravel reflects that: it

sports modern charts and graphs—no crusty old PNGs with jagged

lines generated by Graphviz here; everything is done in JavaScript.

collects a lot of data about its users and their transactions (in this

case, short-term

housing rentals, renters, and property owners). The company also prides itself on

having a slick-looking web interface, and Caravel reflects that: it

sports modern charts and graphs—no crusty old PNGs with jagged

lines generated by Graphviz here; everything is done in JavaScript.

In a nutshell, what Caravel provides is a connection layer supporting a variety of database types, the tools to configure the metrics of interest for any tables one wishes to explore, and an interactive utility for creating data visualizations. Several dozen visualization options are built in, and all of the charts the user creates can be saved and put into convenient "dashboards" for regular usage.

On top of all that, Caravel's interface is web-based and is almost entirely point-and-click. Perhaps the closest parallel would be to a tool like Orange, where the goal is to mask over the complexities of SQL and statistics. Caravel does not quite walk the user through adding new data sources or defining metrics, but it does take care of as many of the repetitive steps as it can.

For example, when you add a database table to your Caravel

![Tables in Caravel [Tables in Caravel]](https://static.lwn.net/images/2016/05-caravel-tables-sm.png) work space, there are rows of checkboxes by every field. If you want

to track the minima, maxima, or sums for certain fields, you check

them at load time, and those metrics are automatically available on

the relevant pages of the application from then on. Similar

checkboxes are available for selecting which fields should be used as

categorical groups and which should be available for filtering the

data set.

work space, there are rows of checkboxes by every field. If you want

to track the minima, maxima, or sums for certain fields, you check

them at load time, and those metrics are automatically available on

the relevant pages of the application from then on. Similar

checkboxes are available for selecting which fields should be used as

categorical groups and which should be available for filtering the

data set.

The first public release of Caravel was in September 2015. The most recent is version 0.8.9, from April 2016. The code is hosted at GitHub and packages are also available on the Python Package Index (PyPI). For the moment, only 2.7 is supported. On Linux, installation also requires the development packages for libssl and libffi. When Caravel is installed, one only needs to initialize the database and create an administrator account to get started.

A Caravel instance is multi-user, and the system supports an array

of permissions and access controls. For testing, though, that is not

necessary. Out of the box, the system provides a local web UI and

comes pre-loaded with a demo data set. SQLite support is built in,

and any other database (local or remote) with SQLAlchemy support can be used

![Databases in Caravel [Databases in Caravel]](https://static.lwn.net/images/2016/05-caravel-db-sm.png) as well. Druid database clusters are also supported, and users

can define a custom schema for any database that requires one. For

those working with large data sets, the good news is that Caravel also

supports a number of open-source caching layers, although none of them

are required. All of these configuration options are presented in the web UI's

"add a database" screen.

as well. Druid database clusters are also supported, and users

can define a custom schema for any database that requires one. For

those working with large data sets, the good news is that Caravel also

supports a number of open-source caching layers, although none of them

are required. All of these configuration options are presented in the web UI's

"add a database" screen.

The birds-eye view of Caravel usage is that the user adds a new database, then selects and adds each table of interest. From then on, working with Caravel is a matter of using the visualization builder to hone in on a chart or graph that presents some meaningful information. The visualizations include everything from line charts to bubble graphs, box plots to directed graphs, and heatmaps to Sankey diagrams. There are also less scientific options, such as word clouds.

A visualization can be saved as a

"slice," and any number of slices can be collected onto the same page

as a "dashboard." Dashboards are updated regularly as the database is

refreshed, so they can be deployed for internal or public

consumption. Finally, although dashboard graphics are interactive

JavaScript (with additional information shown where the mouse hovers),

![Building visualizations in Caravel [Building visualizations in Caravel]](https://static.lwn.net/images/2016/05-caravel-visualization-sm.png) all charts and graphs can also be exported as image files.

all charts and graphs can also be exported as image files.

This set of features is fairly complete, but one might well ask whether the implementation is up to snuff. For the most part, the answer to that question is yes.

Adding new databases and choosing which tables to use borders on trivial, thanks to the well-optimized add-and-edit pages. There are a few caveats, such as the fact that the user cannot simply add all of the tables of interest from a database at once—each table requires a separate round trip through the "add a table" page. And when Caravel does not like something about a table, it is hard to debug.

For example, Caravel includes special treatment for time-series data; the user can mark any field in a table as being of the datetime type and it will be automatically plugged into various time-series charts in the visualization tool. But Caravel could not make sense out of the timestamps in one example data set I downloaded from datahub.io, and there is no easy way to inspect the data directly, nor does there seem to be any way in the UI to transform the timestamps into an acceptable datetime format. Nor even to see what Caravel thinks is wrong with them.

Clearly, this issue falls under a "you must know your data" warning, which is a fair expectation. But the error reporting that Caravel presents yanks the user right out of the UI, displaying a generic, low-level exception warning and a traceback from the Python interpreter.

And this sometimes happens through no fault of the user, like when

the user selects a new graph type from the drop-down menu in the

![Exceptions in Caravel [Exceptions in Caravel]](https://static.lwn.net/images/2016/05-caravel-exception-sm.png) visualization builder and the

newly-selected graph takes a different number of parameters. By and

large, the visualization tool is quite handy—the point-and-click

settings and controls are not merely a coat of "UI paint" on the top;

they help the user play around with their data sets to find the

visualization settings that work best. Thus, it is more disappointing

when that friendly interactivity breaks down.

visualization builder and the

newly-selected graph takes a different number of parameters. By and

large, the visualization tool is quite handy—the point-and-click

settings and controls are not merely a coat of "UI paint" on the top;

they help the user play around with their data sets to find the

visualization settings that work best. Thus, it is more disappointing

when that friendly interactivity breaks down.

There are a couple of troubling technical limitations to mention. First, users must construct any new metrics of interest (other than sample counts, sums, and minima/maxima) by entering raw SQL expressions. Some additional statistical tools would be handy. Perhaps more fundamental is the fact that Caravel cannot join or query multiple tables; all of the visualizations are therefore limited to what information one can extract from a single table.

It might be interesting to pair Caravel with a tool like OpenRefine that specializes in data transformation, but I suspect that for a great many users, what Caravel can do already will serve them well. It handles the database connectivity in the background, putting the emphasis on exploring and manipulating visualizations. The visualizations and dashboards it provides are top-notch by modern standards, but the fact that they are easy for the user to create is Caravel's real advantage.

Security

Replacing /dev/urandom

The kernel's random-number generator (RNG) has seen a great deal of attention over the years; that is appropriate, given that its proper functioning is vital to the security of the system as a whole. During that time, it has acquitted itself well. That said, there are some concerns about the RNG going forward that have led to various patches aimed at improving both randomness and performance. Now there are two patch sets that significantly change the RNG's operation to consider.The first of these comes from Stephan Müller, who has two independent sets of concerns that he is trying to address:

- The randomness (entropy) in the RNG, in the end, comes from sources of

physical entropy in the outside world. In practice, that means the

timing of disk-drive operations, human-input events, and interrupts in

general. But the solid-state drives deployed in current systems are

far more predictable than rotating drives, many systems are deployed

in settings where there are no human-input events at all, and, in any

case, the entropy gained from those events duplicates the entropy from

interrupts in general. The end result, Stephan fears, is that the

current RNG is unable to pick up enough entropy to be truly random,

especially early in the bootstrap process.

- The RNG has shown some scalability problems on large NUMA systems, especially when faced with workloads that consume large amounts of random data from the kernel. There have been various attempts to improve RNG scalability over the last year, but none have been merged to this point.

Stephan tries to address both problems by throwing out much of the current RNG and replacing it with "a new approach"; see this page for a highly detailed explanation of the goals and implementation of this patch set. It starts by trying to increase the amount of useful entropy that can be obtained from the environment, and from interrupt timing in particular. The current RNG assumes that the timing of a specific interrupt carries little entropy — less than one bit. Stephan's patch, instead, accounts a full bit of entropy from each interrupt. Thus, in a sense, this is an accounting change: there is no more entropy flowing into the system than before, but it is being recognized at a higher rate, allowing early-boot users of random data to proceed.

Other sources of entropy are used as well when they are available; these include a hardware RNG attached to the system or built into the CPU itself (though little entropy is credited for the latter source). Earlier versions of the patch used the CPU jitter RNG (also implemented by Stephan) as another source of entropy, but that was removed at the request of RNG maintainer Ted Ts'o, who is not convinced that differences in execution time are a trustworthy source of entropy.

The hope is that interrupt timings, when added to whatever other sources of entropy are available, will be sufficient to quickly fill the entropy pool and allow the generation of truly random numbers. As with current systems, data read from /dev/random will remove entropy directly from that pool and will not complete until sufficient entropy accumulates there to satisfy the request. The actual random numbers are generated by running data from the entropy pool through the SP800-90A deterministic random bit generator (DRBG).

For /dev/urandom, another SP800-90A DRBG is fed from the primary DRBG described above and used to generate pseudo-random data. Every so often (ten minutes at the outset), this secondary generator is reseeded from the primary. On NUMA systems, there is one secondary generator for each node, keeping the random-data generation node-local and increasing scalability.

There has been a certain amount of discussion of Stephan's proposal, which is now in its third iteration, but Ted has said little beyond questioning the use of the CPU jitter technique. Or, at least, that was true until May 2, when he posted a new RNG of his own. Ted's work takes some clear inspiration from Stephan's patches (and from Andi Kleen's scalability work from last year) but it is, nonetheless, a different approach.

Ted's patch, too, gets rid of the separate entropy pool for /dev/urandom; this time, though, it is replaced by the ChaCha20 stream cipher seeded from the random pool. ChaCha20 is deemed to be secure and, it is thought, will perform better than SP800-9A. There is one ChaCha20 instance for each NUMA node, again, hopefully, helping to improve the scalability of the RNG (though Ted makes it clear that he sees this effort as being beyond the call of duty). There is no longer any attempt to track the amount of entropy stored in the (no-longer-existing) /dev/urandom pool, but each ChaCha20 instance is reseeded every five minutes.

When the system is booting, the new RNG will credit each interrupt's timing data with one bit of entropy, as does Stephan's RNG. Once the RNG is initialized with sufficient entropy, though, the RNG switches to the current system, which accounts far less entropy for each interrupt. This policy reflects Ted's unease with assuming that there is much entropy in interrupt timings; the timing of interrupts might be more predictable than one might think, especially on virtualized systems with no direct connection to real hardware.

Stephan's response to this posting has been

gracious: "In general, I have no concerns with this approach

either. And thank you that some of my concerns are addressed.

"

That, along with the fact that Ted is the ultimate decision-maker in this

case, suggests that his patch set is the one that is more likely to make it

into the mainline; it probably will not come down to flipping a coin. It

would be most surprising to see that merging happen for 4.7

— something as sensitive as the RNG needs some review and testing time —

but it could happen not too long thereafter.

Brief items

Security quotes of the week

Linux Kernel BPF JIT Spraying (grsecurity forums)

Over at the grsecurity forums, Brad Spengler writes about a recently released proof of concept attack on the kernel using JIT spraying. "What happened next was the hardening of the BPF interpreter in grsecurity to prevent such future abuse: the previously-abused arbitrary read/write from the interpreter was now restricted only to the interpreter buffer itself, and the previous warn on invalid BPF instructions was turned into a BUG() to terminate execution of the exploit. I also then developed GRKERNSEC_KSTACKOVERFLOW which killed off the stack overflow class of vulns on x64. A short time later, there was work being done upstream to extend the use of BPF in the kernel. This new version was called eBPF and it came with a vastly expanded JIT. I immediately saw problems with this new version and noticed that it would be much more difficult to protect -- verification was being done against a writable buffer and then translated into another writable buffer in the extended BPF language. This new language allowed not just arbitrary read and write, but arbitrary function calling." The protections in the grsecurity kernel will thus prevent this attack. In addition, the newly released RAP feature for grsecurity, which targets the elimination of return-oriented programming (ROP) vulnerabilities in the kernel, will also ensure that "

the fear of JIT spraying goes away completely", he said.

May Android security bulletin

The Android security bulletin for May is available. It lists 40 different CVE numbers addressed by the May over-the-air update; the bulk of those are at a severity level of "high" or above. "Partners were notified about the issues described in the bulletin on April 04, 2016 or earlier. Source code patches for these issues will be released to the Android Open Source Project (AOSP) repository over the next 48 hours. We will revise this bulletin with the AOSP links when they are available. The most severe of these issues is a Critical security vulnerability that could enable remote code execution on an affected device through multiple methods such as email, web browsing, and MMS when processing media files."

New vulnerabilities

botan: side channel attack

| Package(s): | botan1.10 | CVE #(s): | CVE-2015-7827 | ||||||||||||||||||||

| Created: | May 2, 2016 | Updated: | May 4, 2016 | ||||||||||||||||||||

| Description: | From the Debian advisory:

Use constant time PKCS #1 unpadding to avoid possible side channel attack against RSA decryption. | ||||||||||||||||||||||

| Alerts: |

| ||||||||||||||||||||||

botan: insufficient randomness

| Package(s): | botan1.10 | CVE #(s): | CVE-2014-9742 | ||||

| Created: | May 2, 2016 | Updated: | May 4, 2016 | ||||

| Description: | From the Debian LTS advisory:

A bug in Miller-Rabin primality testing was responsible for insufficient randomness. | ||||||

| Alerts: |

| ||||||

chromium-browser: multiple vulnerabilities

| Package(s): | chromium-browser | CVE #(s): | CVE-2016-1660 CVE-2016-1661 CVE-2016-1662 CVE-2016-1663 CVE-2016-1664 CVE-2016-1665 CVE-2016-1666 | ||||||||||||||||||||||||||||||||||||||||

| Created: | May 2, 2016 | Updated: | May 4, 2016 | ||||||||||||||||||||||||||||||||||||||||

| Description: | From the Red Hat advisory:

Multiple flaws were found in the processing of malformed web content. A web page containing malicious content could cause Chromium to crash, execute arbitrary code, or disclose sensitive information when visited by the victim. | ||||||||||||||||||||||||||||||||||||||||||

| Alerts: |

| ||||||||||||||||||||||||||||||||||||||||||

i7z: denial of service

| Package(s): | i7z | CVE #(s): | |||||

| Created: | April 29, 2016 | Updated: | May 4, 2016 | ||||

| Description: | From the Fedora advisory: i7z-gui: Print_Information_Processor(): i7z_GUI killed by SIGSEGV | ||||||

| Alerts: |

| ||||||

java: three vulnerabilities

| Package(s): | java-1.6.0-ibm | CVE #(s): | CVE-2016-0264 CVE-2016-0363 CVE-2016-0376 | ||||||||||||||||||||||||||||||||||||||||||||||||||||

| Created: | May 2, 2016 | Updated: | May 4, 2016 | ||||||||||||||||||||||||||||||||||||||||||||||||||||

| Description: | From the Red Hat advisory:

CVE-2016-0264 IBM JDK: buffer overflow vulnerability in the IBM JVM CVE-2016-0363 IBM JDK: insecure use of invoke method in CORBA component, incorrect CVE-2013-3009 fix CVE-2016-0376 IBM JDK: insecure deserialization in CORBA, incorrect CVE-2013-5456 fix | ||||||||||||||||||||||||||||||||||||||||||||||||||||||

| Alerts: |

| ||||||||||||||||||||||||||||||||||||||||||||||||||||||

jq: two vulnerabilities

| Package(s): | jq | CVE #(s): | CVE-2015-8863 CVE-2016-4074 | ||||||||||||||||||||||||||||

| Created: | May 4, 2016 | Updated: | December 8, 2016 | ||||||||||||||||||||||||||||

| Description: | From the openSUSE bug report:

CVE-2015-8863: heap buffer overflow in tokenadd() function http://seclists.org/oss-sec/2016/q2/134 CVE-2016-4074: stack exhaustion using jv_dump_term() function http://seclists.org/oss-sec/2016/q2/140 | ||||||||||||||||||||||||||||||

| Alerts: |

| ||||||||||||||||||||||||||||||

kernel: two vulnerabilities

| Package(s): | kernel | CVE #(s): | CVE-2016-3961 CVE-2016-3955 | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| Created: | April 28, 2016 | Updated: | May 4, 2016 | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| Description: | From the Xen advisory:

CVE-2016-3961: Huge (2Mb) pages are generally unavailable to PV guests. Since x86 Linux pvops-based kernels are generally multi purpose, they would normally be built with hugetlbfs support enabled. Use of that functionality by an application in a PV guest would cause an infinite page fault loop, and an OOPS to occur upon an attempt to terminate the hung application. Depending on the guest kernel configuration, the OOPS could result in a kernel crash (guest DoS). From the Red Hat bugzilla entry: CVE-2016-3955: Linux kernel built with the USB over IP(CONFIG_USBIP_*) support is vulnerable to a buffer overflow issue. It could occur while receiving USB/IP packets, when the size value in the packet is greater actual transfer buffer. A user/process could use this flaw to crash the remote host via kernel memory corruption or potentially execute arbitrary code. | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| Alerts: |

| ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

mercurial: code execution

| Package(s): | mercurial | CVE #(s): | CVE-2016-3105 | ||||||||||||||||||||||||||||

| Created: | May 3, 2016 | Updated: | May 18, 2016 | ||||||||||||||||||||||||||||

| Description: | From the Slackware advisory:

This update fixes possible arbitrary code execution when converting Git repos. Mercurial prior to 3.8 allowed arbitrary code execution when using the convert extension on Git repos with hostile names. This could affect automated code conversion services that allow arbitrary repository names. This is a further side-effect of Git CVE-2015-7545. Reported and fixed by Blake Burkhart. | ||||||||||||||||||||||||||||||

| Alerts: |

| ||||||||||||||||||||||||||||||

minissdpd: denial of service

| Package(s): | minissdpd | CVE #(s): | CVE-2016-3178 CVE-2016-3179 | ||||

| Created: | May 4, 2016 | Updated: | May 4, 2016 | ||||

| Description: | From the Debian LTS advisory:

The minissdpd daemon contains a improper validation of array index vulnerability (CWE-129) when processing requests sent to the Unix socket at /var/run/minissdpd.sock the Unix socket can be accessed by an unprivileged user to send invalid request causes an out-of-bounds memory access that crashes the minissdpd daemon. | ||||||

| Alerts: |

| ||||||

ntp: multiple vulnerabilities

| Package(s): | ntp | CVE #(s): | CVE-2015-8139 CVE-2015-8140 | ||||||||||||||||||||||||||||||||||||||||

| Created: | April 29, 2016 | Updated: | May 4, 2016 | ||||||||||||||||||||||||||||||||||||||||

| Description: | From the SUSE bug reports: CVE-2015-8139: To prevent off-path attackers from impersonating legitimate peers, clients require that the origin timestamp in a received response packet match the transmit timestamp from its last request to a given peer. Under assumption that only the recipient of the request packet will know the value of the transmit timestamp, this prevents an attacker from forging replies. CVE-2015-8140: The ntpq protocol is vulnerable to replay attacks. The sequence number being included under the signature fails to prevent replay attacks for two reasons. Commands that don't require authentication can be used to move the sequence number forward, and NTP doesn't actually care what sequence number is used so a packet can be replayed at any time. If, for example, an attacker can intercept authenticated reconfiguration commands that would. for example, tell ntpd to connect with a server that turns out to be malicious and a subsequent reconfiguration directive removed that malicious server, the attacker could replay the configuration command to re-establish an association to malicious server. | ||||||||||||||||||||||||||||||||||||||||||

| Alerts: |

| ||||||||||||||||||||||||||||||||||||||||||

ntp: multiple vulnerabilities

| Package(s): | ntp | CVE #(s): | CVE-2016-1551 CVE-2016-1549 CVE-2016-2516 CVE-2016-2517 CVE-2016-2518 CVE-2016-2519 CVE-2016-1547 CVE-2016-1548 CVE-2016-1550 | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| Created: | May 2, 2016 | Updated: | May 16, 2016 | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| Description: | From the Slackware advisory:

CVE-2016-1551: Refclock impersonation vulnerability, AKA: refclock-peering CVE-2016-1549: Sybil vulnerability: ephemeral association attack, AKA: ntp-sybil - MITIGATION ONLY CVE-2016-2516: Duplicate IPs on unconfig directives will cause an assertion botch CVE-2016-2517: Remote configuration trustedkey/requestkey values are not properly validated CVE-2016-2518: Crafted addpeer with hmode > 7 causes array wraparound with MATCH_ASSOC CVE-2016-2519: ctl_getitem() return value not always checked CVE-2016-1547: Validate crypto-NAKs, AKA: nak-dos CVE-2016-1548: Interleave-pivot - MITIGATION ONLY CVE-2016-1550: Improve NTP security against buffer comparison timing attacks, authdecrypt-timing, AKA: authdecrypt-timing | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| Alerts: |

| ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

openssl: multiple vulnerabilities

| Package(s): | openssl | CVE #(s): | CVE-2016-2108 CVE-2016-2107 CVE-2016-2105 CVE-2016-2106 CVE-2016-2109 | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| Created: | May 3, 2016 | Updated: | June 1, 2016 | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| Description: | From the Ubuntu advisory:

Huzaifa Sidhpurwala, Hanno Böck, and David Benjamin discovered that OpenSSL incorrectly handled memory when decoding ASN.1 structures. A remote attacker could use this issue to cause OpenSSL to crash, resulting in a denial of service, or possibly execute arbitrary code. (CVE-2016-2108) Juraj Somorovsky discovered that OpenSSL incorrectly performed padding when the connection uses the AES CBC cipher and the server supports AES-NI. A remote attacker could possibly use this issue to perform a padding oracle attack and decrypt traffic. (CVE-2016-2107) Guido Vranken discovered that OpenSSL incorrectly handled large amounts of input data to the EVP_EncodeUpdate() function. A remote attacker could use this issue to cause OpenSSL to crash, resulting in a denial of service, or possibly execute arbitrary code. (CVE-2016-2105) Guido Vranken discovered that OpenSSL incorrectly handled large amounts of input data to the EVP_EncryptUpdate() function. A remote attacker could use this issue to cause OpenSSL to crash, resulting in a denial of service, or possibly execute arbitrary code. (CVE-2016-2106) Brian Carpenter discovered that OpenSSL incorrectly handled memory when ASN.1 data is read from a BIO. A remote attacker could possibly use this issue to cause memory consumption, resulting in a denial of service. (CVE-2016-2109) As a security improvement, this update also modifies OpenSSL behaviour to reject DH key sizes below 1024 bits, preventing a possible downgrade attack. | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| Alerts: |

| ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

openssl: information leak

| Package(s): | lib32-openssl openssl | CVE #(s): | CVE-2016-2176 | ||||||||||||||||||||||||

| Created: | May 4, 2016 | Updated: | May 12, 2016 | ||||||||||||||||||||||||

| Description: | From the Arch Linux advisory:

ASN1 Strings that are over 1024 bytes can cause an overread in applications using the X509_NAME_oneline() function on EBCDIC systems. This could result in arbitrary stack data being returned in the buffer. | ||||||||||||||||||||||||||

| Alerts: |

| ||||||||||||||||||||||||||

openvas: cross-site scripting

| Package(s): | openvas | CVE #(s): | CVE-2016-1926 | ||||||||||||||||||||||||||||||||||||||||

| Created: | May 2, 2016 | Updated: | May 9, 2016 | ||||||||||||||||||||||||||||||||||||||||

| Description: | From the Red Hat bugzilla:

It was reported that openvas-gsa is vulnerable to cross-site scripting due to improper handling of parameters of get_aggregate command. If the attacker has access to a session token of the browser session, the cross site scripting can be executed. Affects versions >= 6.0.0 and < 6.0.8. | ||||||||||||||||||||||||||||||||||||||||||

| Alerts: |

| ||||||||||||||||||||||||||||||||||||||||||

oxide-qt: code execution

| Package(s): | oxide-qt | CVE #(s): | CVE-2016-1578 | ||||

| Created: | April 28, 2016 | Updated: | May 4, 2016 | ||||

| Description: | From the Ubuntu advisory:

A use-after-free was discovered when responding synchronously to permission requests. An attacker could potentially exploit this to cause a denial of service via application crash, or execute arbitrary code with the privileges of the user invoking the program. (CVE-2016-1578) | ||||||

| Alerts: |

| ||||||

php: multiple vulnerabilities

| Package(s): | php | CVE #(s): | CVE-2016-4537 CVE-2016-4538 CVE-2016-4539 CVE-2016-4540 CVE-2016-4541 CVE-2016-4542 CVE-2016-4543 CVE-2016-4544 | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| Created: | May 2, 2016 | Updated: | May 19, 2016 | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| Description: | The php package has been updated to version 5.6.21, which fixes several security issues and other bugs. See the upstream ChangeLog for more details. | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| Alerts: |

| ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

php-ZendFramework: multiple vulnerabilities

| Package(s): | php-ZendFramework | CVE #(s): | |||||

| Created: | May 2, 2016 | Updated: | May 4, 2016 | ||||

| Description: | From the Mageia advisory:

The php-ZendFramework package has been updated to version 1.12.18 to fix a potential information disclosure and insufficient entropy vulnerability in the word CAPTCHA (ZF2015-09) and several other functions (ZF2016-01). | ||||||

| Alerts: |

| ||||||

roundcubemail: three vulnerabilities

| Package(s): | roundcubemail | CVE #(s): | CVE-2015-8864 CVE-2016-4068 CVE-2016-4069 | ||||||||||||||||||||||||||||||||

| Created: | May 2, 2016 | Updated: | May 4, 2016 | ||||||||||||||||||||||||||||||||

| Description: | From the Red Hat bugzilla:

(CVE-2015-8864, CVE-2016-4068) Fix XSS issue in SVG images handling (CVE-2016-4069) Protect download urls against CSRF using unique request tokens | ||||||||||||||||||||||||||||||||||

| Alerts: |

| ||||||||||||||||||||||||||||||||||

subversion: multiple vulnerabilities

| Package(s): | subversion | CVE #(s): | CVE-2016-2167 CVE-2016-2168 | ||||||||||||||||||||||||||||||||||||

| Created: | April 29, 2016 | Updated: | June 8, 2016 | ||||||||||||||||||||||||||||||||||||

| Description: | From the Debian advisory:

CVE-2016-2167 - Daniel Shahaf and James McCoy discovered that an implementation error in the authentication against the Cyrus SASL library would permit a remote user to specify a realm string which is a prefix of the expected realm string and potentially allowing a user to authenticate using the wrong realm. CVE-2016-2168 - Ivan Zhakov of VisualSVN discovered a remotely triggerable denial of service vulnerability in the mod_authz_svn module during COPY or MOVE authorization check. An authenticated remote attacker could take advantage of this flaw to cause a denial of service (Subversion server crash) via COPY or MOVE requests with specially crafted header. | ||||||||||||||||||||||||||||||||||||||

| Alerts: |

| ||||||||||||||||||||||||||||||||||||||

tardiff: two vulnerabilities

| Package(s): | tardiff | CVE #(s): | CVE-2015-0857 CVE-2015-0858 | ||||||||

| Created: | May 2, 2016 | Updated: | July 28, 2016 | ||||||||

| Description: | From the Debian advisory:

CVE-2015-0857: Rainer Mueller and Florian Weimer discovered that tardiff is prone to shell command injections via shell meta-characters in filenames in tar files or via shell meta-characters in the tar filename itself. CVE-2015-0858: Florian Weimer discovered that tardiff uses predictable temporary directories for unpacking tarballs. A malicious user can use this flaw to overwrite files with permissions of the user running the tardiff command line tool. | ||||||||||

| Alerts: |

| ||||||||||

ubuntu-core-launcher: code execution

| Package(s): | ubuntu-core-launcher | CVE #(s): | CVE-2016-1580 | ||||

| Created: | May 2, 2016 | Updated: | May 4, 2016 | ||||

| Description: | From the Ubuntu advisory:

Zygmunt Krynicki discovered that ubuntu-core-launcher did not properly sanitize its input and contained a logic error when determining the mountpoint of bind mounts when using snaps on Ubuntu classic systems (eg, traditional desktop and server). If a user were tricked into installing a malicious snap with a crafted snap name, an attacker could perform a delayed attack to steal data or execute code within the security context of another snap. This issue did not affect Ubuntu Core systems. | ||||||

| Alerts: |

| ||||||

xen: three vulnerabilities

| Package(s): | xen | CVE #(s): | CVE-2016-4001 CVE-2016-4002 CVE-2016-4037 | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| Created: | May 2, 2016 | Updated: | May 4, 2016 | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| Description: | From the Red Hat bugzilla:

CVE-2016-4001: Qemu emulator built with the Luminary Micro Stellaris Ethernet Controller is vulnerable to a buffer overflow issue. It could occur while receiving network packets in stellaris_enet_receive(), if the guest NIC is configured to accept large(MTU) packets. A remote user/process could use this flaw to crash the Qemu process on a host, resulting in DoS. CVE-2016-4002: Qemu emulator built with the MIPSnet controller emulator is vulnerable to a buffer overflow issue. It could occur while receiving network packets in mipsnet_receive(), if the guest NIC is configured to accept large(MTU) packets. A remote user/process could use this flaw to crash Qemu resulting in DoS; OR potentially execute arbitrary code with privileges of the Qemu process on a host. CVE-2016-4037: Qemu emulator built with the USB EHCI emulation support is vulnerable to an infinite loop issue. It occurs during communication between host controller interface(EHCI) and a respective device driver. These two communicate via a split isochronous transfer descriptor list(siTD) and an infinite loop unfolds if there is a closed loop in this list. A privileges used inside guest could use this flaw to consume excessive CPU cycles & resources on the host. | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| Alerts: |

| ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

xerces-j2: denial of service

| Package(s): | xerces-j2 | CVE #(s): | |||||||||

| Created: | May 4, 2016 | Updated: | May 24, 2016 | ||||||||

| Description: | From the openSUSE advisory:

bsc#814241: Fixed possible DoS through very long attribute names | ||||||||||

| Alerts: |

| ||||||||||

Page editor: Jake Edge

Kernel development

Brief items

Kernel release status

The current development kernel is 4.6-rc6, released on May 1. Linus said: "Things continue to be fairly calm, although I'm pretty sure I'll still do an rc7 in this series." As of this prepatch the code name has been changed to "Charred Weasel."

Stable updates: 4.5.3, 4.4.9, and 3.14.68 were released on May 4.

Quotes of the week

Instead, what I'm seeing now is a trend towards forcing existing filesystems to support the requirements and quirks of DAX and pmem, without any focus on pmem native solutions. i.e. I'm hearing "we need major surgery to existing filesystems and block devices to make DAX work" rather than "how do we make this efficient for a pmem native solution rather than being bound to block device semantics"?

Kernel development news

In pursuit of faster futexes

Futexes, the primitives provided by Linux for fast user-space mutex support, have been explored many times in these pages. They have gained various improvements over the years such as priority inheritance and robustness in the face of processes dying. But it appears that there is still at least one thing they lack: a recent patch set from Thomas Gleixner, along with a revised version, aims to correct the unfortunate fact that they just aren't fast enough.

The claim that futexes are fast (as advertised by the "f" in the name) is primarily based on their behavior when there is no contention on any specific futex. Claiming a futex that no other task holds, or releasing a futex that no other task wants, is extremely quick; the entire operation happens in user space with no involvement from the kernel. The claims that futexes are not fast enough, instead, focus on the contended case: waiting for a busy lock, or sending a wakeup while releasing a lock that others are waiting for. These operations must involve calls into the kernel as sleep/wakeup events and communication between different tasks are involved. It is expected that this case won't be as fast as the uncontended case, but hoped that it can be faster than it is, and particularly that the delays caused can be more predictable. The source of the delays has to do with shared state managed by the kernel.

Futex state: not everything is a file (descriptor)

Traditionally in Unix, most of the state owned by a process is represented by file descriptors, with memory mappings being the main exception. Uniformly using file descriptors provides a number of benefits: the kernel can find the state using a simple array lookup, the file descriptor limit stops processes from inappropriately overusing memory, state can easily be released with a simple close(), and everything can be cleaned up nicely when the process exits.

Futexes do not make use of file descriptors (for general state management) so none of these benefits apply. They use such a tiny amount of kernel space, and then only transiently, that it could be argued that the lack of file descriptors is not a problem. Or at least it could until the discussion around Gleixner's first patch set, where exactly this set of benefits was found to be wanting. While this first attempt has since been discarded in favor of a much simpler approach, exploring it further serves to highlight the key issues and shows what a complete solution might look like.

If we leave priority inheritance out of the picture for simplicity, there are two data structures that the kernel uses to support futexes. struct futex_q is used to represent a task that is waiting on a futex. There is at most one of these for each task and it is only needed while the task is waiting in the futex(FUTEX_WAIT) system call, so it is easily allocated on the stack. This piece of kernel state doesn't require any extra management.

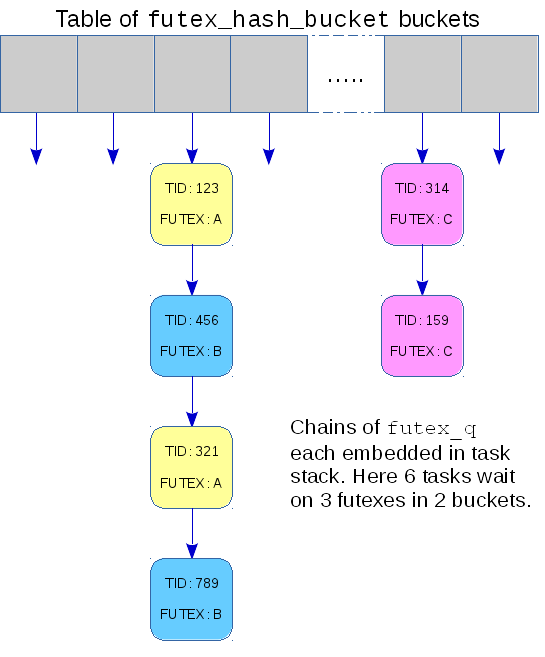

The second data structure is a fixed sized hash table comprising an array of struct futex_hash_bucket; it looks something like this:

Each bucket has a linked list of futex_q structures representing waiting tasks together with a spinlock and some accounting to manage that list. When a FUTEX_WAIT or FUTEX_WAKE request is made, the address of the futex in question is hashed and the resulting number is used to choose a bucket in the hash table, either to attach the waiting task or to find tasks to wake up.

The performance issues arise when accessing this hash table, and they are issues that would not affect access in a file-descriptor model. First, the "address" of a futex can, in the general case, be either an offset in memory or an offset in a file and, to ensure that the correct calculation is made, the fairly busy mmap_sem semaphore must be claimed. A more significant motivation for the current patches is that a single bucket can be shared by multiple futexes. This makes the process of walking the linked list of futex_q structures to find tasks to wake up non-deterministic since the length could vary depending on the extent of sharing. For realtime workloads determinism is important; those loads would benefit from the hash buckets not being shared.

The single hash table causes a different sort of performance problem that affects NUMA machines. Due to their non-uniform memory architecture, some memory is much faster to access than other memory. Linux normally allocates memory resources needed by a task from the memory that is closest to the CPU that the task is running on, so as to make use of the faster access. Since the hash table is all at one location, the memory will probably be slow for most tasks.

Gleixner, the realtime tree maintainer, reported that these problems

can be measured and that in real world applications the hash

collisions "cause performance or determinism issues

".

This is not a particularly new observation: Darren Hart reported in

a summary of the state of futexes in 2009 that "the futex hash

table is shared across all processes and is protected by spinlocks

which can lead to real overhead, especially on large systems.

"

What does seem to be new is that Gleixner has a proposal to fix the

problems.

Buckets get allocated instead of shared

The core of Gleixner's initial proposal was to replace use of the global table of buckets, shared by all futexes, with dynamically allocated buckets — one for each futex. This was an opt-in change: a task needed to explicitly request an attached futex to get one that has its own private bucket in which waiting tasks are queued.

If we return to the file descriptor model mentioned earlier, kernel state is usually attached via some system call like open(), socket(), or pipe(). These calls create a data structure — a struct file — and return a file descriptor, private to the process, that can be used to access it. Often there will be a common namespace so that two processes can access the same thing: a shared inode might be found by name and referenced by two private files each accessed through file descriptors.

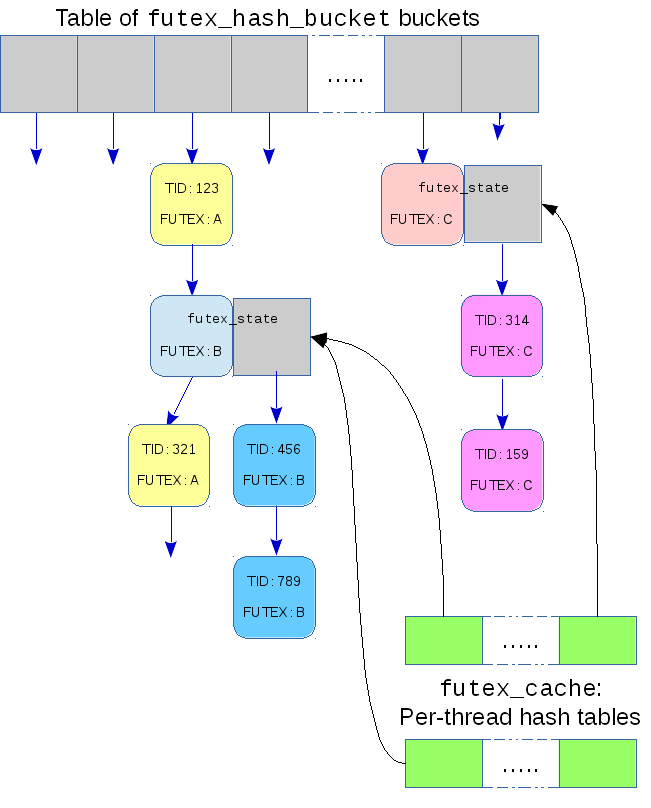

Each of these ideas are present in Gleixner's implementation, though with different names. In place of a file descriptor there is the task-local address of the futex that is purely a memory address, never a file offset. It is hashed for use as a key to a new per-task hash table — the futex_cache. In place of the struct file, the hash table has a futex_cache_slot that contains information about the futex. Unlike most hash tables in the kernel, this one doesn't allow chaining: if a potential collision is ever detected the size of the hash table is doubled.

In place of the shared inode, attached futexes have a shared futex_state structure that contains the global bucket for that futex. Finally, to serve as a namespace, the existing global hash table is used. Each futex_state contains a futex_q that can be linked into that table.

With this infrastructure in place, a task that wants to work with an attached futex must first attach it:

sys_futex(FUTEX_ATTACH | FUTEX_ATTACHED, uaddr, ....);

and can later detach it:

sys_futex(FUTEX_DETACH | FUTEX_ATTACHED, uaddr, ....);

All operations on the attached futex would include the FUTEX_ATTACHED flag to make it clear they expect an attached futex, rather than a normal on-demand futex.

The FUTEX_ATTACH behaves a little like open() and finds or creates a futex_state by performing a lookup in the global hash table, and then attaches it to the task-local futex_cache. All future accesses to that futex will find the futex_state with a lookup in the futex_cache which will be a constant-time lockless access to memory that is probably on the same NUMA node as the task. There is every reason to expect that this would be faster and Gleixner has some numbers to prove it, though he admitted they were a synthetic benchmark rather than a real-world load.

It's always about the interface

The main criticisms that Gleixner's approach received were not that he was re-inventing the file-descriptor model, but that he was changing the interface at all.

Having these faster futexes was not contentious. Requiring, or even allowing, the application programmer to choose between the old and the new behavior is where the problem lies. Linus Torvalds in particular didn't think that programmers would make the right choice, primarily because they wouldn't have the required perspective or the necessary information to make an informed choice. The tradeoffs are really at the system level rather than the application level: large memory systems, particularly NUMA systems, and those designed to support realtime loads would be expected to benefit. Smaller systems or those with no realtime demands are unlikely to notice and could suffer from the extra memory allocations. So while a system-wide configuration might be suitable, a per-futex configuration would not. This seems to mean that futexes would need to automatically attach without requiring an explicit request from the task.

Torvald Riegel supported this conclusion from the different

perspective provided by glibc. When using futexes to implement, for

example, C11 mutexes, "there's no good place to add the

attach/detach calls

" and no way to deduce whether it is worth

attaching at all.

It is worth noting that the new FUTEX_ATTACH interface makes the mistake of conflating two different elements of functionality. A task that issues this request is both asking for the faster implementation and agreeing to be involved in resource management, implicitly stating that it will call FUTEX_DETACH when the futex is no longer needed. Torvalds rejected the first of these as inappropriate and Riegel challenged the second as being hard to work with in practice. This effectively rang the death knell for explicit attachment.

Automatic attachment

Gleixner had already considered automatic attachment but had rejected it because of problems with, to use his list:

- Memory consumption

- Life time issues

- Performance issues due to the necessary allocations

Starting with the lifetime issues, it is fairly clear that the lifetime of the futex_state and futex_cache_slot structures would start when a thread needed to wake or wait on a futex. When the lifetime ends is the interesting question and, while it wasn't discussed, there seem to be two credible options. The easy option is for these structures to remain until the thread(s) using them exits, or at least until the memory containing the futex is unmapped. This could be long after the futex is no longer of interest, and so is wasteful.

The more conservative option would be to keep the structures on some sort of LRU (least-recently used) list and discard the state for old futexes when the total allocated seems too high. As this would introduce a new source of non-determinism in access speed, the approach is likely a non-starter, so wastefulness is the only option.

This brings us to memory consumption. Transitioning from the current implementation to attached futexes changes the kernel memory used per idle futex from zero to something non-zero. This may not be a small change. It is easy to imagine an application embedding a futex in every instance of some object that is allocated and de-allocated in large numbers. Every time such a futex suffers contention, an extra in-kernel data structure would be created. The number of such would probably not grow quickly, but it could just keep on growing. This would particularly put pressure on the futex_cache which could easily become too large to manage.

The performance issues due to extra allocations are not a problem with

explicit attachment for, as Gleixner later clarified:

"Attach/detach is not really critical.

" With implicit

attachment they would happen at first contention which would introduce

new non-determinism. A realtime task working with automatically

attached futexes would probably avoid this by issuing some no-op

operation on a futex just to trigger the attachment at a predictable

time.

All of these problems effectively rule out implicit attachment, meaning that, despite the fact that they remove nearly all the overhead for futex accesses, attached futexes really don't have a future.

Version two: no more attachment

Gleixner did indeed determine that attachment has no future and came up with an alternate scheme. The last time that futex performance was a problem, the response was to increase the size of the global hash table and enforce an alignment of buckets to cache lines to improve SMP behavior. Gleixner's latest patch set follows the same idea with a bit more sophistication. Rather than increase the single global hash table a "sharding" approach is taken, creating multiple distinct hash tables for multiple distinct sets of futexes.

Futexes can be declared as private, in which case they avoid the mmap_sem semaphore and can be only be used by threads in a single process. The set of private futexes for a given process form the basis for sharding and can, with the new patches, have exclusive access to a per-process hash table of futex buckets. All shared futexes still use the single global hash table. This approach addresses the NUMA issue by having the hash table on the same NUMA node as the process, and addresses the collision issue by dedicating more hash-table space per process. It only helps with private futexes, but these seem to be the style most likely to be used by applications needing predictable performance.

The choice of whether to allocate a per-process hash table is left as a system-wide configuration which is where the tradeoffs can best be assessed. The application is allowed a small role in the choice of when that table is allocated: a new futex command can request immediate allocation and suggest a preferred size. This avoids the non-determinism that would result from the default policy of allocation on first conflict.

It seems likely that this is the end of the story for now. There has been no distaste shown for the latest patch set and Gleixner is confident that it solves his particular problem. There would be no point aiming for a greater level of perfection until another demonstrated concrete need comes along.

Network filesystem topics

Steve French and Michael Adam led a session in the filesystem-only track at the 2016 Linux Storage, Filesystem, and Memory-Management Summit on network filesystems and some of the pain points for them on Linux. One of the main topics was case-insensitive file-name lookups.

French said that doing case-insensitive lookups was a "huge performance issue in Samba". The filesystem won't allow the creation of files with the wrong case, but files created outside of Samba can have mixed case or file names that collide in a case-insensitive comparison. That could lead to deleting the wrong file, for example.

![Steve French [Steve French]](https://static.lwn.net/images/2016/lsf-french-sm.jpg)

Ric Wheeler suggested that what was really being sought is case-insensitive lookups but preserving the case on file creation. Ted Ts'o said that he has never been interested in handling case-insensitive lookups because Unicode changes the case-folding algorithm with some frequency, which would lead to having to update the kernel code to match that. Al Viro noted that preserving the case can lead to problems in the directory entry (dentry) cache; if both foo.h and FOO.H have been looked up, they will hash to different dentries.

Ts'o said that they would need to hash to the same dentry. Wheeler suggested that the dentry could always be lower case and that the file could have an extended attribute (xattr) that contains the real case-preserved name. That could be implemented by Samba, but there is a problem, as Ts'o pointed out: the Unix side wants to see the file names with the case preserved.

David Howells wondered if the case could simply be folded before the hash is calculated. But the knowledge of case and case insensitivity is not a part of the VFS, Viro said, and the hash is calculated by the filesystems themselves. Ts'o said that currently case insensitivity is not a first-class feature; it is instead just some hacks here and there. If case insensitivity is going to be added to filesystems like ext4, there are some hurdles to clear. For example, there are on-disk hashes in ext4 and he is concerned that changes to the case-folding rules could cause the hash to change, resulting in lost files.

Adam said that handling the case problem is interesting, but there are other problems for network filesystems. He noted that NFS is becoming more like Samba over time. That means that some of the problems that Samba is handling internally will be need to be solved for NFS, as well, though there will be subtle differences between them.

Both the "birth time" attribute for files and rich ACLs were mentioned as areas where

standard access mechanisms are needed, though there are plenty of others.

![Michael Adam [Michael Adam]](https://static.lwn.net/images/2016/lsf-adam-sm.jpg) The problem is that filesystems provide different ways to get these pieces

of information, such as ioctl() commands or from xattrs. French

said there should be some kind of system call to hide those differences.

The problem is that filesystems provide different ways to get these pieces

of information, such as ioctl() commands or from xattrs. French

said there should be some kind of system call to hide those differences.

The perennially discussed xstat() system call was suggested as that interface, but discussions of xstat() always result in lots of bikeshedding about which attributes it should handle, Viro said. Ts'o said that "people try to do too much" with xstat(). In fact, there was a short session on xstat() later in the day that tried to reduce the scope of the system call with an eye toward getting something merged.

If there are twenty problems that can't be solved for network filesystems and five that can, even getting three of those solved would be a nice start, French said. There are issues for remote DMA (RDMA) and how to manage a direct copy from a device, for example. There are also device characteristics (e.g. whether it is an SSD) that applications want to know. Windows applications want to be able to determine attributes like alignment and seek penalty, but there is no consistent way to get that information. In addition, French said he doesn't want to have to decide whether a filesystem is integrity protected, but wants to query for it in some standard way.

Christoph Hellwig has been suggesting that filesystems move away from xattrs and to standardized filesystem ioctl() commands, French said. Ts'o said that the problem with xattrs is that they have become a kind of ASCII ioctl(); filesystems are parsing and creating xattrs that don't live on disk. At that point, the time for the session expired.

xstat()

The proposed xstat() system call, which is meant to extend the functionality of the stat() call to get additional file-status information, has been discussed quite a bit over the years, but has never been merged. The main impediment seems to be a lot of bikeshedding about how much information—and which specific pieces—will be returned. David Howells led a short filesystem-only discussion on xstat() at the 2016 Linux Storage, Filesystem, and Memory-Management Summit.

Howells presented a long list of possibilities that could be added to the structure for additional file status information to be returned by a call like xstat()—things like larger timestamps, the creation (or birth) time for a file, data version number (for some filesystems), inode generation number, and more. In general, there are more fields, with some that have grown larger, for xstat().

![David Howells [David Howells]](https://static.lwn.net/images/2016/lsf-howells-sm.jpg)

There is also space at the end of the structure for growth. There are ways for callers to indicate what information they are interested in, as well as ways for the filesystem to indicate which pieces of valid information have been returned.

Howells noted that Dave Chinner wanted more I/O parameters (e.g. preferred read and write sizes, erase block size). There were five to seven different numbers that Chinner wanted, but those could always be added later, he said.

There are also some useful information flags that will be returned. Those will indicate if the file is a kernel file (e.g. in /proc or /sys), if it is compressed (and thus will result in extra latency when accessed), if it is encrypted, or if it is a sparse file. Windows has many of these indications.

But Ted Ts'o complained that there are two different definitions of a compressed file. It could mean that the file is not compressible, because it has already been done, or it could mean that the filesystem has done something clever and a read will return the real file contents. It is important to clearly define what the flag means. The FS_IOC_GETFLAGS ioctl() command did not do so, he said, so he wanted to ensure that the same mistake is not made with xstat().

There are other pieces of information that xstat() could return, Howells said. For example, whether accessing the file will cause an automount action or getting "foreign user ID" information for filesystems that don't have Unix-style UIDs or that have UIDs that do not map to the local system. There are also the Windows file attributes (archive, hidden, read-only, and system) that could be returned.

Ts'o suggested leaving out anything that did not have a clear definition of what it meant. That might help get xstat() merged. Others can be added later, he said.

Howells then described more of the functionality in his current version. There are three modes of operation. The standard mode would work the same way that stat() works today; it would force a sync of the file and retrieve an update from the server (if there is one). The second would be a "sync if we need to" mode; if only certain information that is stored locally is needed, it would simply be returned, but if the information requested required an update from the server (e.g. atime), that will be done. The third, "no sync" mode, means that only local values will be used; "it might be wrong, but it will be fast". For local filesystems, all three modes work the same way.

Jeff Layton asked: "How do we get it in without excessive bikeshedding?" He essentially answered his own question by suggesting that Howells start small and simply add "a few things that people really want". Joel Becker suggested that only parameters with "actual users in some upstream" be supported. That could help trim the list, he said.

Howells said that he asked for comments from various upstreams, but that only Samba had responded. Becker reiterated that whatever went in should be guided by actual users, since it takes work to support adding these bits of information. Howells agreed, noting that leaving extra space and having the masks and flags will leave room for expansion.

As it turns out, Howells posted a new patch set after LSFMM that reintroduces xstat() as the statx() system call.

Stream IDs and I/O hints

I/O hints are a way to try to give storage devices information that will allow them to make better decisions about how to store the data. One of the more recent hints is to have multiple "streams" of data that is associated in some way, which was mentioned in a storage standards update session the previous day. Changho Choi and Martin Petersen led a session at the 2016 Linux Storage, Filesystem, and Memory-Management Summit to flesh out more about streams, stream IDs, and I/O hints in general.

![Changho Choi [Changho Choi]](https://static.lwn.net/images/2016/lsf-choi-sm.jpg)

Choi said that he is leading the multi-stream specification and software-development work at Samsung. There is no mechanism for storage devices to expose their internal organization to the host, which can lead to inefficient placement of data and inefficient background operations (e.g. garbage collection). Streams are an attempt to provide better collaboration between the host and the device. The host gives hints to the device, which will then place the data in the most efficient way. That leads to better endurance as well as improved and consistent performance and latency, he said.

A stream ID would be associated with operations for data that is expected to have the same lifetime. For example, temporary data, metadata, and user data could be separated into their own streams. The ID would be passed down to the device using the multi-stream interface and the device could put the data in the same erase blocks to avoid copying during garbage collection.

For efficiency, proper mapping of data to streams is essential, Choi said. Keith Packard noted that filesystems try to put writes in logical block address (LBA) order for rotating media and wondered if that was enough of a hint. Choi said that more information was needed. James Bottomley suggested that knowing the size and organization of erase blocks on the device could allow the kernel to lay out the data properly.

But there are already devices shipping with the multi-stream feature, from Samsung and others, Choi said. It is also part of the T10 (SCSI) standard and will be going into T13 (ATA) and NVM Express (NVMe) specifications.

![Martin Petersen [Martin Petersen]](https://static.lwn.net/images/2016/lsf-petersen-sm.jpg)

Choi suggested exposing an interface for user space that would allow applications to set the stream IDs for writes. But Bottomley asked if there was really a need for a user-space interface. In the past, hints exposed to application developers went largely unused. It would be easier if the stream IDs were all handled by the kernel itself. He was also concerned that there would not be enough stream IDs available, so the kernel would end up using them all; none would be available to offer to user space.

Martin Petersen said that he was not against a user-space interface if one was needed, but suggested that it would be implemented with posix_fadvise() or something like that rather than directly exposing the IDs to user space. Choi thought that applications might have a better idea of the lifetime of their data than the kernel would, however.

At that point, Petersen took over to describe some research he had done on hints: how they are used and which are effective. There are several conduits for hints in the kernel, including posix_fadvise(), ioprio (available using ioprio_set()), the REQ_META flag for metadata, NFS v4.2, SCSI I/O advice hints, and so on. There are tons of different hints available; vendors implement different subsets of them.

So he wanted to try to figure out which hints actually make a difference. He asked internally (at Oracle) and externally about available hints, which resulted in a long list. From that, he pared the list back to hints that actually work. That resulted in half a dozen hints that characterize the data:

- Transaction - filesystem or database journals

- Metadata - filesystem metadata

- Paging - swap

- Realtime - audio/video streaming

- Data - normal application I/O

- Background - backup, data migration, RAID resync, scrubbing

The original streams proposal requires that the block layer request a stream ID from a device by opening a stream. Eventually those streams would need to be closed as well. For NVMe, streams are closely tied to the hardware write channels, which are a scarce resource. The explicit stream open/close is not popular and is difficult to do in some configurations (e.g. multipath).

So Petersen is proposing a combination of hints and streams. Device hints would be set based on knowledge the kernel has about the I/O. The I/O priority would be used to set the background I/O class hint (though it might move to a REQ_BG request flag), other request flags (REQ_META, REQ_JOURNAL, and REQ_SWAP) would set those hints, and posix_fadvise() flags would also set the appropriate hints.

Stream IDs would be based on files, which would allow sending the file to different devices and getting roughly the same behavior, he said. The proposal would remove the requirement to open and close streams and would provide a common model for all device types, so flash controllers, storage arrays, and shingled magnetic recording (SMR) devices could all make better decisions about data placement. This solution is being proposed to the standards groups as a way to resolve the problems with the existing hints and multi-stream specifications.

Background writeback

The problems with background writeback in Linux have been known for quite some time. Recently, there has been an effort to apply what was learned by network developers solving the bufferbloat problem to the block layer. Jens Axboe led a filesystem and storage track session at the 2016 Linux Storage, Filesystem, and Memory-Management Summit to discuss this work.

The basic problem is that flushing block data from memory to storage

(writeback) can flood the device queues to the point where any other reads and

![Jens Axboe [Jens Axboe]](https://static.lwn.net/images/2016/lsf-axboe-sm.jpg) writes experience high latency. He has posted several versions of a patch

set to

address the problem and believes it is getting close to its final form.

There are fewer tunables and it all just basically works, he said.

writes experience high latency. He has posted several versions of a patch

set to

address the problem and believes it is getting close to its final form.

There are fewer tunables and it all just basically works, he said.

The queues are managed on the device side in ways that are "very loosely based on CoDel" from the networking code. The queues will be monitored and write requests will be throttled when the queues get too large. He thought about dropping writes instead (as CoDel does with network packets), but decided "people would be unhappy" with that approach.

The problem is largely solved at this point. Both read and write latencies are improved, but there is still some tweaking needed to make it work better. The algorithm is such that if the device is fast enough, it "just stays out of the way". It also narrows in on the right queue size quickly and if there are no reads contending for the queues, it "does nothing at all". He did note that he had not yet run the "crazy Chinner test case" again.

Ted Ts'o asked about the interaction with the I/O controller for control groups that is trying to do proportional I/O. Axboe said he was not particularly concerned about that. Controllers for each control group will need to be aware of each other, but it should all "probably be fine".

David Howells asked about writeback that is going to multiple devices. Axboe said that still needs work. Someone else asked about background reads, which Axboe said could be added. Nothing is inherently blocking that, but the work still needs to be done.

Multipage bio_vecs

In the block layer, larger I/O operations tend to be more efficient, but current kernels limit how large those operations can be. The bio_vec structure, which describes the buffer for an I/O operation, can only store a single page of tuples (of page, offset, and length) to describe the I/O buffer. There have been efforts over the years to allow multiple pages of array entries, so that even larger I/O operations can be held in a single bio_vec. Ming Lei led a session at the 2016 Linux Storage, Filesystem, and Memory-Management Summit to discuss patches to support bio_vec structures with multiple pages for the arrays.

Multipage bio_vec structures would consist of multiple, physically

contiguous

pages that could hold a larger array. It is the correct

thing to do, Lei said. It will save memory as there will be fewer

bio_vec structures needed and it will increase the transfer size

![Ming Lei [Ming Lei]](https://static.lwn.net/images/2016/lsf-lei-sm.jpg) for each

struct bio (which contains a pointer to a bio_vec).

Currently, the single-page nature of a bio_vec means that only one

megabyte of I/O can be contained in a single bio_vec; adding

support for multiple pages will remove that limit.

for each

struct bio (which contains a pointer to a bio_vec).

Currently, the single-page nature of a bio_vec means that only one

megabyte of I/O can be contained in a single bio_vec; adding

support for multiple pages will remove that limit.

Jens Axboe agreed that there are benefits to larger bio_vec arrays, but was concerned about requesters getting physically contiguous pages. That would have to be done when the bio is created. Lei said that it is not hard to figure out how many pages will be needed before creating the bio, though.

All of the "magic" is in the bio_vec and bio iterators, one developer in the audience said. So there would be a need to introduce new helpers to iterate over the multipage bio_vec. The new name for the helper would require that all callers change, which would provide a good opportunity to review all of the users of the helpers, Christoph Hellwig said.

The patches also clean up direct access to some fields in bio structures: bi_vcnt, which tracks the number of entries in the bio_vec array, and the pointer to the bio_vec itself (bi_io_vec).