LWN.net Weekly Edition for June 11, 2009

The LGPL and video codecs

Video for the web is generally a contentious topic—the HTML 5 specification does not, yet, have a required codec—but browser developers have begun making their own codec decisions. Google has included support for the patent-encumbered MPEG-4 codecs in its Chrome browser, which has caused some consternation among those who are working on HTML 5. The underlying concern is for web video interoperability, especially for browsers who cannot or will not license codecs, but it has also manifested itself as a question about free software licensing and its relationship to patents.

The Web Hypertext Application Technology Working Group has been working on HTML 5, and a posting from Josh Cogliati to its mailing list was the jumping-off point for a wide-ranging discussion. Cogliati suggested using an MPEG-1 subset as the required video codec for the HTML 5 <video> tag, but, since MPEG-1 is rather old and is considered technically inferior, the idea was not met with much support. Ian Fette noted that Google Chrome has chosen to "support H.264 + AAC as well as Ogg (Theora + Vorbis) for <video>", so MPEG-1 support is not likely to be of interest for Chrome. But the mention of H.264, which is covered by multiple patents and licensed by the MPEG Licensing Authority (MPEG-LA), caused a rather negative reaction among many of the other mailing list participants, some of whom are notably affiliated with competing browsers.

Chrome added support for H.264 by way of FFmpeg, which is a free software implementation of various multimedia formats, and is covered by the LGPL version 2.1 (though some optional parts are GPLv2). This led some, including Anne van Kesteren of Opera Software, to question the relationship of the patents to the LGPL. As he and others saw things, Google couldn't license those patents without passing them on to anyone else who distributed FFmpeg. This is because of LGPL Section 11, which reads, in part, as follows:

Google's position, as outlined by Chris DiBona and Daniel Berlin, is that it has a license from MPEG-LA which licenses the patents for use in Chrome. That license does not say anything about FFmpeg, so there is nothing that "would not permit royalty-free redistribution" of FFmpeg. Thus, from Google's perspective, its patent license does not interact with Section 11 at all.

Others are less sure about that interpretation. Opera CTO Håkon Wium Lie asks:

What am I missing?

Berlin makes the argument that the example in Section 11 must be interpreted with the rest of that section, not in isolation:

But others have interpreted the LGPL differently. Miguel de Icaza said that Moonlight would have preferred to use FFmpeg, along with a license from MPEG-LA, rather than the proprietary codecs it ended up using. He wonders if anyone has checked with the Free Software Foundation (FSF) for their interpretation. Berlin points out that the FSF's opinion is not particularly relevant in this case as only the FFmpeg project has any standing to complain about LGPL violations of its code. So far, FFmpeg has not, publicly at least, complained.

In the end, as DiBona states, Google—along with its lawyers, presumably—is comfortable that it is complying with the terms of the LGPL. Whether that might cause others to re-examine their decisions about using FFmpeg remains to be seen. But the LGPL compliance question is really a bit of a distraction from the real underlying issue regarding patented codecs for web video. Both Mozilla and Opera employees are quite obviously unhappy with Google's decision to support H.264 for the <video> tag.

Mozilla's Robert Sayre points out that the licensing issue, if any, can easily be dealt with by Google, but: "The incredibly sucky outcome is that Chrome ships patent-encumbered 'open web' features, just like Apple. That is reprehensible."

DiBona takes exception to that label:

DiBona's argument is that H.264 is demanded by users because of quality issues. Lie is not so sure, noting: "YouTube is very popular despite the fact that its video clips resemble the transmission from the moon landing in 1969." He continues:

[...] The web is based on free and open formats. Google would not have existed without the web. It will be a terrible tragedy if you tip the scales in favor of patent-encumbered formats on the web. We expect higher standards from you.

That is really the root cause of the dispute. To many, the "open web" demands open codecs. Google doesn't necessarily disagree with the intent, but it is not so idealistic as to ignore a de facto video standard for Chrome. In addition, YouTube, which is owned by Google, has an enormous collection of H.264-encoded video that Google would undoubtedly like to support directly in Chrome.

Slowly but surely, though, the Theora codec is building momentum. Chrome, Opera, and Mozilla will all be supporting it for the <video> tag, the Thusnelda encoder is becoming competitive with H.264, and more sites are starting to carry Theora video. The mailing list thread contained multiple mentions of Dailymotion and Wikipedia as supporters—and, more importantly, adopters—of Theora.

While Mozilla, Opera, and probably others are not forcing Theora as the only choice for their users, they are making it the only default video codec. Plugins or extensions will most certainly support H.264 (along with other codecs), but the idea is that web site owners can be sure that their visitors' browsers will support Theora. Currently, web site owners are often forced to rely on Adobe Flash plugins as their video delivery system; an in-browser solution will clearly be a step up.

There are those—Nokia and Apple among them—that are unconvinced about the unencumbered nature of Theora, and consider it an open question whether there will be patent suits against deep-pocketed implementers. Google would seem to fit that bill, so, with luck, the Chrome implementation will flush out any submarine patents that some patent troll believes Theora infringes. Given that Google was willing to pay for a license from MPEG-LA, one might guess they would be willing to do the same for Theora, but at least that would bring the submarines to the surface. Perhaps that is not the perfect outcome, but is certainly better than the uncertainty that exists today.

OpenMoko: its present and future

OpenMoko has had a tough time. Since announcing in 2006 that it was creating an open, Linux-powered mobile phone, it has been plagued with hardware problems and immature, buggy software (the software stack has been rewritten at least twice). The problems with the product have resulted in poor sales, which caused smart, motivated staff to leave (or be laid off), thus creating a vicious circle.

LWN has chronicled OpenMoko's progress and problems over the years, culminating in another recent round of lay-offs and OpenMoko (OM) refocusing on a mysterious "Project B" about which little is known except that it is not a mobile phone.

Ironically, just at the time OM is (temporarily?) refocusing away from phones, its phone, the Neo FreeRunner (aka GTA02), is becoming a stable, usable phone suitable for use by the typical LWN reader.

There are a number of distributions available for the FreeRunner. The majority of those that have ongoing development are based on a telephony stack called freesmartphone.org (FSO) developed by (now-)former OM employees. This stack provides a number of D-BUS interfaces for controlling various phone components including GPRS, wifi, and GPS, as well as the GSM phone functionality. Different distributions can then build on top of the FSO stack.

Stable Hybrid Release (SHR) was one of the earliest FSO distributions; it originally aimed to be a hybrid (hence the name) of the best bits from the various official OM distributions. It has evolved into a general purpose, community-driven distribution that has regular testing releases (and a continuously updating unstable release) that incorporate new software and features frequently.

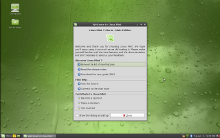

![[SHR Dialer]](https://static.lwn.net/images/om/dialer_sm.png)

OM2009 is OM's officially blessed distribution and, like SHR, it is based on FSO and OpenEmbedded. OM2009 is minimal in the features and software it provides; OM wanted to concentrate on creating a working mobile phone before trying to create a "smart" phone. This is reflected in the choice of the Paroli application, which is a GUI for controlling basic phone functionality such as a dialer and SMS.

Not all the FSO distributions use OpenEmbedded; for example, Hackable:1 (which has created a number of popular applications) is based on Debian, and there are also Gentoo and Slackware distributions. There are also a number of non-FSO based software stacks, including a port of Android.

All the FSO-based distributions are still relatively immature; neither SHR nor OM2009 has yet made a formal stable release, though the "testing" images are widely used. Judging by mailing list traffic and IRC, most users seem to have switched to using them (I've been using SHR for about a month as my daily phone).

Using SHR as a day-to-day phone

![[SHR Main Screen]](https://static.lwn.net/images/om/illumelauncher_sm.png)

SHR contains all of the features you'd expect in a basic phone: dialer, messaging, and contacts applications. They all work well, but are rudimentary. Call quality is noticeably worse than that of my Nokia in noisy environments, but is still acceptable. The phone is configured to suspend in order to save battery life, but reliably wakes up on incoming calls and texts. With a fairly typical usage pattern, the phone battery lasts a couple of days before needing to be recharged, though recharging it every night is not a bad idea.

Wifi, GPRS (the FreeRunner is a 2G phone), and GPS all work and can be configured via the GUI. The default browser (Midori) is more suited to a traditional desktop; it lacks finger scrolling and zooming, which makes using it quite cumbersome. Other browsers are available; in particular, a Hackable:1 community member has created a WebKit-based browser named Woosh, which is designed for the FreeRunner. Woosh is still in its infancy, but if it, or another similar project, matures, it will be a big boon for the OpenMoko platform.

![[SHR Settings]](https://static.lwn.net/images/om/settings_sm.png)

A variety of other software is available; most of it is in the repositories for the various distributions, but opkg.org exists as a useful showcase. Some popular apps that I regularly use include Navit (a GPS car navigation system that requires some fiddling with text files to install and configure), Intone (a music player), and MokoMaze (a simple but addictive accelerometer-based ball-in-maze game). You can also use applications like Dictator to transform your phone into a basic dictation machine or to record phone calls.

There are a large number of keyboards/software input methods available (e.g. Hackable:1's Xkbd and qwo) but partly because the screen is inset from the case (and partly just because it is a touch-screen) entering lots of text can be a laborious operation. Because it's an open phone based on a recent Linux kernel, though, it has support for Bluetooth keyboards which make text entry much more efficient.

In short, it has all the basic requirements for a simple phone. In addition, there is a community creating and refining software for it and you have the ability to do things like ssh in and use vi (or to make use of a simple D-BUS interface to determine your location). There are plenty of rough edges, though: the speaker phone button doesn't seem to work in the dialer, there is currently no GUI for altering the volume during a phone call, and connecting to a wireless network requires two applications, one to turn the wifi power on and the other as a GUI for wpa_supplicant.

There are OM distributors around the world who will happily sell you a FreeRunner; however, it is worth noting that there have been a number of hardware revisions. Those prior to the most recent (A7) revision were susceptible to "buzz" on the line during phone calls with certain combinations of GSM frequencies and carriers. OM has supported distributors (e.g. Golden Delicious and SDG) in providing hardware buzz fixes to earlier models, but confirming which revision of the FreeRunner you are purchasing could prevent a lot of hassle after purchase.

The future is uncertain for OM and its relationship with mobile phones. OM has opened the hardware schematics for the FreeRunner and a community project is making a number of updates. Whether we will ever see new phones and major new software revisions coming from OM itself is an open question, but, given the open, community-oriented nature of both the hardware and the software, that is not necessarily a death knell for the phone. OM has plenty of stock of FreeRunners and can continue to produce more if the demand is there.

Despite the rough edges, the Neo FreeRunner is a usable phone. What sets it apart from other phones is its openness; unlike with other phones, the user, rather than the phone vendor or the carrier, is in control. All the software that runs on the phone's main CPU is open source (the separate GSM module is closed) and hardware schematics are available. However, given the perilous state of OM's finances, if you want open phone hardware with a growing community, now might be a good time to buy one.

PiTiVi video editor previews rewritten core

Video editing stands to get significantly easier for Linux users this year. The GStreamer-based nonlinear video editor (NLE) PiTiVi has released its first public build since undergoing a substantial rewrite. Version 0.13.1 was released May 27, 2009, and adds a handful of user-visible changes but makes substantial improvements under the hood, laying down groundwork for the next development cycle.

Video editing continues to be an unsettled topic among Linux users. Among the handful of applications currently available, each has its share of limitations, user interface quirks, and stability problems. Kino, for example, is very capable at capturing DV video from cameras, but uses an unfamiliar UI for an NLE, without the familiar editing timeline, and requiring a rendering step for every title, transition, or effect. Kdenlive and Cinelerra offer broader feature sets, but are plagued by widely-reported installation and stability problems. For its part, the chief criticism of PiTiVi has always been missing functionality. The rewrite of the 0.13 series, begun in 2008, promises to change that.

PiTiVi (pronounced "P-T-V") uses Python for its user interface and top-level logic, but all audio and video processing — from playback to filters — takes place in GStreamer. That gives the application better performance, the ability to take advantage of GStreamer's multi-threading, and independence of codec and file formats. Any format supported by GStreamer is supported by PiTiVi, which is convenient for users, and distributions can include the application without introducing a package dependency on potentially legally-risky media engines such as FFmpeg. GStreamer's plugin-based abstraction allows PiTiVi to function regardless of whether any particular codec pack — patented, commercial, proprietary, or free — is installed, and does not require maintaining a specially-tailored playback engine to avoid legal conflicts.

![[PiTiVi 0.11.3]](https://static.lwn.net/images/pitivi-0.11.3_sm.png)

Users will notice that PiTiVi 0.13.1 supports multiple layers in the timeline, permitting simple overlay of multiple video and audio sources, support for still images as video tracks, and the ability to trim audio and video clips in the editor. As the before (at left) and after (below, at right) screenshots depict, audio waveforms and video thumbnails are now used to represent tracks in the timeline, making it easier to distinguish between clips while editing. In addition to the visible changes, however, the more substantial work of the 0.13.x series is the refactoring of the core, which will eventually make the editor more powerful.

Refactoring

![[PiTiVi 0.13.1]](https://static.lwn.net/images/pitivi-0.13.1_sm.png)

Lead developer Edward Hervey said that the refactored application core reflects several years of feedback from users and discussions with professional video editors. "The previous design was more the evolution of a certain view I had of video editing and its workflow back in 2003." The old design included a lot of hard-coded features that held the application back in real-world workflows, such as limits on the number of audio and video tracks, a limit on the number of layers, and only being able to have one project open at a time.

One example Hervey cites is the internal representation of the editing timeline. The old PiTiVi core was tied directly to the timeline provided by the Gnonlin library (a dependency of PiTiVi that implements NLE features as GStreamer plugins), which limited it to one object per stream and track. The new core adds an intermediary layer, permitting multiple objects and tracks.

The rewrite is also considerably more modular, making it easier for the developers to add additional features. Features planned for the 0.13.x cycle include critical editing tasks like titling, transitions, and effects — operations which are already possible in GStreamer plugins. Hervey explained:

Most of the user interface work has been taken on by new team addition Brandon Lewis, with assistance from Jean-Francois Tam. As is often the case, the team is trying to maintain a clean separation between the application core and its user interface, but for PiTiVi this choice has implications for its customizability. Hervey explained that the goal is to keep the UI as simple as possible in order to make the program usable by novices, but to leave room for building additional functionality on top of it through the use of plugins.

GStreamer

PiTiVi development is intrinsically tied to GStreamer development. As Hervey explained in an interview with Gnomedesktop.org, he, Lewis, and core developer Alessandro Decina are all employed by Collabora Multimedia, which sponsors ongoing GStreamer work alongside other open source projects. Some of Collabora's revenue comes from consulting jobs that involve building custom GStreamer processing and editing solutions, so building a dependable NLE is an important task, which influences the direction in which GStreamer itself moves.

One such influence was the notion of "segments" that was added in GStreamer 0.10. Segments allow PiTiVi to track in- and out-markers for each video and audio buffer without altering the buffer itself, greatly speeding up the process of rearranging clips in the timeline. "Previously in order to do time-shifting we'd have to modify the timestamps of all buffers, whereas with segments we can give information as to when buffers that follow that event will be displayed, at what speed, etc..." In addition, Hervey added, PiTiVi development tests all GStreamer plugins to ensure that they fully conform to the API, such as making sure that all decoders can do sample-accurate decoding.
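The contrast Hervey describes can be illustrated with a small model. The sketch below is not the GStreamer API: `Buffer`, `Segment`, and `to_running_time` are hypothetical names chosen for illustration, and the mapping is a deliberately simplified version of the idea that a segment tells downstream elements when the buffers that follow will be displayed and at what speed.

```python
from dataclasses import dataclass, replace
from typing import Optional

SECOND = 1_000_000_000  # timestamps are modelled in nanoseconds


@dataclass(frozen=True)
class Buffer:
    """A media buffer; its timestamp is never modified in this model."""
    timestamp: int


@dataclass(frozen=True)
class Segment:
    """Hypothetical, simplified stand-in for a segment (not a real API)."""
    start: int          # in-marker: first source timestamp that is shown
    stop: int           # out-marker: first source timestamp that is not shown
    base: int = 0       # position of the clip on the output timeline
    rate: float = 1.0   # playback speed

    def to_running_time(self, buf: Buffer) -> Optional[int]:
        """Map a buffer to its output time, or None if it is trimmed away."""
        if not (self.start <= buf.timestamp < self.stop):
            return None
        return self.base + int((buf.timestamp - self.start) / self.rate)


# Five one-second buffers from a source clip.
clip = [Buffer(i * SECOND) for i in range(5)]

# Show source seconds 1 and 2, placed at second 10 of the timeline.
segment = Segment(start=1 * SECOND, stop=3 * SECOND, base=10 * SECOND)

# Moving the clip only creates a new segment; no buffer is touched.
moved = replace(segment, base=20 * SECOND)

for seg in (segment, moved):
    print([seg.to_running_time(b) for b in clip])
```

In this model, rearranging a clip costs one new `Segment` value regardless of how many buffers the clip contains, whereas rewriting timestamps would touch every buffer.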

Furthermore, as with any GStreamer project, the modular nature of the media framework means that other projects can share in the improvements that originate in PiTiVi, and vice-versa. Because PiTiVi represents a different usage of GStreamer than the considerably more common "media player" paradigm, it stress-tests plugins in different ways.

Up next on PiTiVi

The next milestone in PiTiVi development is scheduled to be released in July, adding essential features like undo/redo, blending and compositing, and video capture. Because it is officially designated a development branch, the team has not pushed the 0.13.x series for inclusion in Linux distributions. Until then, users interested in testing the development releases can either download and compile source code packages, or if using Debian or Ubuntu, access development binaries through the project's Personal Package Archive (PPA). The PPA includes updated packages for GStreamer, GStreamer plugins, Gnonlin, and other key dependencies. Building from source also requires satisfying these dependencies, so instructions are provided on the PiTiVi wiki.

NLEs are notoriously complex beasts, and a stable, capable NLE remains a missing piece of the free software desktop for many users. Because it can leverage GStreamer for the heavy lifting of media processing, PiTiVi stands a better chance than most young projects of reaching dependable usability, and if the first release of the newly-rewritten core in 0.13.1 is any indication, the application is well on its way.

Sugar moves from the shadow of OLPC

Fourteen months ago, when One Laptop per Child (OLPC) announced that it was preparing to work with Windows, the free software community treated the news as a betrayal. However, nowhere was the reaction stronger than within OLPC itself. Within days, Walter Bender, who oversaw the development of Sugar, OLPC's graphical interface, had resigned and announced the creation of Sugar Labs, a non-profit organization for ensuring Sugar's continuation. Now, looking back over the year since then, Bender considers that Sugar Labs has progressed steadily towards its main goals: getting organized, taking advantage of the nature of free software to enhance education, and developing a community of teachers and students to help direct Sugar's development.

"It turns out that One Laptop per Child didn't really go down the Windows path", Bender admitted. "They're still shipping Linux and Sugar with every laptop, and they've just announced that their 1.5 machine is also going to be Sugar-based." However, he had no way of knowing that last year.

At any rate, the proposed change of operating systems was not the only reason for the creation of Sugar Labs. "At the same time, I was thinking that Sugar should be broader than just one particular hardware platform," Bender added. "We spent a lot of the first year undoing any specific dependencies on the One Laptop per Child hardware. What we've done is made Sugar run pretty much everywhere."

Sugar is now available in most major distributions, and Sugar Labs is currently in the middle of developing Sugar on a Stick, a USB installation. In addition, Sugar Labs has been discussing Sugar deployment with several netbook manufacturers.

"I think we've got a lot of ways to go in terms of raising awareness about Sugar", Bender said. "I don't think the world knows that Sugar's out there, and that it's a separate thing that can run outside of One Laptop per Child. That will take time. Our marketing budget is the same as all the rest of our budgets (zero), but we'll get there."

At the same time, Sugar Labs has established a structure more in keeping with the free software and interactive learning ideals of its members. Modeling itself after free software organizations like the GNOME Foundation, Sugar Labs is run by an elected Oversight Board, which is deliberately designed "to be pretty toothless. About the only power that the Oversight Board has is to appoint a committee to solve problems."

Instead, it is the project's various teams that make most of the decisions, with all discussions occurring on the same IRC channel. As with most free software projects, this organization is born out of necessity, since Sugar Labs does not actually have any office space, but Bender suggested that it is also an advantage in building the type of community that project members desire.

By contrast, he suggested that OLPC, which does have a physical location, "was struggling a bit to maintain communication with the global community. Conversations were not deliberately obfuscated, but, because they were happening in a room as opposed to online, a lot of people weren't hearing the conversation, and a lot of people felt they weren't part of the project because of that. We don't have one physical center of activity, and that plays out to our advantage, I think."

All in all, Bender stated, "Things have gone remarkably smoothly. We've been a pretty disciplined bunch. For instance, if you look at our release map, we're not letting features get ahead of our ability to deliver something that is robust and on time. For the most part, it's been a great year."

Free software ideals and education

However, for Bender, Sugar Labs' greatest accomplishments have not been in its organization, but in the advancement of its educational goals, which Bender views as meshing remarkably well with the ideals of free software.

From the start, Sugar has been intended as more than an interface. Instead, Sugar Labs prefers to describe the software it develops as a learning platform. "We're not interested in anything except learning," Bender said. "As we make decisions about what we release and what we do, the first question we always ask is, 'How does this impact learning?' But 'platform' is important, too, because Sugar is not just a finished product that we give you to use. The implication is that Sugar is the platform on which you are going to build as well. We give you some scaffolding, but that scaffolding is there for you to build upon, as opposed to something you just use."

In this respect, Bender views Sugar as radically different from proprietary learning systems. "Often times, the tendency is to give children toy versions of a program, so that they don't hurt themselves. Also, the professional versions are expensive. Sugar takes a different approach. We want to give them tools that are real, and don't limit them in any way. But, at the same time, we're very cognizant that we have a path that doesn't require them to be an expert to get started. Really, that's an approach that is possible to achieve in free software, but quite difficult to achieve in any other way."

For example, Sugar's music activities begin with "tools that are literally accessible by a two year old: a sort of pound-on-the-keyboard activity. They can take that tool and use it over the network to make a band, and use it for sequencing and to compose music. Then they can go into a synthesizer and start to play with wave forms, random number generators and envelope curves. Then they can go into a scripting language called Csound and start to understand how music is scripted in the machine, and that's the same language that the pros use in Hollywood for scripting special effects. Then they can go into our View Source editor and hack the Python code that's underlying all these tools. They have the ability to go deeper into anything, and anything is determined by the child's interests, [or] by the teacher. It's not determined by the people writing the code."

In much the same way, Bender considers the collaboration framework that is part of the basic Sugar interface as a direct invitation for open-ended critical discussion. "This has a very direct connection to free software", he said. "One of the things about free software is that not only do you share, but you also engage in a critical dialogue about what you're sharing. The idea that ideas are there to be critiqued is one that a child has got to learn, and Sugar collaboration is as much about being engaged in criticism as it is about sharing." In other words, Sugar Labs considers the collaborative development found throughout free software projects as a model of exactly the sort of interaction that is ideal in education.

Community Building

Another area of success in the past year has been the increased participation of teachers and students in Sugar development.

"We've been really persistent about constantly going back to the teachers and saying, 'You've got to participate. You've got to give us feedback,'" Bender said. "'If you don't give us feedback, we're not going to learn, and you're not going to get the kinds of tools you need.'"

Bender acknowledged that finding the right channel for this feedback is difficult. He would personally prefer IRC, "because on IRC you've got this discussion among experts [who are] solving problems," as well as access at any time of the day. However, experience has taught him that IRC is not "a tool that teachers are going to be comfortable with, at least initially." Instead, teachers seem to prefer email, despite the fact it is not instantaneous and that communication is asynchronous.

But, regardless of the medium, teachers and students are starting to let their views be known. For instance, in Uruguay, teachers using the Turtle Art activity (Sugar's name for an application) requested a square root function they could use to teach the Pythagorean Theorem. At first, Bender told them to write the function themselves. But, eventually, he realized that such a contribution was beyond most teachers' ability. He added an extension that gave them several different ways to add to the code, including some that did not require programming expertise, and the square root function got done.

What was more important than the specific function, though, was the lesson Bender learned about coding styles in general. "I wasn't thinking how I could make things extendable by teachers when I [wrote] it," Bender said. "Now, that's at the forefront of my mind."

The last year does not seem to have produced any example of student involvement comparable to one Bender remembered from 2007, when Igbo-speaking students in Nigeria produced their own spell-check dictionary for Sugar's Abiword-based Write activity. However, Bender did mention that he was scheduled to be interviewed soon by students in Boston about Sugar, and an upcoming project to have students write Sugar documentation, so Sugar Labs is trying to engage students in discussion as well.

Still another mechanism for receiving feedback is the relatively new practice of establishing Sugar Labs wherever the interest exists. The goal, Bender said, is to "have those local centers be responsible for local support and localization. Those centers can be pretty much structured in any way that's appropriate to that region. We aren't going to impose a structure on them. So long as they share our core values, they can be Sugar Labs. That's beginning to happen now."

A Sugar Labs now exists in Colombia, and others are being organized in Washington, D.C. and Lima, Peru. "Each of them has its own set of issues they're trying to focus on, but all of them at the same time are participating in the global dialog," Bender said.

Building for a pedagogical future

Fourteen months after Sugar Labs was established, OLPC machines remain the major deployment for Sugar. However, Bender is encouraged by signs that the educational ideas behind Sugar are starting to be adopted elsewhere in free software.

Sugar has a long relationship with Fedora, the basis for the original OLPC operating system and an active participant in everything from Sugar on a Stick to the Oversight Board. But, in the last year, Sugar Labs has started to work more closely with distributions that are specifically geared towards education. For example, Bender suggests that his discussions with the developers of Skolelinux, an educational distribution based on Debian, may have helped them move their planning beyond the mechanics of installation and system maintenance or of software selection.

"I think they were only just beginning to think deeply on how Linux can impact on learning", Bender said. "I think that's why there's a lot more interest in Sugar recently, because we've been thinking about that question right from the beginning. I'm convinced that there's not just technical merit in Linux for learning; there's cultural and pedagogical merit."

Increasingly, Bender hoped, other parts of the free software community

will take over some of the technical aspects of Sugar, such as solving

their own problems with adapting Sugar to specific distributions. If that

happens, Bender said, "that means our attention can be elsewhere,

focusing on the dialog with teachers and with students, and making sure

that deployment is specific to the needs of schools is being done.

"

Concluding, Bender said, "This is important stuff. We've got to do something about giving every child an opportunity to learn and be a critical thinker. There's all these problems that the world is facing right now, and the only way that these problems are going to be solved is by putting more minds around them. Whether it's Sugar or something else, I think that the community really needs to be working hard at engaging more people in problem-solving and engaging them in learning. And I think that's the strength of the Linux community."

At a time when discussions about free and open source software tend to be centered on business, such comments may sound outdated in their idealism. But, after listening to Bender, the only conclusion one can come to is that this is why Sugar Labs was created in the first place: to provide an alternative perspective that is harder to maintain in a commercial venture like OLPC. By that criterion, Sugar Labs' first year can be counted as a solid success.

Security

Passive OS fingerprinting added to netfilter

The Linux packet filtering framework, netfilter, recently added a new capability: passive operating system fingerprinting (OSF). By observing the initial packet of a TCP/IP connection, the OSF module can often determine the operating system at the other end. Putting that capability into netfilter will allow administrators to use OS information as part of the rules they specify (for a firewall or other packet filtering application) with iptables.

Evgeniy Polyakov announced on his weblog that his implementation of OSF had been added to the netfilter tree. Some six years in the making for Linux, the feature has long been available for OpenBSD. The basic idea is that each OS sends network packets with its own characteristic values for various TCP parameters. These values, along with the order and contents of the TCP options field, are distinctive enough to identify the OS and which version of it is running (generally within a range of versions).
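The matching idea can be sketched in a few lines of C. This is a user-space illustration only, not the xt_osf code: the structure layout, the function name match_os(), and the table entries are all invented for the example, and a real matcher would also have to allow for details such as the TTL being decremented by routers along the way.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Fields of an initial SYN packet that a passive fingerprint might
 * compare. Hypothetical layout; not the structure used by xt_osf. */
struct syn_signature {
    uint16_t window;        /* advertised TCP window size */
    uint8_t ttl;            /* IP time-to-live as observed */
    bool dont_fragment;     /* IP "don't fragment" bit */
    const char *options;    /* TCP option kinds, in the order sent */
};

struct os_fingerprint {
    const char *os_name;
    struct syn_signature sig;
};

/* Made-up entries for illustration; not taken from a real fingerprint file. */
static const struct os_fingerprint example_table[] = {
    { "ExampleOS A", { 5840, 64, true, "M,S,T,N,W" } },
    { "ExampleOS B", { 65535, 128, true, "M,N,W,N,N,S" } },
};

/* Return the name of the first table entry whose parameters all match
 * the observed SYN exactly, or NULL if none does. */
static const char *match_os(const struct syn_signature *seen)
{
    size_t n = sizeof(example_table) / sizeof(example_table[0]);

    for (size_t i = 0; i < n; i++) {
        const struct syn_signature *fp = &example_table[i].sig;

        if (fp->window == seen->window &&
            fp->ttl == seen->ttl &&
            fp->dont_fragment == seen->dont_fragment &&
            strcmp(fp->options, seen->options) == 0)
            return example_table[i].os_name;
    }
    return NULL;
}
```

Note that the order of the options is part of the comparison, which is why the option list is kept as an ordered string rather than a set of flags.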

This is considered passive fingerprinting because normal network traffic is examined, so there is nothing for the other end to notice—possibly changing its behavior. Nmap and other tools can do active fingerprinting, which means they generate traffic of various kinds to get a more accurate picture of the remote system. Active fingerprinting can be detected, but either kind of fingerprinting can be fooled by a system that takes steps to obscure its fingerprint—or emulate a different OS entirely.

Currently, in order to use OSF, one must patch the kernel and build user-space tools, but that will likely change with the 2.6.31 kernel—at least for the xt_osf.ko kernel module. The user-space tools (an iptables which is OSF-aware as well as a utility to dynamically load fingerprint information) may lag, depending on the distribution. A fingerprint file is available from OpenBSD, and can be used directly by the nfnl_osf utility to load the fingerprints into the kernel.

Packet filtering based on the remote OS has a number of potential uses, from defending against a virus or denial of service attack that only comes from a particular OS to recognizing vulnerable OS installations on the network. As with most security tools, it can be used for good or ill, but it is a capability that mainline Linux has long lacked. It is nice to see that change.

New vulnerabilities

apr-util: denial of service

| Package(s): | apr-util | CVE #(s): | CVE-2009-0023 |
| Created: | June 5, 2009 | Updated: | December 4, 2009 |
| Description: | From the Debian advisory: "kcope" discovered a flaw in the handling of internal XML entities in the apr_xml_* interface that can be exploited to use all available memory. This denial of service can be triggered remotely in the Apache mod_dav and mod_dav_svn modules. (No CVE id yet) Matthew Palmer discovered an underflow flaw in the apr_strmatch_precompile function that can be exploited to cause a daemon crash. The vulnerability can be triggered (1) remotely in mod_dav_svn for Apache if the "SVNMasterURI" directive is in use, (2) remotely in mod_apreq2 for Apache or other applications using libapreq2, or (3) locally in Apache by a crafted ".htaccess" file. (CVE-2009-0023) |

apr-util: multiple vulnerabilities

| Package(s): | apr-util | CVE #(s): | CVE-2009-1955 CVE-2009-1956 |
| Created: | June 8, 2009 | Updated: | May 10, 2010 |
| Description: | From the Mandriva advisory: The expat XML parser in the apr_xml_* interface in xml/apr_xml.c in Apache APR-util before 1.3.7, as used in the mod_dav and mod_dav_svn modules in the Apache HTTP Server, allows remote attackers to cause a denial of service (memory consumption) via a crafted XML document containing a large number of nested entity references, as demonstrated by a PROPFIND request, a similar issue to CVE-2003-1564 (CVE-2009-1955). Off-by-one error in the apr_brigade_vprintf function in Apache APR-util before 1.3.5 on big-endian platforms allows remote attackers to obtain sensitive information or cause a denial of service (application crash) via crafted input (CVE-2009-1956). |

ecryptfs-utils: passphrase leak

| Package(s): | ecryptfs-utils | CVE #(s): | CVE-2009-1296 |
| Created: | June 9, 2009 | Updated: | September 16, 2009 |
| Description: | From the Ubuntu advisory: Chris Jones discovered that the eCryptfs support utilities would report the mount passphrase into installation logs when an eCryptfs home directory was selected during Ubuntu installation. The logs are only readable by the root user, but this still left the mount passphrase unencrypted on disk, potentially leading to a loss of privacy. |

file: heap-based buffer overflow

| Package(s): | file | CVE #(s): | CVE-2009-1515 |
| Created: | June 5, 2009 | Updated: | June 10, 2009 |
| Description: | From the Mandriva advisory: Heap-based buffer overflow in the cdf_read_sat function in src/cdf.c in Christos Zoulas file 5.00 allows user-assisted remote attackers to execute arbitrary code via a crafted compound document file, as demonstrated by a .msi, .doc, or .mpp file. |

gstreamer0.10-plugins-good: arbitrary code execution

| Package(s): | gstreamer0.10-plugins-good | CVE #(s): | CVE-2009-1932 |
| Created: | June 8, 2009 | Updated: | December 4, 2009 |
| Description: | From the Mandriva advisory: Multiple integer overflows in the (1) user_info_callback, (2) user_endrow_callback, and (3) gst_pngdec_task functions (ext/libpng/gstpngdec.c) in GStreamer Good Plug-ins (aka gst-plugins-good or gstreamer-plugins-good) 0.10.15 allow remote attackers to cause a denial of service and possibly execute arbitrary code via a crafted PNG file, which triggers a buffer overflow (CVE-2009-1932). |

imagemagick: integer overflow

| Package(s): | imagemagick | CVE #(s): | CVE-2009-1882 |
| Created: | June 9, 2009 | Updated: | November 19, 2013 |
| Description: | From the CVE entry: Integer overflow in the XMakeImage function in magick/xwindow.c in ImageMagick 6.5.2-8 allows remote attackers to cause a denial of service (crash) and possibly execute arbitrary code via a crafted TIFF file, which triggers a buffer overflow. NOTE: some of these details are obtained from third party information. |

kernel: denial of service

| Package(s): | kernel | CVE #(s): | CVE-2009-1961 |
| Created: | June 8, 2009 | Updated: | July 29, 2009 |
| Description: | From the National Vulnerability Database entry: The inode double locking code in fs/ocfs2/file.c in the Linux kernel 2.6.30 before 2.6.30-rc3, 2.6.27 before 2.6.27.24, 2.6.29 before 2.6.29.4, and possibly other versions down to 2.6.19 allows local users to cause a denial of service (prevention of file creation and removal) via a series of splice system calls that trigger a deadlock between the generic_file_splice_write, splice_from_pipe, and ocfs2_file_splice_write functions. |

kernel: denial of service

| Package(s): | kernel | CVE #(s): | CVE-2009-1360 |
| Created: | June 9, 2009 | Updated: | July 2, 2009 |
| Description: | From the CVE entry: The __inet6_check_established function in net/ipv6/inet6_hashtables.c in the Linux kernel before 2.6.29, when Network Namespace Support (aka NET_NS) is enabled, allows remote attackers to cause a denial of service (NULL pointer dereference and system crash) via vectors involving IPv6 packets. |

Page editor: Jake Edge

Kernel development

Brief items

Kernel release status

The 2.6.30 kernel is out, released by Linus on June 9. Some of the bigger changes in 2.6.30 include a number of filesystem improvements, the integrity measurement patches, the TOMOYO Linux security module, reliable datagram socket protocol support, object storage device support, the FS-Cache filesystem caching layer, the nilfs filesystem, threaded interrupt handler support, and much more. For more information, see the KernelNewbies 2.6.30 page, the long-format changelog, and LWN's statistics for this development cycle.

As of this writing, no changes have been merged for 2.6.31.

There have been no stable kernel updates in the last week. The 2.6.29.5 update is in the review process (along with a 2.6.27 update), though, with an expected release on or after June 11.

Kernel development news

Quotes of the week

In brief

Performance counters. Version 8 of the performance counters patch has been posted. This code has come a long way since its beginnings; among other things, there is now a user-space utility which makes the full functionality of performance counters available in a single application. See the announcement for more details on what's happening in this area.

Host protected area. The HPA patches for the IDE subsystem were mentioned here last week. Since then, Bartlomiej Zolnierkiewicz has updated the patches and posted them, under the title "IDE fixes," for merging into the 2.6.30 kernel. This posting raised some eyebrows, seeing as the 2.6.30 release was imminent at the time. A number of developers objected, noting that it was the wrong part of the release cycle for the addition of new features.

Bartlomiej stuck to his request, though, claiming that the addition of HPA support was really a bug fix. He went as far as to suggest that the real reason for opposition to merging the patches in 2.6.30 was a fear that they would allow the IDE layer to surpass the libata code in functionality. In the end, needless to say, these arguments did not prevail. The HPA code should go in for 2.6.31, but 2.6.30 was released without it.

halt_delay. A crashing kernel often prints a fair amount of useful information on its way down. That information is often key to diagnosing and fixing the problem. It also, often, is sufficiently voluminous that the most important part scrolls off the console before it can possibly be read. In the absence of a debugging console, that information is forevermore lost - annoying, to say the least.

This patch by Dave Young takes an interesting approach to this problem. A new sysfs parameter (/sys/module/printk/parameters/halt_delay) specifies a delay value in milliseconds. If the kernel is currently halting or restarting, every line printed to the console will be accompanied by the requested amount of delay. Set halt_delay high enough, and it should be possible to grab the most important information from an oops listing before it scrolls off the screen. It's a bit of a blunt tool, but sometimes blunt is what is needed to get the job done when more precise implements are not available.

r8169 ping of death. A longstanding bug in the RTL8169 NIC driver would lead to an immediate crash when receiving a packet larger than 1500 bytes in size, as reported by Michael Tokarev. It turns out that the NIC was being informed that frames up to 16K in size could be received, but the skb provided for the receive ring buffer was only 1536 bytes long. This bug goes back to somewhere before 2.6.10, thus into the mists of pre-git time. The problem was fixed by a patch from Eric Dumazet, which was one of the final patches merged before the 2.6.30 release.
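The underlying invariant is simple: the largest frame the hardware is allowed to write must fit in the buffer it is handed. The following user-space sketch states that check directly; it is not the r8169 driver code or Eric Dumazet's patch, and the structure and function names are invented for the illustration.

```c
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical description of a receive setup: what the NIC has been
 * told it may receive, and how much room each buffer really has. */
struct rx_config {
    size_t hw_max_frame;   /* largest frame the NIC may write, in bytes */
    size_t buf_size;       /* size of each receive buffer, in bytes */
};

/* The configuration is safe only if no permitted frame can overrun
 * the buffer it is written into. */
static bool rx_config_is_safe(const struct rx_config *cfg)
{
    return cfg->hw_max_frame <= cfg->buf_size;
}
```

With the numbers from the report (a 16K frame limit against 1536-byte buffers) the check fails, which is the mismatch described above.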

Runtime power management

For most general-purpose Linux deployments, power management really only comes into play at suspend and resume time. Embedded systems tend to have dedicated code which keeps the device's power usage to a minimum, but there's no real equivalent for Linux as obtained via a standard distribution installation. A new patch from Rafael Wysocki may change that situation, though, and, in the process, yield lower power usage and more reliable power management on general-purpose machines.

Rafael's patch adds infrastructure supporting a seemingly uncontroversial goal: let the kernel power down devices which are not currently being used. To that end, struct dev_pm_ops gains two new function pointers:

int (*autosuspend)(struct device *dev);

int (*autoresume)(struct device *dev);

The idea is simple: if the kernel decides that a specific device is not in use, and that it can be safely powered down, the autosuspend() function will be called. At some future point, autoresume() is called to bring the device back up to full functionality. The driver should, of course, take steps to save any necessary device state while the device is sleeping.
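How a driver and the core might use these two callbacks can be mocked up in user space. The sketch below is not kernel code: struct device and struct dev_pm_ops are cut-down stand-ins holding only what the example needs, and the helpers core_suspend_if_idle() and core_resume(), along with the saved_state and hw_state fields, are hypothetical names invented here.

```c
#include <stdbool.h>
#include <stddef.h>

struct device;

/* Stand-in holding only the two proposed callbacks. */
struct dev_pm_ops {
    int (*autosuspend)(struct device *dev);
    int (*autoresume)(struct device *dev);
};

/* Stand-in device with made-up fields for the demonstration. */
struct device {
    const struct dev_pm_ops *pm;
    bool powered;
    unsigned int hw_state;     /* pretend register contents */
    unsigned int saved_state;  /* copy kept while powered down */
};

/* Driver side: save state, then power down. */
static int demo_autosuspend(struct device *dev)
{
    dev->saved_state = dev->hw_state;
    dev->hw_state = 0;         /* state is lost when power goes away */
    dev->powered = false;
    return 0;
}

/* Driver side: power up, then restore the saved state. */
static int demo_autoresume(struct device *dev)
{
    dev->powered = true;
    dev->hw_state = dev->saved_state;
    return 0;
}

static const struct dev_pm_ops demo_pm_ops = {
    .autosuspend = demo_autosuspend,
    .autoresume = demo_autoresume,
};

/* Core side: suspend only a powered, idle device that has a callback. */
static int core_suspend_if_idle(struct device *dev, bool in_use)
{
    if (in_use || !dev->powered || !dev->pm || !dev->pm->autosuspend)
        return 0;
    return dev->pm->autosuspend(dev);
}

/* Core side: bring a suspended device back before it is used again. */
static int core_resume(struct device *dev)
{
    if (dev->powered || !dev->pm || !dev->pm->autoresume)
        return 0;
    return dev->pm->autoresume(dev);
}
```

The in_use argument is where all of the difficulty hides: the mock simply takes it as given, while deciding its value for real devices is the policy question the patch leaves open.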

The value in this infrastructure should be reasonably clear. If the kernel can decide that system operation will not suffer if a given device is powered down, it can call the relevant autosuspend() function and save some power. But there's more to it than that; a kernel which uses this infrastructure will exercise the suspend/resume functionality in specific drivers in a regular way. That, in turn, should turn up obscure suspend/resume bugs in ways which make them relatively easy to identify and, hopefully, fix. If something goes wrong when suspending or resuming a system as a whole, determining the culprit can be hard to do. If suspending a single idle device creates trouble, the source of the problem will be rather more obvious. So this infrastructure should lead to more reliable power management in general, which is a good thing.

A reading of the patch shows that one thing is missing: the code which decides when to suspend and resume specific devices. Rafael left that part as an exercise for the reader. As it turns out, different readers had rather different ideas of how this decision needs to be made.

Matthew Garrett quickly suggested that there would need to be a mechanism by which user space could set the policy to be used when deciding whether to suspend a specific device. In his view, it is simply not possible for the kernel to know, on its own, that suspending a specific device is safe. One example he gives is eSATA; an eSATA interface is not distinguishable (by the kernel) from an ordinary SATA interface, but it supports hotplugging of disks. If the kernel suspends an "unused" eSATA controller, it will fail to notice when the user attaches a new device. Another example is keyboards; it may make sense to suspend a keyboard while the screen saver is running - unless the user is running an audio player and expects the volume keys to work. Situations like this are hard for the kernel to figure out.

Ingo Molnar has taken a different approach, arguing that this kind of decision really belongs in the kernel. The kernel should, he says, suspend devices by default whenever it is safe to do so; there's no point in asking user space first.

Sane kernel defaults are important and the kernel sure knows what kind of hardware it runs on. This 'let the user decide policy' for something as fundamental (and also as arcane) as power saving mode is really a disease that has caused a lot of unnecessary pain in Linux in the past 15 years.

The discussion went back and forth without much in the way of seeming agreement. But the difference between the two positions is, perhaps, not as large as it seems. Ingo argues for automatically suspending devices where this operation is known to be safe, and he acknowledges that this may not be true for the majority of devices out there. That is not too far from Matthew's position that a variety of devices cannot be automatically suspended in a safe way. But, Ingo says, we should go ahead and apply automatic power management in the cases where we know it can be done, and we should expect that the number of devices with sophisticated (and correct) power management functionality will grow over time.

So the only real disagreement is over how the kernel can know whether a given device can be suspended or not. Ingo would like to see the kernel figure it out from the information which is already available; Matthew thinks that some sort of new policy-setting interface will be required. Answering that question may not be possible until somebody has tried to implement a fully in-kernel policy, and that may take a while. In the meantime, though, at least we will have the infrastructure which lets developers begin to play with potential solutions.

Poke-a-hole and friends

Reducing the memory footprint of a binary is important for improving performance. Poke-a-hole (pahole) and other binary object file analysis programs developed by Arnaldo Carvalho de Melo help find inefficiencies in object files, such as holes in data structures or functions that were declared inline but ended up un-inlined in the object code.

Poke-a-hole

Poke-a-hole (pahole) is an object-file analysis tool that reports the size of data structures and the holes the compiler creates when it aligns data elements to the word size of the CPU. Consider a simple data structure:

struct sample {
	char c[2];
	long l;
	int i;
	void *p;
	short s;
};

Adding the sizes of the individual elements of the structure, the expected size of the sample data structure is:

2*1 (char) + 4 (long) + 4 (int) + 4 (pointer) + 2 (short) = 16 bytes

Compiling this on a 32-bit architecture (ILP32, or Int-Long-Pointer 32 bits) reveals that the size is actually 20 bytes. The additional bytes are inserted by the compiler to align the data elements to the word size of the CPU. In this case, two bytes of padding are added after char c[2], and another two bytes are added after short s. Compiling the same program on a 64-bit machine (LP64, or Long-Pointer 64 bits) results in struct sample occupying 40 bytes. In this case, six bytes are added after char c[2], four bytes after int i, and six bytes after short s. Pahole was developed to track down such holes created by word-size alignment by the compiler. To analyze the object files, the source must be compiled with the debugging flag "-g". In the kernel, this is activated by CONFIG_DEBUG_INFO, or "Kernel Hacking > Compile the kernel with debug info".
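The same numbers can be checked without any special tools by asking the compiler directly. The following is a small sketch using sizeof and offsetof; the helper names sample_member_sum() and print_sample_layout() are made up for the example, and the values printed depend on the ABI of the machine it is built for.

```c
#include <stddef.h>
#include <stdio.h>

/* Same members as the sample structure; the first member is named c
 * here, as in the pahole listings. */
struct sample {
    char c[2];
    long l;
    int i;
    void *p;
    short s;
};

/* Sum of the member sizes alone, with no alignment padding counted. */
static size_t sample_member_sum(void)
{
    return sizeof(char[2]) + sizeof(long) + sizeof(int) +
           sizeof(void *) + sizeof(short);
}

/* Print each member's offset and size, then the totals. */
static void print_sample_layout(void)
{
    printf("c: offset %2zu size %zu\n",
           offsetof(struct sample, c), sizeof(char[2]));
    printf("l: offset %2zu size %zu\n",
           offsetof(struct sample, l), sizeof(long));
    printf("i: offset %2zu size %zu\n",
           offsetof(struct sample, i), sizeof(int));
    printf("p: offset %2zu size %zu\n",
           offsetof(struct sample, p), sizeof(void *));
    printf("s: offset %2zu size %zu\n",
           offsetof(struct sample, s), sizeof(short));
    printf("sum of members: %zu, sizeof: %zu, holes and padding: %zu\n",
           sample_member_sum(), sizeof(struct sample),
           sizeof(struct sample) - sample_member_sum());
}
```

Calling print_sample_layout() from main() gives a rough, hand-rolled version of what pahole reports, but only for a structure whose definition is at hand; pahole gets the same information from the debugging data of any object file.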

Analyzing the object file generated by the program with struct sample on an i386 machine results in:

i386$ pahole sizes.o

struct sample {

char c[2]; /* 0 2 */

/* XXX 2 bytes hole, try to pack */

long int l; /* 4 4 */

int i; /* 8 4 */

void * p; /* 12 4 */

short int s; /* 16 2 */

/* size: 20, cachelines: 1, members: 5 */

/* sum members: 16, holes: 1, sum holes: 2 */

/* padding: 2 */

/* last cacheline: 20 bytes */

};

Each data element of the structure has two numbers listed in C-style comments. The first number represents the offset of the data element from the start of the structure and the second number represents the size in bytes. At the end of the structure, pahole summarizes the details of the size and the holes in the structure.

Similarly, analyzing the object file generated by the program with struct sample on an x86_64 machine results in:

x86_64$ pahole sizes.o

struct sample {

char c[2]; /* 0 2 */

/* XXX 6 bytes hole, try to pack */

long int l; /* 8 8 */

int i; /* 16 4 */

/* XXX 4 bytes hole, try to pack */

void * p; /* 24 8 */

short int s; /* 32 2 */

/* size: 40, cachelines: 1, members: 5 */

/* sum members: 24, holes: 2, sum holes: 10 */

/* padding: 6 */

/* last cacheline: 40 bytes */

};

Notice that there is a new hole after int i, which was not present in the object compiled for the 32-bit machine. Source code developed on i386 but compiled on x86_64 may waste more space because of such alignment problems: long and pointer grew to eight bytes wide while int remained at four bytes. Neglecting to restructure data structures is a common mistake developers make when porting applications from i386 to x86_64. The result is a larger memory footprint than expected. A larger data structure leads to more cacheline reads than required and, hence, lower performance.

Pahole can suggest a more compact layout by reorganizing the data elements of a structure when given the --reorganize option. It also accepts an optional --show_reorg_steps option, which shows the steps taken to compress the data structure.

x86_64$ pahole --show_reorg_steps --reorganize -C sample sizes.o

/* Moving 'i' from after 'l' to after 'c' */

struct sample {

char c[2]; /* 0 2 */

/* XXX 2 bytes hole, try to pack */

int i; /* 4 4 */

long int l; /* 8 8 */

void * p; /* 16 8 */

short int s; /* 24 2 */

/* size: 32, cachelines: 1, members: 5 */

/* sum members: 24, holes: 1, sum holes: 2 */

/* padding: 6 */

/* last cacheline: 32 bytes */

}

/* Moving 's' from after 'p' to after 'c' */

struct sample {

char c[2]; /* 0 2 */

short int s; /* 2 2 */

int i; /* 4 4 */

long int l; /* 8 8 */

void * p; /* 16 8 */

/* size: 24, cachelines: 1, members: 5 */

/* last cacheline: 24 bytes */

}

/* Final reorganized struct: */

struct sample {

char c[2]; /* 0 2 */

short int s; /* 2 2 */

int i; /* 4 4 */

long int l; /* 8 8 */

void * p; /* 16 8 */

/* size: 24, cachelines: 1, members: 5 */

/* last cacheline: 24 bytes */

}; /* saved 16 bytes! */

The --reorganize algorithm tries to compact the structure by moving data elements from the end of the struct to fill holes. It also attempts to reduce the padding at the end of the struct. Pahole demotes bit fields to a smaller basic type when the type being used has more bits than required by the element in the bit field. For example, int flag:1 will be demoted to char.
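The effect of the suggested ordering is easy to confirm by declaring both layouts side by side. In this sketch the name sample_reorg and the helper bytes_saved() are invented for the comparison; the member order is the one from the final reorganized listing.

```c
#include <stddef.h>

/* Original member order. */
struct sample {
    char c[2];
    long l;
    int i;
    void *p;
    short s;
};

/* Member order from the final reorganized listing: the short and the
 * int fill the space that was a hole after c[2]. */
struct sample_reorg {
    char c[2];
    short s;
    int i;
    long l;
    void *p;
};

/* Bytes saved by the reordering on the machine this is compiled for. */
static size_t bytes_saved(void)
{
    return sizeof(struct sample) - sizeof(struct sample_reorg);
}
```

On an LP64 system such as x86_64, bytes_saved() should agree with the 16 bytes pahole reports; on ILP32 the saving is smaller because the original layout wastes less to begin with.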

Being over-zealous in compacting a data structure can sometimes reduce performance. Writes to data elements may flush the cachelines of other data elements being read from the same cacheline. So some structures mark members with ____cacheline_aligned in order to force them to start at the beginning of a fresh cacheline. An example of a structure which uses ____cacheline_aligned, from drivers/net/e100.c, is:

struct nic {

/* Begin: frequently used values: keep adjacent for cache

* effect */

u32 msg_enable ____cacheline_aligned;

struct net_device *netdev;

struct pci_dev *pdev;

struct rx *rxs ____cacheline_aligned;

struct rx *rx_to_use;

struct rx *rx_to_clean;

struct rfd blank_rfd;

enum ru_state ru_running;

spinlock_t cb_lock ____cacheline_aligned;

spinlock_t cmd_lock;

<output snipped>

Analyzing the nic structure with pahole shows holes just before each cacheline boundary, after the data elements that precede rxs and cb_lock.

x86_64$ pahole -C nic /space/kernels/linux-2.6/drivers/net/e100.o

struct nic {

u32 msg_enable; /* 0 4 */

/* XXX 4 bytes hole, try to pack */

struct net_device * netdev; /* 8 8 */

struct pci_dev * pdev; /* 16 8 */

/* XXX 40 bytes hole, try to pack */

/* --- cacheline 1 boundary (64 bytes) --- */

struct rx * rxs; /* 64 8 */

struct rx * rx_to_use; /* 72 8 */

struct rx * rx_to_clean; /* 80 8 */

struct rfd blank_rfd; /* 88 16 */

enum ru_state ru_running; /* 104 4 */

/* XXX 20 bytes hole, try to pack */

/* --- cacheline 2 boundary (128 bytes) --- */

spinlock_t cb_lock; /* 128 4 */

spinlock_t cmd_lock; /* 132 4 */

<output snipped>
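The same kind of deliberate hole can be reproduced in user space with the C11 alignas specifier. This is only an approximation of what ____cacheline_aligned does in the kernel: the 64-byte CACHELINE constant is an assumption, the structure nic_like is a cut-down imitation with plain pointer and int members, and hole_before_rxs() is a helper invented for the example.

```c
#include <stdalign.h>
#include <stddef.h>
#include <stdint.h>

#define CACHELINE 64   /* assumed cacheline size in bytes */

/* Imitation of the start of struct nic: three groups of members, each
 * group forced to begin on its own cacheline. */
struct nic_like {
    alignas(CACHELINE) uint32_t msg_enable;
    void *netdev;
    void *pdev;

    alignas(CACHELINE) void *rxs;
    void *rx_to_use;
    void *rx_to_clean;

    alignas(CACHELINE) int cb_lock;
    int cmd_lock;
};

/* Size of the hole between the end of pdev and the start of rxs. */
static size_t hole_before_rxs(void)
{
    return offsetof(struct nic_like, rxs) -
           (offsetof(struct nic_like, pdev) + sizeof(void *));
}
```

On x86_64, hole_before_rxs() should come out to the same 40 bytes that pahole flags before the first cacheline boundary. Here the hole is intentional, so pahole's "try to pack" hint is one to ignore.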

Besides finding holes, pahole can be used to find the data field sitting at a particular offset from the start of the data structure. Pahole can also list the sizes of all the data structures:

x86_64$ pahole --sizes linux-2.6/vmlinux | sort -k3 -nr | head -5

tty_struct 1328 10

vc_data 432 9

request_queue 2272 8

net_device 1536 8

mddev_s 792 8

The first field represents data structure name, the second represents the current size of the data structure and the final field represents the number of holes present in the structure.

Similarly, to get a summary of the data structures that could be packed to reduce their size:

x86_64$ pahole --packable sizes.o

sample 40 24 16

The first field represents the data structure, the second represents the current size, the third represents the packed size and the fourth field represents the total number of bytes saved by packing the holes.

Pfunct

The pfunct tool shows function-related aspects of the object code. It is capable of showing the number of goto labels used, the number of parameters to the functions, the size of the functions, etc. Its most popular use, however, is finding functions declared inline but not inlined, or functions not declared inline which were eventually inlined. The compiler tends to optimize functions by inlining or uninlining them depending on their size.

x86_64$ pfunct --cc_inlined linux-2.6/vmlinux | tail -5

run_init_process

do_initcalls

zap_identity_mappings

clear_bss

copy_bootdata

The compiler may also choose to uninline functions which have been specifically declared inline. This may be caused by multiple reasons, such as recursive functions for which inlining will cause infinite regress. pfunct --cc_uninlined shows functions which are declared inline but have been uninlined by the compiler. Such functions are good candidates for a second look, or for removing the inline declaration altogether. Fortunately, pfunct --cc_uninlined on vmlinux (only) did not list any functions.
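A recursive function makes a small, concrete example of an inline declaration that the compiler cannot fully honor. The function below is invented for illustration and is not from the kernel; whether a given compiler inlines a few levels, turns the recursion into a loop, or emits an ordinary out-of-line function depends on the compiler and its optimization settings.

```c
/* Declared inline, but the body calls itself: expanding every call in
 * place would never terminate, so the compiler has to stop somewhere
 * and typically keeps an out-of-line copy of the function. */
static inline unsigned long factorial(unsigned int n)
{
    return n <= 1 ? 1UL : n * factorial(n - 1);
}
```

A function like this, compiled with debugging information, is the sort of thing one would expect pfunct --cc_uninlined to be able to point out.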

Debug Info

The utilities rely on the debug_info section of the object file, which is generated when the source code is compiled with the debug option. They understand the DWARF standard and the Compact C-Type Format (CTF), which are common debugging file formats used by most compilers. Gcc uses the DWARF format.

The debugging data is organized under the debug_info section of ELF (Executable and Linkable Format) files, in the form of tags with values, representing things such as variables and function parameters, arranged in a hierarchical, nested format. To read the raw information, you may use readelf provided by binutils, or eu-readelf provided by elfutils. Most distributions do not compile their packages with debugging information because it tends to make the binaries pretty big. Instead, they ship this information in separate debuginfo packages, which can be analyzed with these tools or gdb.

The utilities discussed in this article were initially developed to analyze kernel object files. However, they are not limited to kernel object files and can be used with any user-space program built with debug information. The source code of the pahole utilities is maintained at git://git.kernel.org/pub/scm/linux/kernel/git/acme/pahole.git. More information about pahole and other utilities to analyze debug object files can be found in the PDF about 7 dwarves.

Linux kernel design patterns - part 1

One of the topics of ongoing interest in the kernel community is that of maintaining quality. It is trivially obvious that we need to maintain and even improve quality. It is less obvious how best to do so. One broad approach that has found some real success is to increase the visibility of various aspects of the kernel. This makes the quality of those aspects more apparent, so this tends to lead to an improvement of the quality.

This increase in visibility takes many forms:

- The checkpatch.pl script highlights many deviations from accepted code formatting style. This encourages people (who remember to use the script) to fix those style errors. So, by increasing the visibility of the style guide, we increase the uniformity of appearance and so, to some extent, the quality.

- The "lockdep" system (when enabled) dynamically measures dependencies between locks (and related states such as whether interrupts are enabled). It then reports anything that looks odd. These oddities will not always mean a deadlock or similar problem is possible, but in many cases they do, and the deadlock possibility can be removed. So by increasing the visibility of the lock dependency graph, quality can be increased.

- The kernel contains various other enhancements to visibility such as the "poisoning" of unused areas of memory so invalid access will be more apparent, or simply the use of symbolic names rather than plain hexadecimal addresses in stack traces so that bug reports are more useful.

- At a much higher level, the "git" revision tracking software that is used for tracking kernel changes makes it quite easy to see who did what and when. The fact that it encourages a comment to be attached to each patch makes it that much easier to answer the question "Why is the code this way". This visibility can improve understanding and that is likely to improve quality as more developers are better informed.

There are plenty of other areas where increasing visibility does, or could, improve quality. In this series we will explore one particular area where your author feels visibility could be increased in a way that could well lead to qualitative improvements. That area is the enunciation of kernel-specific design patterns.

Design Patterns

A "design pattern" is a concept that was first expounded in the field of Architecture and was brought to computer engineering, and particularly the Object Oriented Programming field, through the 1994 publication Design Patterns: Elements of Reusable Object-Oriented Software. Wikipedia has further useful background information on the topic.

In brief, a design pattern describes a particular class of design problem, and details an approach to solving that class of problem that has proven effective in the past. One particular benefit of a design pattern is that it combines the problem description and the solution description together and gives them a name. Having a simple and memorable name for a pattern is particularly valuable. If both developer and reviewer know the same names for the same patterns, then a significant design decision can be communicated in one or two words, thus making the decision much more visible.

In the Linux kernel code base there are many design patterns that have been found to be effective. However most of them have never been documented so they are not easily available to other developers. It is my hope that by explicitly documenting these patterns, I can help them to be more widely used and, thus, developers will be able to achieve effective solutions to common problems more quickly.

In the remainder of this series we will be looking at three problem domains and finding a variety of design patterns of greatly varying scope and significance. Our goal in doing so is not only to enunciate these patterns, but also to show the range and value of such patterns so that others might make the effort to enunciate patterns that they have seen.

A number of examples from the Linux kernel will be presented throughout this series as examples are an important part of illuminating any pattern. Unless otherwise stated they are all from 2.6.30-rc4.

Reference Counts

The idea of using a reference counter to manage the lifetime of an object is fairly common. The core idea is to have a counter which is incremented whenever a new reference is taken and decremented when a reference is released. When this counter reaches zero any resources used by the object (such as the memory used to store it) can be freed.

The mechanisms for managing reference counts seem quite straightforward. However there are some subtleties that make it quite easy to get the mechanisms wrong. Partly for this reason, the Linux kernel has (since 2004) a data type known as "kref" with associated support routines (see Documentation/kref.txt, <linux/kref.h>, and lib/kref.c). These encapsulate some of those subtleties and, in particular, make it clear that a given counter is being used as a reference counter in a particular way. As noted above, names for design patterns are very valuable and just providing that name for kernel developers to use is a significant benefit for reviewers.

In the words of Andrew Morton:

This inclusion of kref in the Linux kernel gives both a tick and a cross to the kernel in terms of explicit support for design patterns. A tick is deserved as the kref clearly embodies an important design pattern, is well documented, and is clearly visible in the code when used. It deserves a cross however because the kref only encapsulates part of the story about reference counting. There are some usages of reference counting that do not fit well into the kref model as we will see shortly. Having a "blessed" mechanism for reference counting that does not provide the required functionality can actually encourage mistakes as people might use a kref where it doesn't belong and so think it should just work where in fact it doesn't.

A useful step to understanding the complexities of reference counting is to understand that there are often two distinctly different sorts of references to an object. In truth there can be three or even more, but that is very uncommon and can usually be understood by generalizing the case of two. We will call the two types of references "external" and "internal", though in some cases "strong" and "weak" might be more appropriate.

An "external" reference is the sort of reference we are probably most accustomed to thinking about. External references are counted with "get" and "put" and can be held by subsystems quite distant from the subsystem that manages the object. The existence of a counted external reference has a strong and simple meaning: This object is in use.

By contrast, an "internal" reference is often not counted, and is only held internally to the system that manages the object (or some close relative). Different internal references can have very different meanings and hence very different implications for implementation.

Possibly the most common example of an internal reference is a cache which provides a "lookup by name" service. If you know the name of an object, you can apply to the cache to get an external reference, providing the object actually exists in the cache. Such a cache would hold each object on a list, or on one of a number of lists under e.g. a hash table. The presence of the object on such a list is a reference to the object. However it is likely not a counted reference. It does not mean "this object is in use" but only "this object is hanging around in case someone wants it". Objects are not removed from the list until all external references have been dropped, and possibly they won't be removed immediately even then. Clearly the existence and nature of internal references can have significant implications on how reference counting is implemented.

One useful way to classify different reference counting styles is by the required implementation of the "put" operation. The "get" operation is always the same. It takes an external reference and produces another external reference. It is implemented by something like:

    assert(obj->refcount > 0);
    increment(obj->refcount);

or, in Linux-kernel C:

    BUG_ON(!atomic_read(&obj->refcnt));
    atomic_inc(&obj->refcnt);

Note that "get" cannot be used on an unreferenced object. Something else is needed there.
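For readers who want to experiment outside the kernel, the "get" rule can be sketched in portable C11. This is only a user-space illustration under stated assumptions: struct obj, obj_init() and obj_get() are hypothetical names, and <stdatomic.h> stands in for the kernel's atomic_t.

```c
#include <assert.h>
#include <stdatomic.h>

/* Hypothetical object type; the names here are illustrative only. */
struct obj {
    atomic_int refcnt;          /* number of counted (external) references */
};

/* A new object starts with one reference, held by its creator. */
static void obj_init(struct obj *o)
{
    atomic_init(&o->refcnt, 1);
}

/*
 * "get": turn one existing external reference into two.
 * The caller must already hold a reference, so a count of
 * zero here indicates a bug in the caller.
 */
static struct obj *obj_get(struct obj *o)
{
    assert(atomic_load(&o->refcnt) > 0);
    atomic_fetch_add(&o->refcnt, 1);
    return o;
}
```

Returning the pointer is a convenience so that a call can be written as other = obj_get(o); it is a choice made for this sketch rather than something the text above requires.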

The "put" operation comes in three variations. While there can be some overlap in use cases, it is good to keep them separate to help with clarity of the code. The three options, in Linux-C, are:

    1   atomic_dec(&obj->refcnt);

    2   if (atomic_dec_and_test(&obj->refcnt)) { ... do stuff ... }

    3   if (atomic_dec_and_lock(&obj->refcnt, &subsystem_lock)) {
                ..... do stuff ....
                spin_unlock(&subsystem_lock);
        }
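The differences between the three variations are easier to see when they sit side by side. The following is a user-space sketch in C11, not kernel code: struct toy_lock is a toy spinlock standing in for spinlock_t, and put_plain(), put_and_test() and put_and_lock() are hypothetical stand-ins for atomic_dec(), atomic_dec_and_test() and atomic_dec_and_lock() respectively.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdbool.h>

/* Toy spinlock standing in for the kernel's spinlock_t (assumption). */
struct toy_lock { atomic_flag held; };

static void toy_lock_init(struct toy_lock *l)
{
    atomic_flag_clear(&l->held);
}

static void toy_lock_acquire(struct toy_lock *l)
{
    while (atomic_flag_test_and_set_explicit(&l->held, memory_order_acquire))
        ;                       /* spin until the flag was clear */
}

static void toy_lock_release(struct toy_lock *l)
{
    atomic_flag_clear_explicit(&l->held, memory_order_release);
}

/* Option 1: just drop the count; nothing special happens at zero. */
static void put_plain(atomic_int *cnt)
{
    atomic_fetch_sub(cnt, 1);
}

/* Option 2: returns true if this call dropped the last reference. */
static bool put_and_test(atomic_int *cnt)
{
    int old = atomic_fetch_sub(cnt, 1);

    assert(old > 0);
    return old == 1;
}

/* Option 3: returns true, with the lock held, only if the count hit zero. */
static bool put_and_lock(atomic_int *cnt, struct toy_lock *lock)
{
    int old = atomic_load(cnt);

    /* Fast path: no lock needed while the count stays above zero. */
    while (old > 1)
        if (atomic_compare_exchange_weak(cnt, &old, old - 1))
            return false;

    /* Slow path: this may be the last reference, so decide under the lock. */
    toy_lock_acquire(lock);
    if (atomic_fetch_sub(cnt, 1) == 1)
        return true;            /* caller must release the lock */
    toy_lock_release(lock);
    return false;
}
```

The fast path in put_and_lock() is what makes the third option attractive: the lock is only touched when the count might actually reach zero.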

The "kref" style

Starting in the middle, option "2" is the style used for a kref. This style is appropriate when the object does not outlive its last external reference. When that reference count becomes zero, the object needs to be freed or otherwise dealt with, hence the need to test for the zero condition with atomic_dec_and_test().
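As a rough illustration of this style, here is a user-space sketch shaped like the init/get/put trio that kref offers. It is not the kernel's implementation: struct ref, ref_init(), ref_get(), ref_put(), struct widget and widgets_freed are all hypothetical names invented for the example.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stddef.h>
#include <stdlib.h>

/* Hypothetical counter type, embedded in the object it protects. */
struct ref { atomic_int count; };

static void ref_init(struct ref *r)
{
    atomic_init(&r->count, 1);  /* the creator holds the first reference */
}

static void ref_get(struct ref *r)
{
    int old = atomic_fetch_add(&r->count, 1);

    assert(old > 0);            /* "get" needs an existing reference */
    (void)old;
}

/* Drop a reference; on the last one call release() and return 1. */
static int ref_put(struct ref *r, void (*release)(struct ref *))
{
    if (atomic_fetch_sub(&r->count, 1) == 1) {
        release(r);
        return 1;
    }
    return 0;
}

/* An example object that embeds the counter. */
struct widget {
    int id;
    struct ref ref;
};

static int widgets_freed;       /* bookkeeping for the demonstration only */

static void widget_release(struct ref *r)
{
    /* Recover the containing widget from the embedded counter. */
    struct widget *w =
        (struct widget *)((char *)r - offsetof(struct widget, ref));

    free(w);
    widgets_freed++;
}
```

Passing the release function to every put, rather than storing it in the object, means that the place where the object can die is visible at each call site.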

Objects that fit this style often do not have any internal references to worry about, as is the case with most objects in sysfs, which is a heavy user of kref. If, instead, an object using the kref style does have internal references, it cannot be allowed to create an external reference from an internal reference unless there are known to be other external references. If this is necessary, a primitive is available:

atomic_inc_not_zero(&obj->refcnt);

which increments a value provided it isn't zero, and returns a result indicating success or otherwise. atomic_inc_not_zero() is a relatively recent invention in the Linux kernel, appearing in late 2005 as part of the lockless page cache work. For this reason it isn't widely used and some code that could benefit from it uses spinlocks instead. Sadly, the kref package does not make use of this primitive either.

An interesting example of this style of reference that does not use kref, and does not even use atomic_dec_and_test() (though it could and arguably should), is the pair of reference counts in struct super_block: s_count and s_active.

s_active fits the kref style of reference counts exactly. A superblock starts life with s_active being 1 (set in alloc_super()), and, when s_active becomes zero, further external references cannot be taken. This rule is encoded in grab_super(), though this is not immediately clear. The current code (for historical reasons) adds a very large value (S_BIAS) to s_count whenever s_active is non-zero, and grab_super() tests for s_count exceeding S_BIAS rather than for s_active being zero. It could just as easily do the latter test using atomic_inc_not_zero(), and avoid the use of spinlocks.

s_count provides for a different sort of reference which has both "internal" and "external" aspects. It is internal in that its semantic is much weaker than that of s_active-counted references. References counted by s_count just mean "this superblock cannot be freed just now" without asserting that it is actually active. It is external in that it is much like a kref starting life at 1 (well, 1*S_BIAS actually), and when it becomes zero (in __put_super()) the superblock is destroyed.

So these two reference counts could be replaced by two krefs, provided that:

- S_BIAS was set to 1

- grab_super() used atomic_inc_not_zero() rather than testing against S_BIAS

and a number of spinlock calls could go away. The details are left as an exercise for the reader.

The "kcref" style

The Linux kernel doesn't have a "kcref" object, but that is a name that seems suitable to propose for the next style of reference count. The "c" stands for "cached" as this style is very often used in caches. So it is a Kernel Cached REFerence.

A kcref uses atomic_dec_and_lock() as given in option 3 above. It does this because, on the last put, the object needs either to be freed or to be checked to see if any other special handling is needed. This needs to be done under a lock to ensure no new reference is taken while the current state is being evaluated.

A simple example here is the i_count reference counter in struct inode. The important part of iput() reads:

    if (atomic_dec_and_lock(&inode->i_count, &inode_lock))
            iput_final(inode);

where iput_final() examines the state of the inode and decides if it can be destroyed, or left in the cache in case it could get reused soon.

Among other things, the inode_lock prevents new external references being created from the internal references of the inode hash table. For this reason converting internal references to external references is only permitted while the inode_lock is held. It is no accident that the function supporting this is called iget_locked() (or iget5_locked()).
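The interplay between the lookup and the final put can be sketched as a tiny user-space cache. Everything here is an assumption made for illustration: struct item, struct cache, cache_add(), cache_get() and cache_put() are hypothetical, the "keep" flag stands in for whatever policy a real iput_final() applies, and a toy spinlock replaces inode_lock.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdbool.h>
#include <stdlib.h>
#include <string.h>

/* Toy spinlock standing in for the subsystem lock (assumption). */
struct toy_lock { atomic_flag held; };

static void lock_acquire(struct toy_lock *l)
{
    while (atomic_flag_test_and_set(&l->held))
        ;                       /* spin */
}

static void lock_release(struct toy_lock *l)
{
    atomic_flag_clear(&l->held);
}

struct item {
    struct item *next;          /* list linkage: the uncounted internal reference */
    atomic_int refcnt;          /* counts external references only */
    bool keep;                  /* hypothetical policy: stay cached when unused */
    char name[16];
};

struct cache {
    struct toy_lock lock;
    struct item *head;
};

static void cache_init(struct cache *c)
{
    atomic_flag_clear(&c->lock.held);
    c->head = NULL;
}

/* Create an item, link it into the cache, return one external reference. */
static struct item *cache_add(struct cache *c, const char *name, bool keep)
{
    struct item *it = calloc(1, sizeof(*it));

    if (!it)
        return NULL;
    atomic_init(&it->refcnt, 1);
    it->keep = keep;
    strncpy(it->name, name, sizeof(it->name) - 1);
    lock_acquire(&c->lock);
    it->next = c->head;
    c->head = it;
    lock_release(&c->lock);
    return it;
}

/* Lookup by name.  The count may be zero; that is safe only under the lock. */
static struct item *cache_get(struct cache *c, const char *name)
{
    struct item *it;

    lock_acquire(&c->lock);
    for (it = c->head; it; it = it->next)
        if (strcmp(it->name, name) == 0) {
            atomic_fetch_add(&it->refcnt, 1);
            break;
        }
    lock_release(&c->lock);
    return it;
}

/* On the final put, decide under the lock whether to keep or free. */
static void cache_put(struct cache *c, struct item *it)
{
    int old = atomic_load(&it->refcnt);
    struct item **pp;

    while (old > 1)             /* fast path: not the last reference */
        if (atomic_compare_exchange_weak(&it->refcnt, &old, old - 1))
            return;
    lock_acquire(&c->lock);
    if (atomic_fetch_sub(&it->refcnt, 1) == 1 && !it->keep) {
        for (pp = &c->head; *pp != it; pp = &(*pp)->next)
            ;                   /* find the link that points at 'it' */
        *pp = it->next;
        free(it);
    }
    lock_release(&c->lock);
}
```

Because cache_get() and the slow path of cache_put() both run under the same lock, a lookup can never hand out a reference to an item that the final put is in the middle of freeing.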

A slightly more complex example is in struct dentry, where d_count is managed like a kcref. It is more complex because two locks need to be taken before we can be sure no new reference can be taken - both dcache_lock and de->d_lock. This requires that either we hold one lock, then atomic_dec_and_lock() the other (as in prune_one_dentry()), or that we atomic_dec_and_lock() the first, then claim the second and retest the refcount - as in dput(). This is a good example of the fact that you can never assume you have encapsulated all possible reference counting styles. Needing two locks could hardly be foreseen.

An even more complex kcref-style refcount is mnt_count in struct vfsmount. The complexity here is the interplay of the two refcounts that this structure has: mnt_count, which is a fairly straightforward count of external references, and mnt_pinned, which counts internal references from the process accounting module. In particular it counts the number of accounting files that are open on the filesystem (and as such could use a more meaningful name). The complexity comes from the fact that when there are only internal references remaining, they are all converted to external references. Exploring the details of this is again left as an exercise for the interested reader.

The "plain" style

The final style for refcounting involves just decrementing the reference count (atomic_dec()) and not doing anything else. This style is relatively uncommon in the kernel, and for good reason. Leaving unreferenced objects just lying around isn't a good idea.

One use of this style is in struct buffer_head, managed by fs/buffer.c and <linux/buffer_head.h>. The put_bh() function is simply:

    static inline void put_bh(struct buffer_head *bh)
    {
            smp_mb__before_atomic_dec();
            atomic_dec(&bh->b_count);
    }

This is OK because buffer_heads have lifetime rules that are closely tied to a page. One or more buffer_heads get allocated to a page to chop it up into smaller pieces (buffers). They tend to remain there until the page is freed at which point all the buffer_heads will be purged (by drop_buffers() called from try_to_free_buffers()).

In general, the "plain" style is suitable if it is known that there will always be an internal reference so that the object doesn't get lost, and if there is some process whereby this internal reference will eventually get used to find and free the object.
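A small user-space sketch may make that condition concrete. It is loosely modelled on the buffer/page relationship described above but is not kernel code: struct buf, struct owner, owner_attach(), buf_get(), buf_put() and owner_drop() are hypothetical names, and the sketch is single-threaded, so it omits the locking a real implementation would need to stop a new "get" racing with the reclaim step.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdbool.h>
#include <stdlib.h>

struct buf { atomic_int count; };   /* count of current users */

/* The owner's array pointer is the ever-present internal reference. */
struct owner {
    struct buf *bufs;
    int nr;
};

/* Attach 'nr' unused buffers to the owner. */
static bool owner_attach(struct owner *o, int nr)
{
    o->bufs = calloc(nr, sizeof(*o->bufs));
    if (!o->bufs)
        return false;
    for (int i = 0; i < nr; i++)
        atomic_init(&o->bufs[i].count, 0);
    o->nr = nr;
    return true;
}

/* Users reach a buffer through its owner, so a zero count is legal here. */
static void buf_get(struct buf *b)
{
    atomic_fetch_add(&b->count, 1);
}

/* The "plain" put: decrement and do nothing else. */
static void buf_put(struct buf *b)
{
    atomic_fetch_sub(&b->count, 1);
}

/* Reclaim step: free every buffer, but only if none is still in use. */
static bool owner_drop(struct owner *o)
{
    for (int i = 0; i < o->nr; i++)
        if (atomic_load(&o->bufs[i].count) > 0)
            return false;
    free(o->bufs);
    o->bufs = NULL;
    o->nr = 0;
    return true;
}
```

The put never frees anything; the object is only ever found and freed through the owner, which is exactly the property that makes the plain style safe.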

Anti-patterns