LWN.net Weekly Edition for November 15, 2007

Google's phone initiative: Android

![[ Android Logo ]](https://static.lwn.net/images/android/android_logo.png)

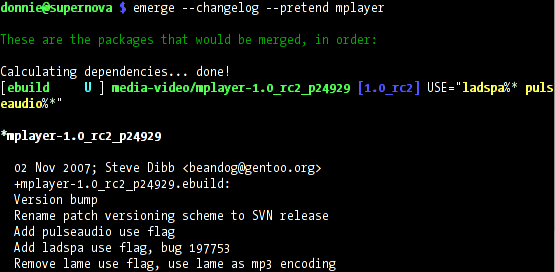

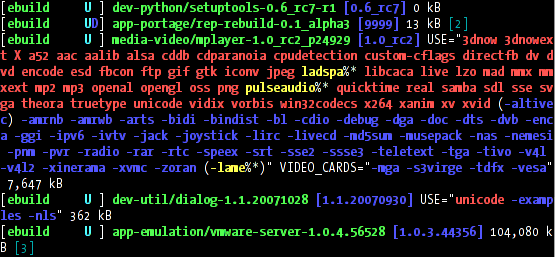

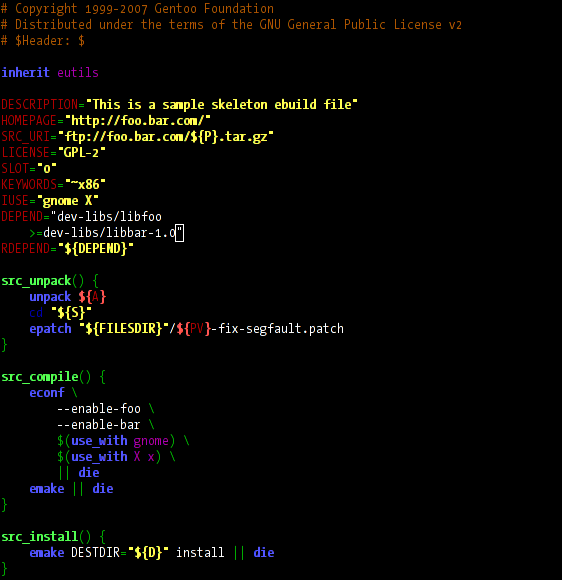

The media frenzy over Google's Android announcement has subsided, a bit, with actual details of the platform and strategy starting to emerge. Android is the long-rumored gPhone in a very different guise; instead of creating a phone, Google has created the Open Handset Alliance (OHA) – bringing together more than 30 different hardware and software companies – to develop a platform for mobile phones. That platform is Android, a Linux-based, Java-programmed framework for developing mobile applications. With this week's release of the Software Development Kit (SDK), we can now see some sample code as well as seeing how Google intends to attract developers to the platform.

Google has a stated intent to release "most of the components" of Android under the Apache v2 license, but the "early look" SDK has a much more stringent license. The license terms appear to give the OHA and Google some wiggle room regarding pieces of the software that they may not be willing or able to release under the Apache license, but a strict reading will worry free software folks. Some of the components of Android, Linux in particular, are released under other licenses, so the most charitable interpretation is that "most" is referring to those non-Apache-licensed components, but it will be a while before we know.

The SDK comes with lots of sample code for applications, but none for the tools that come with it; that will presumably come later. Probably the most interesting piece of Android is the Dalvik virtual machine (VM), which handles running the Java applications, but is not a Java virtual machine (JVM). Instead, Dalvik takes the .class and .jar files produced by the Java compiler and turns them into Dalvik executable (.dex) files.

![[ Android Emulator ]](https://static.lwn.net/images/android/android3_sm.png)

Dalvik is a register-based VM – unlike Java's stack-based implementation – that was created with a focus on minimizing the memory footprint. There may be optimizations possible with Dalvik's model that are not possible for JVM bytecode, but there may also be another motive for Android having its own VM: it is not subject to the Sun-controlled Java Community Process. Google is trying to leverage developer knowledge and understanding of the Java language and class libraries, without, necessarily, rigorously following the Java "standard".

Android uses the Apache Harmony class libraries to provide compatibility with Java Standard Edition (Java SE). There is an ongoing dispute between the Apache foundation and Sun regarding certification of the Harmony code as Java SE compatible, but that appears not to be much of a concern for Google and the OHA. It will be interesting to see if Sun's recent patent beliefs extend to implementations of Java SE that might infringe their patents.

Java Micro Edition (Java ME) runs on a large number of mobile phones today, but has been fragmented by the various phone vendors, breaking the (mostly illusory) "write once, run anywhere" promise that Java popularized. Though an Apache license won't prohibit that kind of fragmentation, the OHA requires its members to agree not to fragment the Android platform. That agreement has not been made public and likely lacks any teeth to punish violators, but it does serve as a statement of intent. It is clear that Google and the other OHA members have learned from the Java ME implementations in current phones and have something fairly different in mind.

The key to the success of the Android platform will be the applications that get built for it. If Android phones have enough unique and interesting tools, customers will seek them out – at least in theory. The early release of the SDK, long before real hardware is available, is part of the strategy to attract developers. Google has also put up $10 million for bounties as part of the Android Developer Challenge. Developers can enter their applications until March 3, with the best 50 receiving $25,000 each. Those winners will then be eligible for even larger, six-figure, awards after handsets are available in the second half of 2008.

![[ Android Emulator ]](https://static.lwn.net/images/android/android1_sm.png)

Money is certain to motivate some to write Android applications, but an easy-to-program interface based on an open platform will be enough for others. Android is definitely being touted as being easy to develop for, with one of the introductory videos showing a few sample programs that were claimed to be completed in a day. The SDK comes with an emulator that allows developing and debugging without access to phone hardware. Screenshots of different emulator "skins", which represent different hypothetical handset models, accompany this article.

The project community is, unsurprisingly, hosted at code.google.com. The site is already active, just a few days after the release, with an impressive amount of documentation available. The discussion group has quite a bit of traffic with questions, bug reports, and ideas for additional functionality. One recent poster had ported busybox to Android, making all of the normal UNIX command-line tools available in the emulator.

Android is a bold move that strikes at the heart of some entrenched interests, most notably Sun, but Apple (perhaps only recently entrenched) as well. Other mobile phone OS vendors, Symbian and Microsoft for example, could also be hurt by widespread Android adoption. There is the ever-present threat of patent litigation getting in the way, but the backing of Google and the other OHA members should help to blunt that kind of attack. The real question is whether Android offers something compelling to both developers and users. The handset makers and cellular carriers will also need to do more than just join an alliance; phones will need to be created and sold.

Unfortunately, the real losers in this could be OpenMoko and Qtopia. Both are free software platforms for mobile phones, but neither has been able to generate the publicity that Google has. At a fundamental level, Android takes a different approach, requiring all applications to run atop Dalvik, whereas OpenMoko and Qtopia both allow for native code, compiled from C or C++. Each allows the possibility of porting applications from other platforms, but it is likely that there are far more mobile phone Java applications than the others, which might give Android a leg up. It is certainly possible to run a Java VM (or even a Dalvik VM) on OpenMoko or Qtopia, which might open those platforms up to more applications, but the Android initiative has the appearance of a juggernaut – at least in the early going.

Java may offer some security advantages as well. Native code cannot be sandboxed easily so it will be easier to write malware for platforms that support it. The mobile phone malware "industry" is still in its infancy, but we can expect to see more of it as more sophisticated phones get into the hands of more security-unconscious users.

While iPhone users still wait for the ability to build applications for their phone, free software users have multiple choices of development platforms. The Neo 1973 hardware from OpenMoko is getting closer to availability for end users, with at least two software stacks available. It certainly wouldn't be too surprising to see Android ported to the Neo as well, though no official word has been heard as of yet. It is an exciting time for free software and for mobile phone users as we have just begun to see what the community can do in this space. With luck, there will be room for multiple free mobile platforms, avoiding a monoculture, as well.

Getting the (Share)Point About Document Formats

The OpenDocument Foundation was formed in 2005, with the mission "to provide a conduit for funding and support for individual contributors to participate in ODF development" at the standards body OASIS. So, at a time when backing for the ODF format seems to be gaining in strength around the world, eyebrows were naturally raised when Sam Hiser, the Foundation's Vice President and Director of Business Affairs, wrote on October 16 that it was no longer supporting ODF:

Microsoft's Jason Matusow naturally allowed himself a little Schadenfreude, Mary Jo Foley waxed apocalyptic, speculating that "the ODF camp might unravel before Microsoft's rival Office Open XML (OOXML) comes up for final international vote early next year," and IBM's Rob Weir provided a characteristically witty point-by-point criticism of the group's reasoning behind its move, dubbing the OpenDocument Foundation "two guys without a garage", in a nod to the "mythology of Silicon Valley" and its history of "two guys in a garage founding great enterprises."

Meanwhile, in an attempt to understand what was going on, standards expert Andy Updegrove tried applying Occam's Razor:

That seems a little hard to believe, though, given the years of hard work put in by Foundation members in support of ODF. For example, Hiser notes that he worked on the OpenOffice.org project "from 2001 through its 20-millionth download. I was OpenOffice.org's Marketing Project Lead...back when people said 'Open What?'" Moreover, if the Foundation had really wanted to wield a sharp stick, it could have done so far more effectively by announcing its break earlier. As Hiser points out: "I was supportive of ODF into the summer in order to avoid negative attention for ODF leading up to the September ISO vote on OOXML."

The roots of the Foundation's decision to abandon the ODF format in favour of the little-known Compound Document Formats (CDF) from the W3C go back to one of the most fraught and painful episodes in the history of open source and open standards: the attempt by the Commonwealth of Massachusetts to adopt the ODF format. Hiser was in the thick of it:

Sun's Chief Open Source Officer, Simon Phipps, begs to differ:

The main bone of contention between the OpenDocument Foundation and the rest of the community supporting ODF comes down to the issue of whether "100%", full-fidelity interoperability with Microsoft Office is achievable or even desirable. For example, Weir wrote:

The Foundation's position was explained by its President, Gary Edwards:

If however we could achieve full fidelity conversions of legacy Microsoft binary documents to ODF, and were able to guarantee the roundtrip process of these newly christened ODF documents in a mixed desktop environment, one comprised of ODF-enabled Microsoft Office, OpenOffice.org, Novell Office, WorkPlace, and KOffice, the existing MSOffice bound business processes could continue being used even as new ODF ready workstations were added to the workflow. Massachusetts could migrate to non-Microsoft Office software in manageable phases, restoring competition -- and sanity -- to the Commonwealth's software procurement program.

If on the other hand, there is no full fidelity conversion to ODF of legacy documents available at the head point of the migration -- Microsoft Office -- then the business process will break under the weight of users having to stop everything to fix and repair the artifacts of lossy file conversions. What Massachusetts discovered is that users will immediately revert to a Microsoft-only process wherever the business process system breaks down due to conversion fidelity problems. It is a productivity killer and a show stopper for migration to ODF-supporting software.

This line of thinking probably explains the widespread incomprehension that greeted the Foundation's decision to abandon ODF. Supporters of the latter believe that it is by far the best document format, one that provides numerous benefits to users, notably freedom from lock-in. Hiser couldn't agree more: "We don't want OOXML to ever see the light of day, and certainly we feel deeply that it needs to be rejected by ISO finally and conclusively." But he adds:

In companies running the Microsoft enterprise stack, those "sticky business processes" are defined and stored in a program that has a surprisingly low profile, but which may well turn out to be the biggest emerging threat to open source: Sharepoint. One perceptive observer, Alfresco's Matt Asay, had already spotted that threat 18 months ago, and is just as worried today:

Even worse, this same lock-in applies to any ODF documents the user might have. As Asay explains:

In other words, the lock-in occurs not at the document level - the one the ODF community is most focused on - but at the level of the workflow. If companies can't export their documents with ease and with perfect fidelity, the Foundation believes, they will simply opt for the default Microsoft solution - Sharepoint - and become trapped by workflow lock-in. This is why Hiser and his colleagues have shifted their support away from ODF to CDF, which they think could allow companies to export Microsoft Office documents with perfect fidelity into other, truly open workflow systems. Asay explains the difference with reference to his own company's product:

The Foundation believes that the ODF format could have addressed this issue had it added certain extensions to the standard to provide perfect interoperability with Microsoft's Office documents. Some, like Weir, doubt this:

Given the reluctance of the ODF community to take that route, the Foundation hopes to blunt the Sharepoint threat by combining the CDF format with some code it had already written for use with ODF files, called the "da Vinci" plugin. Hiser explains where the name came from:

It is the da Vinci plugin, the Foundation claims, that will allow perfect interoperability with Microsoft's Office files, which in turn will allow such documents to be taken out of the Microsoft ecosystem into open workflow software without users seeing any loss of fidelity. Partly because of the grand claims made for it, the plugin has been the cause of much of the skepticism surrounding the Foundation's ideas and future plans. As Weir wrote:

Hiser explains that in fact they had intended to release it as open source: "we actually agreed to open source it, and Massachusetts said, 'oh, great, OK'. And then the vendors that Louis [Gutierrez] asked to fund it walked away. When Louis resigned, we stopped doing work."

With the start of the CDF project, Hiser and Edwards have new priorities: "The reason for not opening sourcing it [now] is not because we're going to make a million dollars, although that's one of our goals too. We are business people: we need to fund the business that sells products, and we have to do that in about that magnitude to sustain our developing capabilities, and to feed our families." They will also be coming up with a new name for the now-defunct OpenDocument Foundation: "It takes time to do a total brand and corporate make-over," Hiser says, "but that's underway. I'm leaning toward 'Two Guys Without a Garage LLC'."

Whether or not CDF is the right format, whether the da Vinci plugin can provide 100% interoperability, and whether the new company of Hiser and Edwards will flourish, remains to be seen. In any case, the dramatic decision to break with the ODF community, and the attendant publicity it has garnered, may have already achieved something beneficial, for it has helped to direct attention towards the hitherto largely under-appreciated threat that Microsoft's Sharepoint represents for open source, open documents and open standards in the enterprise.

Asay has some thoughts on what must be done to meet that threat:

Weir agrees, and even sees a downside to Microsoft's huge installed base of Office users:

Clearly, then, now is a good time for two guys with - or even without - a garage to get coding, and found that great open source enterprise.

Glyn Moody writes about open source at opendotdotdot.

Memory part 8: Future technologies

[Editor's note: Here, at last, is the final segment of Ulrich Drepper's "What every programmer should know about memory." This eight-part series began back in September. The conclusion of this document looks at how future technologies may help to improve performance as the memory bottleneck continues to worsen. We would like to thank Ulrich one last time for giving LWN the opportunity to help shape this document and bring it to our readers. Ulrich plans to post the entire thing in PDF format sometime in the near future; we'll carry an announcement when that happens.]

8 Upcoming Technology

In the preceding sections about multi-processor handling we have seen that significant performance problems must be expected if the number of CPUs or cores is scaled up. But this scaling-up is exactly what has to be expected in the future. Processors will get more and more cores, and programs must be ever more parallel to take advantage of the increased potential of the CPU, since single-core performance will not rise as quickly as it used to.

8.1 The Problem with Atomic Operations

Synchronizing access to shared data structures is traditionally done in two ways:

- through mutual exclusion, usually by using functionality of the system runtime to achieve just that;

- by using lock-free data structures.

The problem with lock-free data structures is that the processor has to provide primitives which can perform the entire operation atomically. This support is limited: on most architectures it extends only to atomically reading and writing a word. There are two basic ways to implement this (see Section 6.4.2):

- using atomic compare-and-exchange (CAS) operations;

- using a load lock/store conditional (LL/SC) pair.

It is easy to see how a CAS operation can be implemented using LL/SC instructions. This makes CAS operations the building block for most atomic operations and lock-free data structures.

Some processors, notably the x86 and x86-64 architectures, provide a far more elaborate set of atomic operations. Many of them are optimizations of the CAS operation for specific purposes. For instance, atomically adding a value to a memory location can be implemented using CAS and LL/SC operations, but the native support for atomic increments on x86/x86-64 processors is faster. It is important for programmers to know about these operations, and the intrinsics which make them available when programming, but that is nothing new.
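To make the comparison concrete, here is a minimal sketch of an atomic add written twice: once as a CAS retry loop and once with the dedicated gcc intrinsic. The function names `add_with_cas` and `add_native` are made up for this illustration; only the `__sync_*` builtins are gcc's own.

```c
#include <assert.h>
#include <stddef.h>

/* Atomic add built from CAS: retry until no other thread changed *p
 * between our read and our compare-and-swap. Returns the old value. */
long add_with_cas(long *p, long delta)
{
    long old;

    assert(p != NULL);
    do {
        old = *(volatile long *)p;      /* snapshot the current value */
    } while (!__sync_bool_compare_and_swap(p, old, old + delta));
    return old;
}

/* The same operation using the dedicated intrinsic. On x86/x86-64 gcc
 * can usually emit a single locked instruction here, with no loop. */
long add_native(long *p, long delta)
{
    assert(p != NULL);
    return __sync_fetch_and_add(p, delta);
}
```

Both functions return the value the location held before the addition. Under contention, the CAS version may need several rounds, which is one reason the native form tends to be faster.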

The extraordinary extension of these two architectures is that they have double-word CAS (DCAS) operations. This is significant for some applications but not all (see [dcas]). As an example of how DCAS can be used, let us try to write a lock-free array-based stack/LIFO data structure. A first attempt using gcc's intrinsics can be seen in Figure 8.1.

    struct elem {
      data_t d;
      struct elem *c;
    };
    struct elem *top;

    void push(struct elem *n) {
      n->c = top;
      top = n;
    }

    struct elem *pop(void) {
      struct elem *res = top;
      if (res != NULL)
        top = res->c;
      return res;
    }

Figure 8.1: Not Thread-Safe LIFO

This code is clearly not thread-safe. Concurrent accesses in different threads will modify the global variable top without consideration of other threads' modifications. Elements could be lost, or removed elements could magically reappear. It is possible to use mutual exclusion but here we will try to use only atomic operations.

The first attempt to fix the problem uses CAS operations when installing or removing list elements. The resulting code looks like Figure 8.2.

    #define CAS __sync_bool_compare_and_swap
    struct elem {
      data_t d;
      struct elem *c;
    };
    struct elem *top;

    void push(struct elem *n) {
      do
        n->c = top;
      while (!CAS(&top, n->c, n));
    }

    struct elem *pop(void) {
      struct elem *res;
      while ((res = top) != NULL)
        if (CAS(&top, res, res->c))
          break;
      return res;
    }

Figure 8.2: LIFO using CAS

At first glance this looks like a working solution. top is never modified unless it matches the element which was at the top of the LIFO when the operation started. But we have to take concurrency at all levels into account. It might be that another thread working on the data structure is scheduled at the worst possible moment. One such case here is the so-called ABA problem. Consider what happens if a second thread is scheduled right before the CAS operation in pop and it performs the following operation:

- l = pop()

- push(newelem)

- push(l)

The end effect of this operation is that the former top element of the LIFO is back at the top but the second element is different. Back in the first thread, because the top element is unchanged, the CAS operation will succeed. But the value res->c is not the right one. It is a pointer to the second element of the original LIFO and not newelem. The result is that this new element is lost.
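The interleaving described above can be replayed deterministically in a single thread. The following sketch is an illustration only: `aba_replay` is a made-up helper, `int` stands in for `data_t`, and the "threads" are simply code sections executed in the problematic order.

```c
#include <stddef.h>

#define CAS __sync_bool_compare_and_swap

struct elem { int d; struct elem *c; };
static struct elem *top;

static void push(struct elem *n)
{
    do
        n->c = top;
    while (!CAS(&top, n->c, n));
}

static struct elem *pop(void)
{
    struct elem *res;
    while ((res = top) != NULL)
        if (CAS(&top, res, res->c))
            break;
    return res;
}

/* Replays the ABA interleaving by hand. Returns 1 if the stale CAS
 * succeeded and newelem was dropped from the LIFO. */
int aba_replay(void)
{
    static struct elem a, b, newelem;

    top = NULL;
    push(&b);
    push(&a);                        /* LIFO: a -> b */

    /* Thread 1, inside pop(): operands are read, CAS not yet executed. */
    struct elem *res = top;          /* &a */
    struct elem *next = res->c;      /* &b */

    /* Thread 2 is scheduled and runs the three operations. */
    struct elem *l = pop();          /* LIFO: b */
    push(&newelem);                  /* LIFO: newelem -> b */
    push(l);                         /* LIFO: a -> newelem -> b */

    /* Thread 1 resumes: top is &a again, so the CAS succeeds. */
    int swapped = CAS(&top, res, next);

    /* top now points at b; newelem is no longer reachable. */
    return swapped && top == &b && a.c == &newelem;
}
```

After the final CAS, `top` skips straight to the old second element, even though `a.c` still points at `newelem`: the element pushed by the second thread has silently vanished.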

In the literature [lockfree] you find suggestions to use a feature found on some processors to work around this problem. Specifically, this is about the ability of the x86 and x86-64 processors to perform DCAS operations. This is used in the third incarnation of the code in Figure 8.3.

    #define CAS __sync_bool_compare_and_swap
    struct elem {
      data_t d;
      struct elem *c;
    };
    struct lifo {
      struct elem *top;
      size_t gen;
    } l;

    void push(struct elem *n) {
      struct lifo old, new;
      do {
        old = l;
        n->c = old.top;   /* link the new element to the old top */
        new.top = n;      /* the new element becomes the top */
        new.gen = old.gen + 1;
      } while (!CAS(&l, old, new));
    }

    struct elem *pop(void) {
      struct lifo old, new;
      do {
        old = l;
        if (old.top == NULL)
          return NULL;
        new.top = old.top->c;
        new.gen = old.gen + 1;
      } while (!CAS(&l, old, new));
      return old.top;
    }

Figure 8.3: LIFO using double-word CAS

Unlike the other two examples, this is (currently) pseudo-code since gcc does not grok the use of structures in the CAS intrinsics. Regardless, the example should be sufficient to understand the approach. A generation counter is added to the pointer to the top of the LIFO. Since it is changed on every operation, push or pop, the ABA problem described above is no longer a problem. By the time the first thread is resuming its work by actually exchanging the top pointer, the generation counter has been incremented three times. The CAS operation will fail and, in the next round of the loop, the correct first and second element of the LIFO are determined and the LIFO is not corrupted. Voilà.
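The generation-counter idea can be tried out with today's gcc by substituting a different encoding. The sketch below does not use a true double-word CAS: it assumes elements live in a small static pool and packs a 32-bit generation counter and a 32-bit pool index into one 64-bit word, so the ordinary single-word intrinsic suffices. The names `pool`, `pack`, `NIL`, and `stale_cas_fails` are invented for this example, and a 32-bit counter can in principle wrap around.

```c
#include <assert.h>
#include <stdint.h>

#define NIL  UINT32_MAX              /* index meaning "no element" */
#define POOL 8

struct elem { int d; uint32_t c; };  /* c: pool index of the next element */
static struct elem pool[POOL];
static uint64_t head = NIL;          /* generation 0, empty LIFO */

static uint64_t pack(uint32_t gen, uint32_t idx)
{
    return ((uint64_t)gen << 32) | idx;
}
static uint32_t idx_of(uint64_t h) { return (uint32_t)h; }
static uint32_t gen_of(uint64_t h) { return (uint32_t)(h >> 32); }

void push(uint32_t n)
{
    uint64_t old, upd;

    assert(n < POOL);
    do {
        old = *(volatile uint64_t *)&head;
        pool[n].c = idx_of(old);             /* link to the old top */
        upd = pack(gen_of(old) + 1, n);      /* new top, next generation */
    } while (!__sync_bool_compare_and_swap(&head, old, upd));
}

uint32_t pop(void)
{
    uint64_t old, upd;

    do {
        old = *(volatile uint64_t *)&head;
        if (idx_of(old) == NIL)
            return NIL;
        upd = pack(gen_of(old) + 1, pool[idx_of(old)].c);
    } while (!__sync_bool_compare_and_swap(&head, old, upd));
    return idx_of(old);
}

/* Replays the ABA interleaving; returns 1 if the stale CAS is rejected. */
int stale_cas_fails(void)
{
    head = NIL;
    push(1);
    push(0);                                 /* LIFO: 0 -> 1 */

    uint64_t old = head;                     /* thread 1 snapshot in pop() */
    uint64_t upd = pack(gen_of(old) + 1, pool[idx_of(old)].c);

    uint32_t l = pop();                      /* thread 2 runs ... */
    push(2);
    push(l);                                 /* LIFO: 0 -> 2 -> 1 */

    return !__sync_bool_compare_and_swap(&head, old, upd);
}
```

Element 0 is back on top, but the generation has advanced by three in the meantime, so the comparison fails and the first thread has to retry with fresh values. Because the pool is static, nothing is ever freed, and reading `pool[...].c` from a stale snapshot is always safe here.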

Is this really the solution? The authors of [lockfree] certainly make it sound like it and, to their credit, it should be mentioned that it is possible to construct data structures for the LIFO which would permit using the code above. But, in general, this approach is just as doomed as the previous one. We still have concurrency problems, just now in a different place. Let us assume a thread executes pop and is interrupted after the test for old.top == NULL. Now a second thread uses pop and receives ownership of the previous first element of the LIFO. It can do anything with it, including changing all values or, in case of dynamically allocated elements, freeing the memory.

Now the first thread resumes. The old variable is still filled with the previous top of the LIFO. More specifically, the top member points to the element popped by the second thread. In new.top = old.top->c the first thread dereferences a pointer in the element. But the element this pointer references might have been freed. That part of the address space might be inaccessible and the process could crash. This cannot be allowed for a generic data type implementation. Any fix for this problem is terribly expensive: memory must never be freed, or at least it must be verified that no thread is referencing the memory anymore before it is freed. Given that lock-free data structures are supposed to be faster and more concurrent, these additional requirements completely destroy any advantage. In languages which support it, memory handling through garbage collection can solve the problem, but this comes with its price.

The situation is often worse for more complex data structures. The same paper cited above also describes a FIFO implementation (with refinements in a successor paper). But this code has all the same problems. Because CAS operations on existing hardware (x86, x86-64) are limited to modifying two words which are consecutive in memory, they are no help at all in other common situations. For instance, atomically adding or removing elements anywhere in a double-linked list is not possible. {As a side note, the developers of the IA-64 did not include this feature. They allow comparing two words, but replacing only one.}

The problem is that more than one memory address is generally involved, and only if none of the values of these addresses is changed concurrently can the entire operation succeed. This is a well-known concept in database handling, and this is exactly where one of the most promising proposals to solve the dilemma comes from.

8.2 Transactional Memory

In their groundbreaking 1993 paper [transactmem] Herlihy and Moss propose to implement transactions for memory operations in hardware since software alone cannot deal with the problem efficiently. Digital Equipment Corporation, at that time, was already battling with scalability problems on their high-end hardware, which featured a few dozen processors. The principle is the same as for database transactions: the result of a transaction becomes visible all at once or the transaction is aborted and all the values remain unchanged.

This is where memory comes into play and why the previous section bothered to develop algorithms which use atomic operations. Transactional memory is meant as a replacement for—and extension of—atomic operations in many situations, especially for lock-free data structures. Integrating a transaction system into the processor sounds like a terribly complicated thing to do but, in fact, most processors, to some extent, already have something similar.

The LL/SC operations implemented by some processors form a transaction. The SC instruction aborts or commits the transaction based on whether the memory location was touched or not. Transactional memory is an extension of this concept. Now, instead of a simple pair of instructions, multiple instructions take part in the transaction. To understand how this can work, it is worthwhile to first see how LL/SC instructions can be implemented. {This does not mean it is actually implemented like this.}

8.2.1 Load Lock/Store Conditional Implementation

If the LL instruction is issued, the value of the memory location is loaded into a register. As part of that operation, the value is loaded into L1d. The SC instruction later can only succeed if this value has not been tampered with. How can the processor detect this? Looking back at the description of the MESI protocol in Figure 3.18 should make the answer obvious. If another processor changes the value of the memory location, the copy of the value in L1d of the first processor must be revoked. When the SC instruction is executed on the first processor, it will find it has to load the value again into L1d. This is something the processor must already detect.

There are a few more details to iron out with respect to context switches (possible modification on the same processor) and accidental reloading of the cache line after a write on another processor. This is nothing that policies (cache flush on context switch) and extra flags, or separate cache lines for LL/SC instructions, cannot fix. In general, the LL/SC implementation comes almost for free with the implementation of a cache coherence protocol like MESI.
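As a rough mental model of the mechanism just described, the following toy simulation tracks, per CPU, whether the cache line loaded by LL is still valid. It is a single-threaded sketch under simplifying assumptions (one memory word, two simulated CPUs, no context switches) and is not how any real processor is implemented; all names are invented for the illustration.

```c
#include <assert.h>

#define NCPU 2

static int memory_word;       /* the one shared memory location */
static int linked[NCPU];      /* per CPU: is the L1d copy from LL still valid? */

/* A write by one CPU revokes the cached copies of all other CPUs,
 * much as a cache coherence protocol like MESI would. */
static void invalidate_others(int cpu)
{
    for (int i = 0; i < NCPU; i++)
        if (i != cpu)
            linked[i] = 0;
}

/* LL: load the value and remember that this CPU holds a valid copy. */
int ll(int cpu)
{
    assert(cpu >= 0 && cpu < NCPU);
    linked[cpu] = 1;
    return memory_word;
}

/* SC: store only if nobody wrote the location since our LL. */
int sc(int cpu, int value)
{
    assert(cpu >= 0 && cpu < NCPU);
    if (!linked[cpu])
        return 0;             /* copy was revoked: the "transaction" aborts */
    memory_word = value;
    linked[cpu] = 0;
    invalidate_others(cpu);
    return 1;
}

/* An ordinary store from some CPU. */
void plain_store(int cpu, int value)
{
    assert(cpu >= 0 && cpu < NCPU);
    memory_word = value;
    linked[cpu] = 0;
    invalidate_others(cpu);
}

/* CAS expressed with the LL/SC pair. */
int cas_llsc(int cpu, int expected, int desired)
{
    if (ll(cpu) != expected) {
        linked[cpu] = 0;
        return 0;
    }
    return sc(cpu, desired);
}
```

If CPU 0 executes `ll`, CPU 1 then stores to the word, and CPU 0 finally executes `sc`, the store conditional returns 0 because CPU 0's copy was revoked in between. `cas_llsc` also shows how a CAS operation falls out of the LL/SC pair.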

8.2.2 Transactional Memory Operations

For transactional memory to be generally useful, a transaction must not be finished with the first store instruction. Instead, an implementation should allow a certain number of load and store operations; this means we need separate commit and abort instructions. In a bit we will see that we need one more instruction which allows checking on the current state of the transaction and whether it is already aborted or not.

There are three different memory operations to implement:

- Read memory

- Read memory which is written to later

- Write memory

When looking at the MESI protocol it should be clear how this special second type of read operation can be useful. The normal read can be satisfied by a cache line in the `E' and `S' state. The second type of read operation needs a cache line in state `E'. Exactly why the second type of memory read is necessary can be glimpsed from the following discussion, but, for a more complete description, the interested reader is referred to literature about transactional memory, starting with [transactmem].

In addition, we need transaction handling which mainly consists of the commit and abort operation we are already familiar with from database transaction handling. There is one more operation, though, which is optional in theory but required for writing robust programs using transactional memory. This instruction lets a thread test whether the transaction is still on track and can (perhaps) be committed later, or whether the transaction already failed and will in any case be aborted.

We will discuss how these operations actually interact with the CPU cache and how they match to bus operation. But before we do that we take a look at some actual code which uses transactional memory. This will hopefully make the remainder of this section easier to understand.

8.2.3 Example Code Using Transactional Memory

For the example we revisit our running example and show a LIFO implementation which uses transactional memory.

    struct elem {
      data_t d;
      struct elem *c;
    };
    struct elem *top;

    void push(struct elem *n) {
      while (1) {
        n->c = LTX(top);
        ST(&top, n);
        if (COMMIT())
          return;
        ... delay ...
      }
    }

    struct elem *pop(void) {
      while (1) {
        struct elem *res = LTX(top);
        if (VALIDATE()) {
          if (res != NULL)
            ST(&top, res->c);
          if (COMMIT())
            return res;
        }
        ... delay ...
      }
    }

Figure 8.4: LIFO Using Transactional Memory

This code looks quite similar to the not-thread-safe code, which is an additional bonus as it makes writing code using transactional memory easier. The new parts of the code are the LTX, ST, COMMIT, and VALIDATE operations. These four operations are the way to request accesses to transactional memory. There is actually one more operation, LT, which is not used here. LT requests non-exclusive read access, LTX requests exclusive read access, and ST is a store into transactional memory. The VALIDATE operation is the operation which checks whether the transaction is still on track to be committed. It returns true if this transaction is still OK. If the transaction is already marked as aborting, it will be actually aborted and the next transactional memory instruction will start a new transaction. For this reason, the code uses a new if block in case the transaction is still going on.

The COMMIT operation finishes the transaction; if the transaction is finished successfully the operation returns true. This means that this part of the program is done and the thread can move on. If the operation returns a false value, this usually means the whole code sequence must be repeated. This is what the outer while loop is doing here. This is not absolutely necessary, though; in some cases giving up on the work is the right thing to do.

The interesting point about the LT, LTX, and ST operations is that they can fail without signaling this failure in any direct way. The way the program can request this information is through the VALIDATE or COMMIT operation. For the load operation, this can mean that the value actually loaded into the register might be bogus; that is why it is necessary in the example above to use VALIDATE before dereferencing the pointer. In the next section, we will see why this is a wise choice for an implementation. It might be that, once transactional memory is actually widely available, the processors will implement something different. The results from [transactmem] suggest what we describe here, though.

The push function can be summarized as this: the transaction is started by reading the pointer to the head of the list. The read requests exclusive ownership since, later in the function, this variable is written to. If another thread has already started a transaction, the load will fail and mark the still-born transaction as aborted; in this case, the value actually loaded might be garbage. This value is, regardless of its status, stored in the next field of the new list member. This is fine since this member is not yet in use, and it is accessed by exactly one thread. The pointer to the head of the list is then assigned the pointer to the new element. If the transaction is still OK, this write can succeed. This is the normal case; it can only fail if a thread uses some code other than the provided push and pop functions to access this pointer. If the transaction is already aborted at the time the ST is executed, nothing at all is done. Finally, the thread tries to commit the transaction. If this succeeds the work is done; other threads can now start their transactions. If the transaction fails, it must be repeated from the beginning. Before doing that, however, it is best to insert a delay. If this is not done the thread might run in a busy loop (wasting energy, overheating the CPU).

The pop function is slightly more complex. It also starts with reading the variable containing the head of the list, requesting exclusive ownership. The code then immediately checks whether the LTX operation succeeded or not. If not, nothing else is done in this round except delaying the next round. If the top pointer was read successfully, this means its state is good; we can now dereference the pointer. Remember, this was exactly the problem with the code using atomic operations; with transactional memory this case can be handled without any problem. The following ST operation is only performed when the LIFO is not empty, just as in the original, thread-unsafe code. Finally the transaction is committed. If this succeeds the function returns the old pointer to the head; otherwise we delay and retry. The one tricky part of this code is to remember that the VALIDATE operation aborts the transaction if it has already failed. The next transactional memory operation would start a new transaction and, therefore, we must skip over the rest of the code in the function.

How the delay code works will be something to see when implementations of transactional memory are available in hardware. If this is done badly system performance might suffer significantly.
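Until hardware offers something better, one plausible shape for the delay is a capped exponential backoff: each failed attempt doubles the wait, up to a limit. The sketch below is an assumption for illustration, not something [transactmem] prescribes; the names `backoff_spins`, `delay`, `BACKOFF_BASE`, and `BACKOFF_CAP` are hypothetical and the constants would have to be measured on real hardware.

```c
#include <assert.h>

/* Hypothetical tuning constants; real values would have to be measured. */
#define BACKOFF_BASE 16u    /* spins before the first retry */
#define BACKOFF_CAP  4096u  /* upper bound so a thread never waits too long */

static_assert(BACKOFF_BASE > 0 && BACKOFF_BASE <= BACKOFF_CAP,
              "base must be positive and not exceed the cap");

/* Number of busy-wait iterations to spend before retry number `attempt`
   (0 = first retry).  Doubles each time until it reaches BACKOFF_CAP. */
unsigned backoff_spins(unsigned attempt)
{
    unsigned spins = BACKOFF_BASE;

    while (attempt-- > 0 && spins < BACKOFF_CAP)
        spins *= 2;
    return spins < BACKOFF_CAP ? spins : BACKOFF_CAP;
}

/* Burn roughly `spins` loop iterations; the volatile counter keeps the
   compiler from optimizing the loop away. */
void delay(unsigned spins)
{
    for (volatile unsigned i = 0; i < spins; ++i)
        ;
}
```

A retry loop would call `delay(backoff_spins(attempt++))` after each failed COMMIT. A real implementation would probably also add some randomness so that competing threads do not retry in lockstep.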

8.2.4 Bus Protocol for Transactional Memory

Now that we have seen the basic principles behind transactional memory, we can dive into the details of the implementation. Note that this is not based on actual hardware. It is based on the original design of transactional memory and knowledge about the cache coherency protocol. Some details are omitted, but it still should be possible to get insight into the performance characteristics.

Transactional memory is not actually implemented as separate memory; that would not make any sense given that transactions on any location in a thread's address space are wanted. Instead, it is implemented at the first cache level. The implementation could, in theory, happen in the normal L1d but, as [transactmem] points out, this is not a good idea. We will more likely see the transaction cache implemented in parallel to L1d. All accesses will use the higher level cache in the same way they use L1d. The transaction cache is likely much smaller than L1d. If it is fully associative its size is determined by the number of operations a transaction can comprise. Implementations will likely have limits for the architecture and/or specific processor version. One could easily imagine a transaction cache with 16 elements or even less. In the above example we only needed one single memory location; algorithms with larger transaction working sets get very complicated. It is possible that we will see processors which support more than one active transaction at any one time. The number of elements in the cache then multiplies, but it is still small enough to be fully associative.

The transaction cache and L1d are exclusive. That means a cache line is in, at most, one of the caches but never in both. Each slot in the transaction cache is in, at any one time, one of the four MESI protocol states. In addition to this, a slot has a transaction state. The states are as follows (names according to [transactmem]):

- EMPTY: the cache slot contains no data. The MESI state is always 'I'.

- NORMAL: the cache slot contains committed data. The data could as well exist in L1d. The MESI state can be 'M', 'E', and 'S'. The fact that the 'M' state is allowed means that transaction commits do not force the data to be written into the main memory (unless the memory region is declared as uncached or write-through). This can significantly help to increase performance.

- XABORT: the cache slot contains data which is discarded on abort. This is obviously the opposite of XCOMMIT. All the data created during a transaction is kept in the transaction cache, nothing is written to main memory before a commit. This limits the maximum transaction size but it means that, beside the transaction cache, no other memory has to be aware of the XCOMMIT/XABORT duality for a single memory location. The possible MESI states are 'M', 'E', and 'S'.

- XCOMMIT: the cache slot contains data which is discarded on commit. This is a possible optimization processors could implement. If a memory location is changed using a transaction operation, the old content cannot be just dropped: if the transaction fails the old content needs to be restored. The MESI states are the same as for XABORT. One difference with regard to XABORT is that, if the transaction cache is full, any XCOMMIT entries in the 'M' state could be written back to memory and then, for all states, discarded.

When an LT operation is started, the processor allocates two slots in the cache. Victims are chosen by first looking for NORMAL slots for the address of the operation, i.e., a cache hit. If such an entry is found, a second slot is located, the value copied, one entry is marked XABORT, and the other one is marked XCOMMIT.

If the address is not already cached, EMPTY cache slots are located. If none can be found, NORMAL slots are looked for. The old content must then be flushed to memory if the MESI state is 'M'. If no NORMAL slot is available either, it is possible to victimize XCOMMIT entries. This is likely going to be an implementation detail, though. The maximum size of a transaction is determined by the size of the transaction cache, and, since the number of slots which are needed for each operation in the transaction is fixed, the number of transactions can be capped before having to evict XCOMMIT entries.
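The search order for a free slot described above (EMPTY first, then NORMAL, then XCOMMIT) can be sketched in a few lines of C. This is only a software model of what a processor might do in hardware; `struct slot`, `pick_victim`, and the write-back flag are hypothetical names introduced for the illustration.

```c
#include <assert.h>
#include <stddef.h>

enum tstate { EMPTY, NORMAL, XABORT, XCOMMIT };

struct slot {
    enum tstate tstate;  /* transaction state of the slot */
    char mesi;           /* MESI state: 'M', 'E', 'S' or 'I' */
};

/* Return the index of the slot to reuse, or -1 if only XABORT slots are
   left (those hold the transaction's new data and cannot be evicted).
   *needs_writeback is set when the victim holds modified data that has
   to be flushed to memory before the slot is reused. */
int pick_victim(const struct slot *slots, size_t n, int *needs_writeback)
{
    static const enum tstate order[] = { EMPTY, NORMAL, XCOMMIT };

    assert(slots != NULL && needs_writeback != NULL);
    *needs_writeback = 0;
    for (size_t pass = 0; pass < sizeof order / sizeof order[0]; ++pass)
        for (size_t i = 0; i < n; ++i)
            if (slots[i].tstate == order[pass]) {
                *needs_writeback = (slots[i].mesi == 'M');
                return (int)i;
            }
    return -1;
}
```

A return value of -1 corresponds to a transaction that has outgrown the transaction cache.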

If the address is not found in the transactional cache, a T_READ request is issued on the bus. This is just like the normal READ bus request, but it indicates that this is for the transactional cache. Just like for the normal READ request, the caches in all other processors first get the chance to respond. If none does, the value is read from the main memory. The MESI protocol determines whether the state of the new cache line is 'E' or 'S'. The difference between T_READ and READ comes into play when the cache line is currently in use by an active transaction on another processor or core. In this case the T_READ operation plainly fails; no data is transmitted. The transaction which generated the T_READ bus request is marked as failed and the value used in the operation (usually a simple register load) is undefined. Looking back to the example, we can see that this behavior does not cause problems if the transactional memory operations are used correctly. Before a value loaded in a transaction is used, it must be verified with VALIDATE. This is, in almost no cases, an extra burden. As we have seen in the attempts to create a LIFO implementation using atomic operations, the check which we added is the one missing feature which would make the lock-free code work.

The LTX operation is almost identical to LT. The one difference is that the bus operation is T_RFO instead of T_READ. T_RFO, like the normal RFO bus request, requests exclusive ownership of the cache line. The state of the resulting cache line is 'E'. Like the T_READ bus request, T_RFO can fail, in which case the used value is undefined, too. If the cache line is already in the local transaction cache with 'M' or 'E' state, nothing has to be done. If the state in the local transaction cache is 'S' the bus request has to go out to invalidate all other copies.
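The rules for when LT and LTX have to go out on the bus can be condensed into two small functions. This is a minimal sketch of the decision only, assuming the local MESI state is passed as a character with 'I' standing for "not in the transaction cache"; the enum and function names are made up for this illustration.

```c
#include <assert.h>

enum bus_request { BUS_NONE, BUS_T_READ, BUS_T_RFO };

/* LT: non-exclusive read.  Any locally cached copy is good enough. */
enum bus_request lt_bus_request(char mesi)
{
    assert(mesi == 'M' || mesi == 'E' || mesi == 'S' || mesi == 'I');
    return mesi == 'I' ? BUS_T_READ : BUS_NONE;
}

/* LTX: exclusive read.  Only an 'M' or 'E' copy avoids the bus. */
enum bus_request ltx_bus_request(char mesi)
{
    assert(mesi == 'M' || mesi == 'E' || mesi == 'S' || mesi == 'I');
    switch (mesi) {
    case 'M':
    case 'E':
        return BUS_NONE;   /* line is already owned exclusively */
    default:
        return BUS_T_RFO;  /* 'S': invalidate other copies; 'I': fetch */
    }
}
```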

The ST operation is similar to LTX. The value is first made available exclusively in the local transaction cache. Then the ST operation makes a copy of the value into a second slot in the cache and marks the entry as XCOMMIT. Lastly, the other slot is marked as XABORT and the new value is written into it. If the transaction is already aborted, or is newly aborted because the implicit LTX fails, nothing is written.

Neither the VALIDATE nor COMMIT operations automatically and implicitly create bus operations. This is the huge advantage transactional memory has over atomic operations. With atomic operations, concurrency is made possible by writing changed values back into main memory. If you have read this document thus far, you should know how expensive this is. With transactional memory, no accesses to the main memory are forced. If the cache has no EMPTY slots, current content must be evicted, and for slots in the 'M' state, the content must be written to main memory. This is not different from regular caches, and the write-back can be performed without special atomicity guarantees. If the cache size is sufficient, the content can survive for a long time. If transactions are performed on the same memory location over and over again, the speed improvements can be astronomical since, in the one case, we have one or two main memory accesses in each round while, for transactional memory, all accesses hit the transactional cache, which is as fast as L1d.

All the VALIDATE and COMMIT operations do for an aborted transaction is to mark the cache slots marked XABORT as empty and mark the XCOMMIT slots as NORMAL. Similarly, when COMMIT successfully finishes a transaction, the XCOMMIT slots are marked empty and the XABORT slots are marked NORMAL. These are very fast operations on the transaction cache. No explicit notification to other processors which want to perform transactions happens; those processors just have to keep trying. Doing this efficiently is another matter. In the example code above we simply have ...delay... in the appropriate place. We might see actual processor support for delaying in a useful way.
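The slot bookkeeping at the end of a transaction is simple enough to model directly. The following sketch treats the transaction cache as a plain array of slot states; `finish_commit` and `finish_abort` are hypothetical names, and real hardware would presumably update all slots in parallel rather than in a loop.

```c
#include <assert.h>
#include <stddef.h>

enum tstate { EMPTY, NORMAL, XABORT, XCOMMIT };

/* Successful COMMIT: the old copies (XCOMMIT) are dropped and the new
   values (XABORT) become ordinary committed data. */
void finish_commit(enum tstate *slots, size_t n)
{
    assert(slots != NULL || n == 0);
    for (size_t i = 0; i < n; ++i) {
        if (slots[i] == XCOMMIT)
            slots[i] = EMPTY;
        else if (slots[i] == XABORT)
            slots[i] = NORMAL;
    }
}

/* Aborted transaction: the new values (XABORT) are dropped and the old
   copies (XCOMMIT) are kept as committed data. */
void finish_abort(enum tstate *slots, size_t n)
{
    assert(slots != NULL || n == 0);
    for (size_t i = 0; i < n; ++i) {
        if (slots[i] == XABORT)
            slots[i] = EMPTY;
        else if (slots[i] == XCOMMIT)
            slots[i] = NORMAL;
    }
}
```

Neither function touches anything outside the array, which mirrors the point that ending a transaction needs no bus traffic.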

To summarize, transactional memory operations cause bus operations only when a new transaction is started and when a new cache line, which is not already in the transaction cache, is added to a still-successful transaction. Operations in aborted transactions do not cause bus operations. There will be no cache line ping-pong due to multiple threads trying to use the same memory.

8.2.5 Other Considerations

In Section 6.4.2, we already discussed how the lock prefix, available on x86 and x86-64, can be used to avoid the coding of atomic operations in some situations. The proposed tricks fall short, though, when there are multiple threads in use which do not contend for the same memory. In this case, the atomic operations are used unnecessarily. With transactional memory this problem goes away. The expensive RFO bus requests are issued only if memory is used on different CPUs concurrently or in succession; this is only the case when they are needed. It is almost impossible to do any better.

The attentive reader might have wondered about delays. What is the expected worst case scenario? What if the thread with the active transaction is descheduled, or if it receives a signal and is possibly terminated, or decides to use siglongjmp to jump to an outer scope? The answer to this is: the transaction will be aborted. It is possible to abort a transaction whenever a thread makes a system call or receives a signal (i.e., a ring level change occurs). It might also be that aborting the transaction is part of the OS's duties when performing system calls or handling signals. We will have to wait until implementations become available to see what is actually done.

The final aspect of transactional memory which should be discussed here is something which people might want to think about even today. The transaction cache, like other caches, operates on cache lines. Since the transaction cache is an exclusive cache, using the same cache line for transactions and non-transaction operation will be a problem. It is therefore important to

- move non-transactional data off of the cache line

- have separate cache lines for data used in separate transactions

The first point is not new; the same effort will pay off for atomic operations today. The second is more problematic since today objects are hardly ever aligned to cache lines due to the associated high cost. If the data used, along with the words modified using atomic operations, is on the same cache line, one less cache line is needed. This does not apply to mutual exclusion (where the mutex object should always have its own cache line), but one can certainly make cases where atomic operations go together with other data. With transactional memory, using the cache line for two purposes will most likely be fatal. Every normal access to data {From the cache line in question. Access to arbitrary other cache lines does not influence the transaction.} would remove the cache line from the transactional cache, aborting the transaction in the process. Cache alignment of data objects will, in the future, be not only a matter of performance but also of correctness.
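With C11, giving each transactionally accessed word its own cache line can be expressed with `alignas`. The sketch below assumes a 64-byte cache line, which is common but not universal; `struct lifo_heads` and its members are invented for the example.

```c
#include <assert.h>
#include <stdalign.h>
#include <stddef.h>

#define CACHE_LINE 64  /* assumption; the real size depends on the CPU */

struct node;  /* list element type, not needed in detail here */

/* Every member starts on its own cache line, so a normal access to
   `stats` cannot disturb a transaction working on one of the heads. */
struct lifo_heads {
    alignas(CACHE_LINE) struct node *top_a;  /* used by transactions on list A */
    alignas(CACHE_LINE) struct node *top_b;  /* used by transactions on list B */
    alignas(CACHE_LINE) long stats;          /* ordinary, non-transactional data */
};

static_assert(offsetof(struct lifo_heads, top_b) % CACHE_LINE == 0,
              "top_b must start a new cache line");
static_assert(offsetof(struct lifo_heads, stats) % CACHE_LINE == 0,
              "stats must start a new cache line");
```

The price is visible in `sizeof(struct lifo_heads)`, which grows to three full cache lines for three small members; this is the "associated high cost" mentioned above.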

It is possible that transactional memory implementations will use more precise accounting and will, as a result, not suffer from normal accesses to data on cache lines which are part of a transaction. This requires a lot more effort, though, since then the MESI protocol information is not sufficient anymore.

8.3 Increasing Latency

One thing about future development of memory technology is almost certain: latency will continue to creep up. We already discussed, in Section 2.2.4, that the upcoming DDR3 memory technology will have higher latency than the current DDR2 technology. FB-DRAM, if it should get deployed, also has potentially higher latency, especially when FB-DRAM modules are daisy-chained. Passing through the requests and results does not come for free.

The second source of latency is the increasing use of NUMA. AMD's Opterons are NUMA machines if they have more than one processor. There is some local memory attached to the CPU with its own memory controller but, on SMP motherboards, the rest of the memory has to be accessed through the Hypertransport bus. Intel's CSI technology will use almost the same technology. Due to per-processor bandwidth limitations and the requirement to keep (for instance) multiple 10Gb/s Ethernet ports busy, multi-socket motherboards will not vanish, even if the number of cores per socket increases.

A third source of latency is co-processors. We thought that we got rid of them after math co-processors for commodity processors were no longer necessary at the beginning of the 1990s, but they are making a comeback. Intel's Geneseo and AMD's Torrenza are extensions of the platform to allow third-party hardware developers to integrate their products into the motherboards. I.e., the co-processors will not have to sit on a PCIe card but, instead, are positioned much closer to the CPU. This gives them more bandwidth.

IBM went a different route (although extensions like Intel's and AMD's are still possible) with the Cell CPU. The Cell CPU consists, beside the PowerPC core, of 8 Synergistic Processing Units (SPUs) which are specialized processors mainly for floating-point computation.

What co-processors and SPUs have in common is that they, most likely, have even slower memory logic than the real processors. This is, in part, caused by the necessary simplification: all the cache handling, prefetching, etc. is complicated, especially when cache coherency is needed, too. High-performance programs will increasingly rely on co-processors since the performance differences can be dramatic. Theoretical peak performance for a Cell CPU is 210 GFLOPS, compared to 50-60 GFLOPS for a high-end CPU. The Graphics Processing Units (GPUs, processors on graphics cards) in use today achieve even higher numbers (north of 500 GFLOPS) and those could probably, with not too much effort, be integrated into the Geneseo/Torrenza systems.

As a result of all these developments, a programmer must conclude that prefetching will become ever more important. For co-processors it will be absolutely critical. For CPUs, especially with more and more cores, it is necessary to keep the FSB busy all the time instead of piling on the requests in batches. This requires giving the CPU as much insight into future traffic as possible through the efficient use of prefetching instructions.
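As a small illustration of explicit prefetching, the loop below asks for data a fixed distance ahead of the element it is currently processing, using the `__builtin_prefetch` builtin available in GCC and Clang. The function name and the distance `PREFETCH_AHEAD` are assumptions; a useful distance depends on the memory latency and the work done per element, and for a purely sequential walk like this one the hardware prefetcher may already do the job.

```c
#include <assert.h>
#include <stddef.h>

#define PREFETCH_AHEAD 64  /* elements; hypothetical value, must be tuned */

/* Sum an array while requesting the data needed PREFETCH_AHEAD
   iterations from now.  Arguments to __builtin_prefetch: address,
   0 = prefetch for reading, 0 = no temporal locality expected. */
long sum_with_prefetch(const long *a, size_t n)
{
    long sum = 0;

    assert(a != NULL || n == 0);
    for (size_t i = 0; i < n; ++i) {
        if (i + PREFETCH_AHEAD < n)
            __builtin_prefetch(&a[i + PREFETCH_AHEAD], 0, 0);
        sum += a[i];
    }
    return sum;
}
```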

8.4 Vector Operations

The multi-media extensions in today's mainstream processors implement vector operations only in a limited fashion. Vector instructions are characterized by large numbers of operations which are performed together. Compared with scalar operations, this can be said about the multi-media instructions, but it is a far cry from what vector computers like the Cray-1 or vector units for machines like the IBM 3090 did.

To compensate for the limited number of operations performed for one instruction (four float or two double operations on most machines) the surrounding loops have to be executed more often. The example in Section 9.1 shows this clearly: each cache line requires SM iterations.

With wider vector registers and operations, the number of loop iterations can be reduced. This results in more than just improvements in the instruction decoding etc.; here we are more interested in the memory effects. With a single instruction loading or storing more data, the processor has a better picture about the memory use of the application and does not have to try to piece together the information from the behavior of individual instructions. Furthermore, it becomes more useful to provide load or store instructions which do not affect the caches. With 16 byte wide loads of an SSE register in an x86 CPU, it is a bad idea to use uncached loads since later accesses to the same cache line have to load the data from memory again (in case of cache misses). If, on the other hand, the vector registers are wide enough to hold one or more cache lines, uncached loads or stores do not have negative impacts. It becomes more practical to perform operations on data sets which do not fit into the caches.

Having large vector registers does not necessarily mean the latency of the instructions is increased; vector instructions do not have to wait until all data is read or stored. The vector unit could start with the data which has already been read if it can recognize the code flow. That means, if, for instance, a vector register is to be loaded and then all vector elements multiplied by a scalar, the CPU could start the multiplication operation as soon as the first part of the vector has been loaded. It is just a matter of sophistication of the vector unit. What this shows is that, in theory, the vector registers can grow really wide, and that programs could potentially be designed today with this in mind. In practice, there are limitations imposed on the vector register size by the fact that the processors are used in multi-process and multi-thread OSes. As a result, the context switch times, which include storing and loading register values, are important.

With wider vector registers there is the problem that the input and output data of the operations cannot always be sequentially laid out in memory. This might be because a matrix is sparse, because a matrix is accessed by columns instead of rows, or for many other reasons. Vector units provide, for this case, ways to access memory in non-sequential patterns. A single vector load or store can be parametrized and instructed to load data from many different places in the address space. Using today's multi-media instructions, this is not possible at all. The values would have to be explicitly loaded one by one and then painstakingly combined into one vector register.

The vector units of the old days had different modes to allow the most useful access patterns:

- using striding, the program can specify how big the gap between two neighboring vector elements is. The gap between all elements must be the same but this would, for instance, easily allow to read the column of a matrix into a vector register in one instruction instead of one instruction per row.

- using indirection, arbitrary access patterns can be created. The load or store instruction would receive a pointer to an array which contains addresses or offsets of the real memory locations which have to be loaded.
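What the two modes compute can be written down as scalar C loops. These functions only describe the semantics; the whole point of a vector unit is that each loop would be a single instruction. The names `load_strided` and `load_indirect` are made up for this sketch, and the indirect variant uses offsets rather than addresses.

```c
#include <assert.h>
#include <stddef.h>

/* Strided load: element i comes from base[i * stride].  With stride set
   to the row length this reads one column of a row-major matrix. */
void load_strided(double *dst, const double *base, size_t stride, size_t count)
{
    assert(stride > 0);
    for (size_t i = 0; i < count; ++i)
        dst[i] = base[i * stride];
}

/* Indirect load (gather): element i comes from base[index[i]], so the
   access pattern is completely arbitrary. */
void load_indirect(double *dst, const double *base,
                   const size_t *index, size_t count)
{
    assert(index != NULL || count == 0);
    for (size_t i = 0; i < count; ++i)
        dst[i] = base[index[i]];
}
```

For a 3x4 matrix stored row by row in `m`, the call `load_strided(col, &m[1], 4, 3)` collects the second column.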

It is unclear at this point whether we will see a revival of true vector operations in future versions of mainstream processors. Maybe this work will be relegated to co-processors. In any case, should we get access to vector operations, it is all the more important to correctly organize the code performing such operations. The code should be self-contained and replaceable, and the interface should be general enough to efficiently apply vector operations. For instance, interfaces should allow adding entire matrixes instead of operating on rows, columns, or even groups of elements. The larger the building blocks, the better the chance of using vector operations.

In [vectorops] the authors make a passionate plea for the revival of vector operations. They point out many advantages and try to debunk various myths. They paint an overly simplistic image, though. As mentioned above, large register sets mean high context switch times, which have to be avoided in general purpose OSes. See the problems of the IA-64 processor when it comes to context switch-intensive operations. The long execution time for vector operations is also a problem if interrupts are involved. If an interrupt is raised, the processor must stop its current work and start working on handling the interrupt. After that, it must resume executing the interrupted code. It is generally a big problem to interrupt an instruction in the middle of the work; it is not impossible, but it is complicated. For long running instructions this has to happen, or the instructions must be implemented in a restartable fashion, since otherwise the interrupt reaction time is too high. The latter is not acceptable.

Vector units also were forgiving as far as alignment of the memory access is concerned, which shaped the algorithms which were developed. Some of today's processors (especially RISC processors) require strict alignment so the extension to full vector operations is not trivial. There are big potential upsides to having vector operations, especially when striding and indirection are supported, so that we can hope to see this functionality in the future.

Appendices and bibliography

The appendices and bibliography page contains, among other things, the source code for a number of the benchmark programs used for this document, more information on oprofile, some discussion of memory types, an introduction to libNUMA, and the bibliography.

LWN comes early next week

Thursday, November 22 is the U.S. Thanksgiving holiday. As has become traditional, the LWN Weekly Edition will come out one day early next week so that your editors can focus on fasting in preparation for the Thanksgiving feast. After a few days of football, food, and avoidance of holiday shopping we'll return to our normal schedule on the 29th.

Security

Centralizing policy rules with PolicyKit

Linux security policy is very simple at its core: the root user can do anything, while all other users can do very little. Unfortunately, administrators want to be able to allow other users to do a limited subset of the things root is permitted to do. Various solutions have been implemented to try and solve this problem, with a recent one being PolicyKit.

Mounting removable filesystems, CDs, USB devices, and the like, is a classic example of a root-only task that some non-privileged users might be allowed to perform. In the past, various mechanisms using groups or mount options in /etc/fstab have been used with some success, but the mechanisms were specific to mounting and did not provide the flexibility that some administrators would like. Network configuration - particularly for wireless networking - is another common task that users might be allowed to do.

PolicyKit is an attempt to centralize these kinds of decisions into a single policy file that the administrator can use to set the kinds of access regular users should be allowed. Previous solutions required changing each utility to handle policy, each in its own unique format and file(s). PolicyKit is meant to avoid all of that, creating a single place, with a consistent syntax (the now ubiquitous XML), that details the policy rules for that system.

The PolicyKit philosophy decouples the "mechanism" of performing the privileged operation from the user interface used to request it. A GUI network configuration tool might allow a user to choose a different network to associate with, but would have no direct means to make that change. It would, instead, contact a privileged network management daemon through an interprocess communication mechanism (such as D-Bus). That daemon would use the PolicyKit API to determine whether to grant the request.

In order to make that decision, PolicyKit needs three pieces of information: the subject, object, and action. The subject is the user and process requesting the action, while the object is what entity the action is being requested on. In a network configuration example, the subject would be a uid, along with additional identifying information such as SELinux security context, the action would be to change the association, and the object would be the network device.

The mechanism code calls the PolicyKit library, passing the subject, object, and action, receiving back a decision. The decision could be a simple yes or no, or it could, instead, require that the user authenticate - either as themselves or as root - before allowing the operation. Re-authentication can be required on sensitive actions, such as those that malware or malicious users might want to perform, to provide an additional layer of security. All of this policy is governed by the entries in the PolicyKit configuration file. Once the decision has been rendered, it is up to the mechanism to enforce it. This may require coordination with the UI, especially if authentication is required.

Administrators can modify the PolicyKit configuration by way of an XML file, usually PolicyKit.conf. The man page gives a few examples of entries like the following:

    <match action="org.freedesktop.hal.storage.mount-fixed">
      <match user="davidz">
        <return result="yes"/>
      </match>
      <match user="freddy">
        <return result="no"/>
      </match>
    </match>

As should be relatively easy to see, this configuration would allow the user "davidz" to perform the action "mount-fixed" while disallowing the user "freddy". Depending on the default value for that action, as specified in the mechanism's configuration, users not listed could be denied, allowed, or be required to authenticate.
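The lookup that this configuration implies, per-user rules first and the action's default otherwise, can be modeled in a few lines of C. This is emphatically not the PolicyKit API; `enum decision`, `struct user_rule`, and `decide` are hypothetical names used only to show how the `<match user=...>` entries above combine with a default.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Possible outcomes: deny, allow, or require authentication first. */
enum decision { DECISION_NO, DECISION_YES, DECISION_AUTH };

struct user_rule {
    const char *user;
    enum decision result;
};

/* Rules for the "mount-fixed" action, mirroring the XML entries. */
const struct user_rule mount_fixed_rules[] = {
    { "davidz", DECISION_YES },
    { "freddy", DECISION_NO  },
};

/* Return the result of the first rule matching `user`, or the action's
   default (taken from the mechanism's configuration) if none matches. */
enum decision decide(const struct user_rule *rules, size_t n,
                     const char *user, enum decision action_default)
{
    assert(user != NULL);
    for (size_t i = 0; i < n; ++i)
        if (strcmp(rules[i].user, user) == 0)
            return rules[i].result;
    return action_default;
}
```

With a default of `DECISION_AUTH`, an unlisted user would be asked to authenticate, while "davidz" and "freddy" get their fixed answers regardless of the default.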

Configuration for mechanisms, which lists the supported actions along with a default policy, is specified in a separate XML file. The mechanism could generate the file on startup and remove it on exit, completely removing the action from the system when it is inactive. Action configuration also includes the message strings that will be displayed by the UI when authenticating or denying users.

PolicyKit has been added to Fedora 8, but is, as yet, mostly unused. There are plans to integrate various GNOME configuration tools with PolicyKit for Fedora 9 and a GNOME API has been created to assist with that. One would guess that a KDE API is in the wings as well.

PolicyKit is not meant to replace SELinux or other security mechanisms; it is simply a means to allow users more privileges in a centralized, easily audited way. PolicyKit works within the existing access control framework, using whatever privileges have been given to the mechanism. In the end, PolicyKit just provides advice to another program; it is up to that program to enforce the decision, while the OS can and does enforce its own rules upon that process.

New vulnerabilities

3proxy: denial of service

| Package(s): | 3proxy | CVE #(s): | CVE-2007-5622 |
| Created: | November 9, 2007 | Updated: | November 14, 2007 |
| Description: | Double-free vulnerability in the ftpprchild function in ftppr in 3proxy 0.5 through 0.5.3i allows remote attackers to cause a denial of service (daemon crash) via multiple OPEN commands to the FTP proxy. |

Django: denial of service

| Package(s): | Django | CVE #(s): | CVE-2007-5712 |
| Created: | November 12, 2007 | Updated: | September 22, 2008 |
| Description: | From the CVE notice: The internationalization (i18n) framework in Django 0.91, 0.95, 0.95.1, and 0.96, and as used in other products such as PyLucid, when the USE_I18N option and the i18n component are enabled, allows remote attackers to cause a denial of service (memory consumption) via many HTTP requests with large Accept-Language headers. |

emacs: command execution via local variables

| Package(s): | emacs | CVE #(s): | CVE-2007-5795 |
| Created: | November 14, 2007 | Updated: | February 5, 2008 |
| Description: | From the original Debian problem report: "In Debian's version of GNU Emacs 22.1+1-2, the `hack-local-variables' function does not behave correctly when `enable-local-variables' is set to :safe. The documentation of `enable-local-variables' states that the value :safe means to set only safe variables, as determined by `safe-local-variable-p' and `risky-local-variable-p' (and the data driving them), but Emacs ignores this and instead sets all the local variables." When this setting (which is not the default) is in effect, opening a hostile file could lead to the execution of arbitrary commands. |

gforge: multiple vulnerabilities

| Package(s): | gforge | CVE #(s): | CVE-2007-3921 |
| Created: | November 8, 2007 | Updated: | November 14, 2007 |
| Description: | The GForge collaborative development tool uses temp files in an insecure manner. Local users can use this to truncate files with the privileges of the gforge user, they can also use this to cause a denial of service. |

glib2: multiple vulnerabilities

| Package(s): | glib2 | CVE #(s): | |
| Created: | November 8, 2007 | Updated: | November 14, 2007 |
| Description: | The glib2 library has multiple (currently unspecified) vulnerabilities in PCRE. |

horde3: multiple vulnerabilities

| Package(s): | horde3 | CVE #(s): | CVE-2006-3548 CVE-2006-3549 CVE-2006-4256 CVE-2007-1473 CVE-2007-1474 | ||||

| Created: | November 12, 2007 | Updated: | November 14, 2007 | ||||

| Description: | From the Debian advisory: Several remote vulnerabilities have been discovered in the Horde web application framework. The Common Vulnerabilities and Exposures project identifies the following problems: CVE-2006-3548: Moritz Naumann discovered that Horde allows remote attackers to inject arbitrary web script or HTML in the context of a logged in user (cross site scripting). CVE-2006-3549: Moritz Naumann discovered that Horde does not properly restrict its image proxy, allowing remote attackers to use the server as a proxy. CVE-2006-4256: Marc Ruef discovered that Horde allows remote attackers to include web pages from other sites, which could be useful for phishing attacks. CVE-2007-1473: Moritz Naumann discovered that Horde allows remote attackers to inject arbitrary web script or HTML in the context of a logged in user (cross site scripting). CVE-2007-1474: iDefense discovered that the cleanup cron script in Horde allows local users to delete arbitrary files. | ||||||


inotify-tools: arbitrary code execution

| Package(s): | inotify-tools | CVE #(s): | CVE-2007-5037 |

| Created: | November 12, 2007 | Updated: | December 28, 2007 |

| Description: | From the Fedora advisory: A vulnerability has been reported in inotify-tools, which can potentially be exploited by malicious users to compromise an application using the library. Successful exploitation may allow the execution of arbitrary code with privileges of the application using the affected library. NOTE: The programs shipped with inotify-tools are reportedly not affected. The vulnerability is reported in versions prior to 3.11. |


kernel: remote denial of service

| Package(s): | kernel | CVE #(s): | CVE-2006-6058 CVE-2007-4997 |

| Created: | November 9, 2007 | Updated: | June 13, 2008 |

| Description: | The Minix filesystem code in Linux kernel 2.6.x up to 2.6.18, and possibly other versions, allows local users to cause a denial of service (hang) via a malformed minix file stream that triggers an infinite loop in the minix_bmap function. NOTE: this issue might be due to an integer overflow or signedness error. Integer underflow in the ieee80211_rx function in net/ieee80211/ieee80211_rx.c in the Linux kernel 2.6.x before 2.6.23 allows remote attackers to cause a denial of service (crash) via a crafted SKB length value in a runt IEEE 802.11 frame when the IEEE80211_STYPE_QOS_DATA flag is set, aka an "off-by-two error." |


link-grammar: stack-based buffer overflow

| Package(s): | link-grammar | CVE #(s): | CVE-2007-5395 |

| Created: | November 13, 2007 | Updated: | December 17, 2007 |

| Description: | Stack-based buffer overflow in the separate_word function in tokenize.c in Link Grammar 4.1b and possibly other versions, as used in AbiWord Link Grammar 4.2.4, allows remote attackers to execute arbitrary code via a long word, as reachable through the separate_sentence function. |


madwifi: denial of service

| Package(s): | madwifi | CVE #(s): | CVE-2007-5448 |

| Created: | November 8, 2007 | Updated: | January 11, 2008 |

| Description: | The MadWifi driver for Atheros wireless LAN cards does not process beacon frames correctly. A remote attacker can exploit this to cause a denial of service. |


openldap: denial of service

| Package(s): | openldap | CVE #(s): | CVE-2007-5707 |

| Created: | November 8, 2007 | Updated: | April 9, 2008 |

| Description: | The OpenLDAP Lightweight Directory Access Protocol suite has a problem with handling of malformed objectClasses LDAP attributes by the slapd daemon. Both local and remote attackers can use this to crash slapd, causing a denial of service. |


php: denial of service

| Package(s): | php | CVE #(s): | CVE-2007-4887 |

| Created: | November 12, 2007 | Updated: | November 20, 2007 |

| Description: | From the CVE entry: The dl function in PHP 5.2.4 and earlier allows context-dependent attackers to cause a denial of service (application crash) via a long string in the library parameter. NOTE: there are limited usage scenarios under which this would be a vulnerability. |


poppler and xpdf: multiple vulnerabilities

| Package(s): | poppler xpdf | CVE #(s): | CVE-2007-4352 CVE-2007-5392 CVE-2007-5393 |

| Created: | November 8, 2007 | Updated: | February 26, 2008 |

| Description: | The xpdf and poppler PDF libraries contain several vulnerabilities which can lead to arbitrary command execution via hostile PDF files. Numerous other applications which use these libraries (PDF viewers, CUPS, etc.) will be affected by the vulnerabilities as well. |


tomboy: execution of arbitrary code

| Package(s): | tomboy | CVE #(s): | CVE-2005-4790 |

| Created: | November 9, 2007 | Updated: | February 22, 2011 |

| Description: | Jan Oravec reported that the "/usr/bin/tomboy" script sets the "LD_LIBRARY_PATH" environment variable incorrectly, which might result in the current working directory (.) being included when searching for dynamically linked libraries of the Mono Runtime application. Note that the tomboy vulnerability was added in 2007. |

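As an illustration of how an incorrectly set LD_LIBRARY_PATH can pull in the current working directory, here is a minimal Python sketch. It assumes the wrapper script used the common idiom of prepending a directory followed by a colon and the inherited value; that idiom, the function names, and the `/usr/lib/tomboy` path are assumptions for illustration and are not taken from the tomboy script itself. The sketch relies on the documented behavior of the dynamic linker, which treats a zero-length entry in the path as the current directory.

```python
def unsafe_join(app_dir, inherited):
    # Assumed idiom: always append ":" plus the inherited value.
    # When the inherited value is empty, this leaves a trailing empty entry.
    return app_dir + ":" + inherited


def safe_join(app_dir, inherited):
    # Only add the separator when there is something to append.
    return app_dir + ":" + inherited if inherited else app_dir


def search_dirs(path_value):
    # Model of the linker's rule: a zero-length entry means the
    # current working directory.
    return [entry if entry else "." for entry in path_value.split(":")]


if __name__ == "__main__":
    # Hypothetical application directory, used only for the demonstration.
    print(search_dirs(unsafe_join("/usr/lib/tomboy", "")))
    print(search_dirs(safe_join("/usr/lib/tomboy", "")))
```

With an empty inherited value, the unsafe form yields a search list that includes ".", so a hostile library placed in whatever directory the user starts the program from could be loaded; the safe form does not.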

zope-cmfplone: arbitrary code execution

| Package(s): | zope-cmfplone | CVE #(s): | CVE-2007-5741 |

| Created: | November 12, 2007 | Updated: | December 28, 2007 |

| Description: | From the Debian advisory: It was discovered that Plone, a web content management system, allows remote attackers to execute arbitrary code via specially crafted web browser cookies. |


Page editor: Jake Edge

Kernel development

Brief items

Kernel release status

The current 2.6 prepatch remains 2.6.24-rc2. Quite a few patches have found their way into the mainline git repository since -rc2 was released; they are mostly fixes but there's also some ongoing CIFS ACL support work and the removal of a number of obsolete documents. Expect the -rc3 release sometime in the very near future.

The current -mm tree is 2.6.24-rc2-mm1 - the first -mm release since 2.6.23-mm1 came out on October 11. Recent changes to -mm include a number of device mapper updates, a big driver tree update (which has broken a number of things), a lot of IDE updates, bidirectional SCSI support, a large set of SLUB fixes and other "mammary manglement" patches, 64-bit capability support, a number of ext4 enhancements, and the PCI hotplug development tree.

The -mm of the minute tree

In the introduction to 2.6.24-rc2-mm1 (the first -mm tree in some time), Andrew Morton noted that some people want something even more bleeding-edge. So he has created the -mm of the minute tree, which is updated a few times every day. "I will attempt to ensure that the patches in there actually apply, but they sure as heck won't all compile and run." The tree is exported as a patch series, so Quilt is needed to turn it into something which can be compiled. Have fun.

Kernel development news

Quotes of the week

LKML has 10-20x the traffic of linux-scsi and a much smaller signal to noise ratio. Having a specialist list where all the experts in the field hang out actually enhances our ability to fix bugs.

Various topics related to kernel quality

Discussions of kernel quality are not a new phenomenon on linux-kernel. It is, indeed, a topic which comes up with a certain regularity, more so than with many other free software projects. The size of the kernel, the rate at which its code changes, and the wide range of environments in which the kernel runs all lead to unique challenges; add in the fact that kernel bugs can lead to catastrophic system failures and you have the material for no end of debate.

The latest round began when Natalie Protasevich, a Google developer who spends some time helping Andrew Morton track bugs, posted this list of a few dozen open bugs which seemed worthy of further attention. Andrew responded with his view of what was happening with those bug reports; that view was "no response from developers" in most cases:

A number of developers came back saying, in essence, that Andrew was employing an overly heavy hand and that his assertions were not always correct. Regardless of whether his claims are correct, Andrew has clearly touched a nerve.

He defended his posting by raising his often-expressed fear that the quality of the kernel is in decline. This is, he says, something which requires attention now:

But is the kernel deteriorating? That is a very hard question to answer for a number of reasons. There is no objective standard by which the quality of the kernel can be judged. Certain kinds of problems can be found by automated testing, but, in the kernel space, many bugs can only be found by running the kernel with specific workloads on specific combinations of hardware. A rising number of bug reports does not necessarily indicate decreasing quality when both the number of users and the size of the code base are increasing.

Along the same lines, as Ingo Molnar pointed out, a decreasing number of bug reports does not necessarily mean that quality is improving. It could, instead, indicate that testers are simply getting frustrated and dropping out of the development process - a worsening kernel could actually cause the reporting of fewer bugs. So Ingo says we need to treat our testers better, but we also need to work harder at actually measuring the quality of the kernel:

It is generally true that problems which can be measured and quantified tend to be addressed more quickly and effectively. The classic example is PowerTop, which makes power management problems obvious. Once developers could see where the trouble was and, more to the point, could see just how much their fixes improved the situation, vast numbers of problems went away over a short period of time. At the moment, the kernel developers can adopt any of a number of approaches to improving kernel quality, but they will not have any way of really knowing if that effort is helping the situation or not. In the absence of objective measurements, developers trying to improve kernel quality are really just groping in the dark.

As an example, consider the discussion of the "git bisect" feature. If one is trying to find a regression which happened between 2.6.23 and 2.6.24-rc1, one may have to look at several thousand patches to find the one which caused the problem - a task which most people tend to find just a little intimidating. Bisection helps the tester perform a binary search over a range of patches, eliminating half of them in each compile-and-boot cycle. Using bisect, a regression can be tracked down in a relatively automatic way with "only" a dozen or so kernel builds and reboots. At the end of the process, the guilty patch will have been identified in an unambiguous way.
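The arithmetic behind "a dozen or so" builds can be seen in a small Python sketch of the binary search. This is a simplified model, not how git itself is implemented: it assumes a strictly linear series of patches (real kernel history is a graph of merges), and the function name, the `is_bad` callback, and the patch count of 5000 are all hypothetical choices for illustration.

```python
def bisect_first_bad(num_patches, is_bad):
    """Find the first patch that introduces a regression.

    is_bad(i) stands in for one compile-and-boot cycle: it reports whether
    the tree with patches 0..i applied shows the problem. The tree with
    every patch applied is assumed to be bad, and the tree with none
    applied is assumed to be good.
    """
    lo, hi = 0, num_patches - 1  # the first bad patch lies in [lo, hi]
    builds = 0
    while lo < hi:
        mid = (lo + hi) // 2
        builds += 1
        if is_bad(mid):
            hi = mid       # regression is at mid or earlier
        else:
            lo = mid + 1   # regression was introduced after mid
    return lo, builds


if __name__ == "__main__":
    culprit = 3141  # hypothetical position of the guilty patch
    found, builds = bisect_first_bad(5000, lambda i: i >= culprit)
    print(found, builds)
```

Because each cycle halves the remaining range, a series of 5000 patches needs at most 13 test builds in this model, which is consistent with the "dozen or so" figure above.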

Bisection works so well that developers will often ask a tester to use it to track down a problem they are reporting. Some people see this practice as a way for lazy kernel developers to dump the work of tracking down their bugs on the users who are bitten by those bugs. Building and testing a dozen kernels is, they say, too much to ask of a tester. Mark Lord, for example, asserts that most bugs are relatively easy to find when a developer actually looks at the code; the whole bisect process is often unnecessary:

On the other hand, some developers see bisection as a powerful tool which has made it easier for testers to actively help the process. David Miller says:

Returning to the original bug list: another issue which came up was the use of mailing lists other than linux-kernel. Some of the bugs had not been addressed because they had never been reported to the mailing list dedicated to the affected subsystem. Other bugs, marked by Andrew as having had no response, had, in fact, been discussed (and sometimes fixed) on subsystem-specific lists. In both situations, the problem is a lack of communication between subsystem lists and the larger community.

In response, some developers have, once again, called for a reduction in the use of subsystem-specific lists. We are, they say, all working on a single kernel, and we are all interested in what happens with that kernel. Discussing kernel subsystems in isolation is likely to result in a lower-quality kernel. Ingo Molnar expresses it this way: