Leading items

Welcome to the LWN.net Weekly Edition for September 11, 2025

This edition contains the following feature content:

- Introducing Space Grade Linux: an effort to create a distribution suited to the harsh environment beyond the atmosphere.

- KDE launches its own distribution (again): a new vehicle to showcase the KDE desktop.

- Rug pulls, forks, and open-source feudalism: Dawn Foster on how tactics aimed at shifting power within open-source projects work out.

- The dependency tracker for complex deadlock detection: a more sophisticated tool for the identification of potential kernel deadlocks.

- How many ways are there to configure the Linux kernel?: the kernel's configuration options allow for an intimidating number of possibilities.

- Testing the 2-in-1 Framework 12 Laptop: a device intended to be open, user-upgradeable, and user-repairable.

This week's edition also includes these inner pages:

- Brief items: Brief news items from throughout the community.

- Announcements: Newsletters, conferences, security updates, patches, and more.

Please enjoy this week's edition, and, as always, thank you for supporting LWN.net.

Introducing Space Grade Linux

A new project, targeting Linux for the proverbial final frontier—outer space—was the subject of a talk (YouTube video) at the Embedded Linux Conference, which was held as part of Open Source Summit Europe in Amsterdam in late August. Ramón Roche introduced Space Grade Linux (SGL), which is currently incubating as a special interest group (SIG) of the Embedding Linux in Safety Applications (ELISA) project. The idea is to create a distribution with a base layer that can be used for off-planet missions of various sorts, along with other layers that can be used to customize it for different space-based use cases.

By way of an introduction, Roche said that he is from Mexico and is the maintainer of a few open-source-robotics platforms; he comes from many years in the robotics industry. He is the general manager of the Dronecode project and is the co-lead of its Aerial Robotics interest group. Beyond that, he and Ivan Perez are co-leading the Space Grade Linux SIG.

Linux and space

Roche asked the audience a series of questions about space, ending with: "is Linux in space a worthwhile dream?" The audience responded affirmatively, but it was a trick question. "The answer is 'no', it's actually a present reality, not a dream." He then went through a list of projects using Linux in space including the James Webb Space Telescope, SpaceX Starlink satellites, the Ingenuity helicopter on Mars, the International Space Station (ISS), and more. He noted that Starlink is the largest deployment of Linux in space, already, with a plan for up to 30,000 Linux-based satellites in its constellation.

From his slides, he showed an exploded view of the different parts and pieces of the ISS (seen above), which demonstrated that it consists of "tons of modules, from different agencies distributed across the world, much like open source". That kind of project requires a bunch of standards so that the various modules can fit and work together. The result can look rather chaotic, as his slide of the ISS interior with cables and gear seemingly haphazardly scattered about (seen below) showed. "There's a lot of Linux running in there", he said.

He asked if anyone knew what was driving the increase in space activity recently; Tim Bird, who is part of the project, suggested "lower cost to orbit", which Roche said was "exactly right". He put up a "flywheel" diagram and a graph of payload cost by kilogram that came from a blog post by Max Olson. The former shows how all of the different improvements in the process (increased safety, lower development costs, cheaper launches, higher customer confidence, and so on) feed right back into increasing the number of launches.

But the main factor driving launches is the decreased cost to deliver payloads to space. In the 1960s, the cost for a kilogram to orbit was up to $20,000, in a 20-ton rocket. In 2020, the SpaceX Starship, at 17 tons, was estimated to be able to deliver a kilogram for $190; if Starship ends up being mass produced, so there are, say, 50 of them, that estimate drops to around $60. "That means that anyone can, eventually, deploy to space, which will mean that the industry will only grow."

Linux in space

"If Linux is already in space, and deployments will only increase, what are we doing here?" One of the problems is that there is a lack of standards for which Linux is being used, and that is hurting the industry, Roche said. The SIG did an informal survey of the distributions being used in space missions, which showed the fragmentation; most were using Yocto-based distributions, but there were many others, including Android, Arch Linux, Red Hat, and Ubuntu, as well as non-Linux solutions such as RTEMS, Zephyr, FreeRTOS, and others.

![Ramón Roche [Ramón Roche]](https://static.lwn.net/images/2025/elce-roche-sm.png)

In truth, most of the deployed missions are one-off designs that are not being reused; "we're not yet in an era where there are mass-produced deployments out there". There is almost no portability of code (or hardware) between missions, nor is there any "cross-vendor knowledge transfer". Vendors are not working out standards between themselves to make space-mission designs reusable in various ways.

There are a number of space-environment considerations to keep in mind. For example, there is no traditional networking in space, he said. Communication is slow with periodic loss-of-signal events. Right now, those overseeing devices in space need to have system-administration skills in order to send commands and retrieve telemetry from them. Going forward, that should be fixed, he said, because not everyone in mission control will have those skills. There are hardware-related concerns, as well, including radiation-induced failures of various sorts, NAND memory bit flips, and power consumption.

The idea behind SGL is to harness the "main characteristic of open source", community, in order to build a Linux distribution for space. Fostering an ecosystem for Linux in space is also planned; the first steps are to gather the relevant stakeholders to ensure that all of the important use cases are known. The plan is for SGL to be modeled on Automotive Grade Linux (AGL).

The approach is to have a Yocto base layer with the base configuration, then add other layers on top. SGL uses the MIT license for its code, with a developer certificate of origin required for contributions. It is planned to support specific hardware platforms as well.

The primary objectives of the project include reducing both costs and errors in building space-based devices. Instead of reinventing the wheel for each new mission, SGL will provide reusable software that will help minimize the development time, thus getting devices to space more quickly. Many space missions have similar requirements; they need an operating system that meets safety requirements, is radiation-resistant, and so on.

There is also a goal of reducing resistance to using Linux in space. Part of why the Linux Foundation is involved is to help overcome concerns about using open-source software for space missions. Beyond that, the project wants to facilitate high-performance workloads in space by ensuring that the base layer will work on next-generation boards and processors for spacecraft.

Status

Currently, the meta-sgl repository has a skeleton layer for Yocto that is focused on configuration of the kernel and other components for handling single-event upsets (or errors). Some of the areas that are being investigated and addressed are watchdog timers and fault detection, filesystem reliability with respect to radiation and power loss, over-the-"air" (really space) updates, bootloader and startup integrity, and others. The project is also working with other groups that are already deploying Linux in space to gather real-world requirements, which will be used to set priorities.

The three main user-space applications that are used in space right now are the NASA Core Flight System (cFS), NASA F Prime, and Open Robotics Space Robot Operating System (Space ROS), Roche said. They each have a "collection of middleware modules that the different missions utilized to be able to achieve autonomy". SGL is deriving requirements from those other platforms in order to ensure that its base layer covers those needs.

The hope is that the first demos for SGL will be given at the next Open Source Summit, he said, which will show one or more of those applications running on actual hardware. In addition, there will be simulations of spacecraft based on SGL running on that hardware.

The BeagleV-Fire board is the current target hardware. It is similar to a Raspberry Pi, he said, but is based on the RISC-V architecture. It is "very affordable and we're choosing this so that everyone on the team can have it and we can deploy and test easily".

Looking at the roadmap, the first two items, the Yocto skeleton layer and the continuous-integration/continuous-development (CI/CD) pipeline, have both been completed. The initial documentation is a work in progress, while software bill of materials (SBOM) creation is awaiting some other work before it will be generated along with other build artifacts. Then there are a number of areas that are currently under investigation, including hardware testing, using fault injection in QEMU to test radiation resistance, the user-space layers, and the SGL demo.

The ELISA project is hosting the SGL SIG and is helping to provide it with the structure of a Linux Foundation project. There are monthly Zoom meetings, a mailing list, a Discord channel, and an organization GitHub repository for meeting minutes and the like. There are over 20 companies and organizations that have participated in the project so far, he said.

The NASA Goddard Space Flight Center hosted an ELISA workshop in December 2024. There were 30 in-person attendees and 40 virtual participants. The presentations and slides are available from the workshop. The initial direction of the SGL project was formulated during the workshop. The intent is to spin out SGL into its own foundation, with its own governance, but there is a need for funding in order to make that happen, Roche said.

He fairly quickly went through the responses to a survey that the project had completed around the time of the workshop. It only had 20 responses, so there are plans to do another sometime soon. It showed that half the respondents were from the space industry, around 32% were from aerospace, while automotive and other were both around 9%. Two-thirds of the respondents were using Linux for space-based projects. The survey questions covered areas like development tools, init daemons, flight software, security practices and standards, CPU architectures, hardware platforms, and more. The graphs of the responses can be seen in the "bonus content" in his slides.

Q&A

In response to a question from someone who was somewhat surprised about mid-mission software updates, Roche said that some projects are definitely doing that. Bird described some of the current practices, noting that spacecraft in low-earth orbit are often not updated, since they only have around a five-year lifespan due to orbital decay. On the other hand, "SpaceX notoriously does a lot of updating of their software". Beyond that, "we haven't seen Linux in deep space a whole lot yet"; only four or five projects have gone beyond low-earth orbit with Linux, he said.

Another question was about how SGL could provide software for wildly different mission types, such as for both the Ingenuity helicopter and a module for the ISS. Roche said that the idea is that the base layer will provide things that every mission type needs, such as radiation hardening, and then optional layers providing functionality like autonomy and automation services. Projects would put together whichever layers they need to fulfill their mission objectives.

It would seem that AGL and SGL might be able to work together and even share code, another attendee suggested. Roche said there was some overlap between the projects, so it would make sense to work together. The base requirements and configuration are different between the two, however. SGL is definitely trying to follow the model that AGL used, both to develop the distribution and to help create an ecosystem around it.

Safety certification for space was the topic of another question. It is something the SGL project would like to pursue, Roche said; if there is a mission that is interested in getting certified, SGL would like to work with it on that. Bird noted that there are tiers of safety certification for space, with manned missions having the most stringent requirements. "Somehow those tiers get relaxed in the face of cost." SpaceX is running Linux on manned missions, and Linux does not meet "all of the safety protocols that NASA has had for decades"; somehow the company is making that work, he said.

There was some palpable excitement in the room; Linux and free software in space is something that resonated with many attendees. The SGL project is in the early stages and will need contributions, both of time and money, to further its goals. After achieving world domination, Linux seems poised to continue its expansion: to low-earth orbit, the moon, planets, asteroids—and beyond. Can the 8th dimension be all that far behind?

[I would like to thank the Linux Foundation, LWN's travel sponsor, for supporting my trip to Amsterdam for the Embedded Linux Conference Europe.]

KDE launches its own distribution (again)

At Akademy 2025, the KDE Project released an alpha version of KDE Linux, a distribution built by the project to "include the best implementation of everything KDE has to offer, using the most advanced technologies". It is aimed at providing an operating system suitable for home use, business use, OEM installations, and more "eventually". For now there are many rough edges and missing features that users should be aware of before taking the plunge; but it is an interesting look at the kind of complete Linux system that KDE developers would like to see.

Development and goals

KDE contributor Nate Graham wrote an announcement blog post on September 6 to accompany the release of KDE Linux. Harald Sitter had introduced the project as "Project Banana" during a talk (video, slides) at Akademy in 2024, and has been leading its development along with major contributions from Hadi Chokr, Lasath Fernando, Justin Zobel, Graham, and others.

KDE Linux is an immutable distribution that uses Arch Linux packages as its base, but Graham notes that it is "definitely not an 'Arch-based distro!'" Pacman is not included, and Arch is used only for the base operating system. Everything else, he said, is either compiled from source using KDE Builder or installed using Flatpak.

Some may wonder why another Linux distribution is needed; Graham said that he has expressed that sentiment himself in the past regarding other distributions, but he thinks that KDE Linux is justified:

KDE is a huge producer of software. It's awkward for us to not have our own method of distributing it. Yes, KDE produces source code that others distribute, but we self-distribute our apps on app stores like Flathub and the Snap and Microsoft stores, so I think it's a natural thing for us to have our own platform for doing that distribution too, and that's an operating system. I think all the major producers of free software desktop environments should have their own OS, and many already do: Linux Mint and ElementaryOS spring to mind, and GNOME is working on one too.

Besides, this matter was settled 10 years ago with the creation of KDE neon, our first bite at the "in-house OS" apple. The sky did not fall; everything was beautiful and nothing hurt.

Speaking of neon, Graham points out that it is "being held together by a heroic volunteer" (singular) and that no decision has been made as of yet about its future. Neon has "served admirably for a decade", he said, but it "has somewhat reached its limit in terms of what we can do with it" because of its Ubuntu base. According to the wiki page, neon's Ubuntu LTS base is built on old technology and requires "a lot of packaging busywork". It also becomes less stable as time goes on, "because it needs to be tinkered with to get Plasma to build on it, breaking the LTS promise".

Architecture and plans

KDE Linux, on the other hand, is designed to be a greenfield project that allows KDE to make use of newer technologies and more modern approaches to a Linux distribution unhampered by the needs of a general-purpose distribution. If KDE Linux's technology choices are not appealing, Graham says, "feel free to ignore KDE Linux and continue using the operating system of your choice. There are plenty of them!"

KDE Linux is Wayland-only; there is no X.org session and no plan to add one. Users with some of the older NVIDIA cards will need to manually configure the system to work properly with KDE Linux. The distribution also only supports UEFI systems, and there are no plans to add support for BIOS-only systems.

The root filesystem (/) is a read/write Btrfs volume, while /usr is a read-only Enhanced Read-Only File System (EROFS) volume backed by a single file. The system is updated atomically by swapping out the EROFS volume; currently KDE Linux caches up to five of the files to allow users to roll back to previous versions if the most recent updates are broken.

The files have names like kde-linux_202509082242.erofs and are stored in /system. The most recent releases are about 4.8GB in size. The project uses systemd-sysupdate under the hood, which does not have support for delta updates yet. Users should expect to set aside at least 30GB just to cache the EROFS files for now.
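As a rough illustration of the arithmetic above, the following Python sketch parses image names of the form shown, keeps the newest five, and estimates the disk space the cache needs. The helper names, the assumption that the numeric suffix is a `YYYYMMDDhhmm` build timestamp, and the pruning logic are illustrative guesses; this is not a description of how systemd-sysupdate actually manages the files.

```python
from datetime import datetime

IMAGE_SIZE_GB = 4.8   # approximate size of a recent image, per the article
MAX_CACHED = 5        # number of images KDE Linux currently keeps


def build_time(name: str) -> datetime:
    """Extract the build timestamp from a name like kde-linux_202509082242.erofs.

    Assumes the digits are YYYYMMDDhhmm; that is a guess based on the example name.
    """
    stem = name.removesuffix(".erofs")
    stamp = stem.split("_", 1)[1]
    return datetime.strptime(stamp, "%Y%m%d%H%M")


def images_to_keep(names: list[str], limit: int = MAX_CACHED) -> list[str]:
    """Return the newest `limit` images, newest first."""
    return sorted(names, key=build_time, reverse=True)[:limit]


def cache_size_gb(count: int = MAX_CACHED, size_gb: float = IMAGE_SIZE_GB) -> float:
    """Estimate the space needed to hold `count` full images (no delta updates)."""
    return count * size_gb
```

Five full images at roughly 4.8GB each come to about 24GB, which is consistent with the advice to set aside at least 30GB.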

Unlike Fedora's image-based Atomic Desktops, KDE Linux does not supply a way for users to add packages to the base system. So, for example, users have no way to add packages with additional kernel modules. Users can add applications packaged as Flatpaks using KDE's Discover graphical software manager. The Snap format is also supported, but it is not integrated with Discover; the snap command-line utility can be used to install Snaps for now. KDE Linux also includes Distrobox, which allows users to set up a container with the distribution of their choice and install software in the container that is integrated with the system. LWN touched on Distrobox in our coverage of the Bluefin image-based operating system in December 2023.

Unfortunately, it looks like users are not set up correctly for Podman, which Distrobox needs, on KDE Linux; trying to set up a new container gives a "potentially insufficient UIDs or GIDs available in user namespace" error when trying to test Distrobox on the latest KDE Linux build. This comment in the Podman repository on GitHub set me on the right path to fix the problem. This kind of bug is to be expected in an alpha release; no doubt it will be ironed out in the coming weeks or months.
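The error message suggests that the user has not been allocated subordinate user and group IDs, which rootless Podman relies on and which are listed in /etc/subuid and /etc/subgid. The article does not spell out the fix, so the following Python sketch only diagnoses the likely cause: it parses the `name:start:count` format of those files and reports how many subordinate IDs a user has. The function names and the 65,536 threshold are assumptions chosen for illustration.

```python
def subordinate_id_count(subid_text: str, user: str) -> int:
    """Sum the sizes of all ranges granted to `user` in subuid/subgid-style text.

    Each entry is expected to have the form name:start:count.
    """
    total = 0
    for line in subid_text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        fields = line.split(":")
        if len(fields) != 3:
            continue  # skip malformed lines rather than fail
        name, _start, count = fields
        if name == user and count.isdigit():
            total += int(count)
    return total


def has_enough_ids(subid_text: str, user: str, needed: int = 65536) -> bool:
    """Assumed threshold: 65,536 IDs is a common default allocation."""
    return subordinate_id_count(subid_text, user) >= needed
```

Passing the contents of /etc/subuid and then /etc/subgid to `has_enough_ids()`, along with the login name in question, would show whether an allocation is missing.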

System updates are also performed using Discover: when a new system image is available, it will show up in the Updates tab and can be installed from there. (Or using "sudo updatectl update" from the command line, for those who prefer doing it that way.) Likewise, installed Flatpaks with updates will show up in the Updates tab. For now, at least, users will have to manually manage any applications installed in a Distrobox container.

The default software selection is a good start for a desktop distribution; it includes the Gwenview image viewer, Okular document viewer, Haruna media player, Kate text editor, and Konsole for terminal emulation. Firefox is the only prominent non-KDE application included with the default install. The base system currently includes GNU Bash 5.3.3, curl 8.15, Linux 6.16.5, GCC 15.2.1, Perl 5.42, Python 3.13.7, Vim 9.1, and wget 1.25. It does not include some utilities users might want or expect, such as GNU Screen, Emacs, tmux, pip, or alternate shells like Fish.

KDE Linux's base packages are not meant to be user-customizable, but it should be possible to create custom images using systemd's mkosi tool, which is what is used by the project itself. The mkosi.conf.d directory in the KDE Linux repository contains the various configuration files for managing the packages included in the system image.

Development and what's next

The plan, longer term, is to have three editions of KDE Linux: the testing edition, which is what is available now, an enthusiast edition, and a stable edition. The testing edition is meant for developers and quality assurance folks; it is to be built daily from Git and to be similar in quality to KDE neon's unstable release. The enthusiast edition will include beta or released software, depending on the status of a given application at the time; this edition is aimed at "KDE enthusiasts, power users, and influencers". The stable edition, as the name suggests, will include only released software that meets quality metrics (which are not yet defined), indicating it's ready for users not in the other categories.

KDE Linux can be installed on bare metal or in a virtual machine using virt-manager. Support for UEFI Secure Boot is currently missing. Since KDE Linux uses a lot of space for cached images, users should provision more disk space for a virtual machine than they might ordinarily; I allocated 50GB, but probably should have gone with 75GB or more.

Those wishing to follow along with KDE Linux development can check out the milestone trackers for the enthusiast and stable editions. All of the milestones have been reached for the testing edition. There are quite a few items to complete before KDE Linux reaches beta status; for example, the project is currently using packages from the Arch User Repository (AUR) but the plan is to move away from using AUR soon. The project also needs to move production to official KDE infrastructure rather than depending on Sitter's personal systems.

At the moment, the project does not have a security announcement mailing list or other notification mechanism; those using KDE Linux for more than testing should keep an eye on Arch's security tracker and KDE security advisories. Since KDE Linux is an immutable derivative of Arch Linux, with no way to immediately pull updated Arch packages, users should remember that they will be at a disadvantage when there are security vulnerabilities in the base operating system. Any security update would need to be created by Arch Linux, pushed out as an Arch package, and then incorporated into a build for KDE Linux. Conservatively, that will add at least a day for any security updates to reach KDE Linux users.

One of the downsides of having no package manager is that there is no easy way to take stock of what is installed on the system. Normally, one might do an inventory of software using a package manager's query tools; a quick "rpm -qa" shows all of the system software on my desktop's Fedora 42 install. There is no such mechanism for KDE Linux, and it's not clear that there are any plans for that type of feature long term. To be suitable for some of the target audiences, KDE Linux will need (for example) ways to manage the base operating system and easily query what is installed.

The project's governance is described as a "'Council of elders' model with major contributors being the elders". Sitter has final decision-making authority in cases of disagreement.

Obviously the team working on KDE Linux wants the project to succeed, but it has put some thought into what will happen if the distribution is put out to pasture at some point. There is an end-of-life contingency plan to "push a final update shipping an OS image that transforms the system into a completely different distro". The successor distribution has not been chosen yet; it would be picked based on the KDE Linux team's relationship with the other distribution and its ability to take on all of the new users.

Part of the rationale for KDE Linux is to satisfy an impulse that is common to many open-source developers: the desire to ship software directly to users without an intermediary tampering with it. The process of creating and refining KDE Linux will satisfy that for KDE developers, but it may also serve another purpose: to demonstrate just how difficult it is to create and maintain a desktop distribution for widespread use. Whether KDE Linux succeeds as a standalone distribution or not, it may be a useful exercise to illustrate why projects like Debian, Fedora, openSUSE, Ubuntu, and others make choices that ultimately frustrate application developers.

Rug pulls, forks, and open-source feudalism

Like almost all human endeavors, open-source software development involves a range of power dynamics. Companies, developers, and users are all concerned with the power to influence the direction of the software — and, often, to profit from it. At the 2025 Open Source Summit Europe, Dawn Foster talked about how those dynamics can play out, with an eye toward a couple of tactics — rug pulls and forks — that are available to try to shift power in one direction or another.

Power dynamics

Since the beginning of history, Foster began, those in power have tended to use it against those who were weaker. In the days of feudalism, control of the land led to exploitation at several levels. In the open-source world, the large cloud providers often seem to have the most power, which they use against smaller companies. Contributors and maintainers often have less power than even the smaller companies, and users have less power yet.

![Dawn Foster [Dawn Foster]](https://static.lwn.net/images/conf/2025/osseu/DawnFoster-sm.png)

We have built a world where it is often easiest to just use whatever a cloud provider offers, even with open-source software. Those providers may not contribute back to the projects they turn into services, though, upsetting the smaller companies that are, likely as not, doing the bulk of the work to provide the software in question in the first place. Those companies can have a power of their own, however: the power to relicense the software. Pulling the rug out from under users of the software in this way can change the balance of power with regard to cloud providers, but it leaves contributors and users in a worse position than before. But there is a power at this level too: the power to fork the software, flipping the power balance yet again.

Companies that control a software project have the power to carry out this sort of rug pull, and they are often not shy about exercising it. Single-company projects, clearly, are at a much higher risk of rug pulls; the company has all the power in this case, and others have little recourse. So one should look at a company's reputation before adopting a software project, but that is only so helpful. Companies can change direction without notice, be acquired, or go out of business, making previous assessments of their reputation irrelevant.

The problem often comes down to the simple fact that companies have to answer to their investors, and that often leads to pressure to relicense the software they have created in order to increase revenue. This is especially true in cases where cloud providers are competing for the same customers as the company that owns the project. The result can be a switch to a more restrictive license aimed at making it harder for other companies to profit from the project.

A rug pull of this nature can lead to a fork of the project — a rebellious, collective action aimed at regaining some power over the code. But a fork is not a simple matter; it is a lot of work, and will fail without people and resources behind it. The natural source for that is a large company; cloud providers, too, can try to shift power via a fork, and they have the ability to back their fork up with the resources it needs to succeed.

A relicensing event does not always lead to a popular fork; that did not happen with MongoDB or Sentry, for example. Foster said she had not looked into why that was the case. Sometimes rug pulls take other forms, such as when Perforce, after acquiring Puppet in 2022, moved its development and releases behind closed doors, with a reduced frequency of releases back to the public repository. That action kicked off the OpenVox fork.

Looking at the numbers

Foster has spent some time analyzing rug pulls, forks, and what happens thereafter; a lot of the results are available for download as Jupyter notebooks. For each rug-pull event, she looked at the contributor makeup of the project before and after the ensuing fork in an attempt to see what effects are felt by the projects involved.

In 2021, Elastic relicensed Elasticsearch under the non-free Server Side Public License (SSPL). Amazon Web Services then forked the project as OpenSearch. Before the fork, most of the Elasticsearch contributors were Elastic employees; that, unsurprisingly, did not change afterward. OpenSearch started with no strong contributor base, so had to build its community from scratch. As a result, the project has been dominated by Amazon contributors ever since; the balance has shifted slowly over time, but there was not a big uptick in outside contributors even after OpenSearch became a Linux Foundation project in 2024. While starting a project under a neutral foundation can help attract contributors, she said, moving a project under a foundation's umbrella later on does not seem to provide the same benefit.

Terraform was developed mostly by HashiCorp, which relicensed the software under the non-free Business Source License in 2023. One month later, the OpenTofu fork was started under the Linux Foundation. While the contributor base for Terraform, which was almost entirely HashiCorp employees, changed little after the fork, OpenTofu quickly acquired a number of contributors from several companies, none of whom had been Terraform contributors before. In this case, users drove the fork and placed it under a neutral foundation, resulting in a more active developer community.

In 2024, Redis was relicensed under the SSPL; the Valkey fork was quickly organized, under the Linux Foundation, by Redis contributors. The Redis project differed from the others mentioned here in that, before the fork, it had nearly twice as many contributors from outside the company as from within; after the fork, the number of external Redis contributors dropped to zero. All of the external contributors fled to Valkey, with the result that Valkey started with a strong community representing a dozen or so companies.
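The kind of before-and-after comparison described in these three cases can be sketched in a few lines of Python. This is not Foster's analysis code; the function, the data layout, and the sample names and employers below are invented purely for illustration.

```python
from collections import Counter


def external_share(contributors: dict[str, str], owner: str) -> float:
    """Fraction of contributors not employed by the company that owns the project.

    `contributors` maps a contributor name to an employer name.
    """
    if not contributors:
        return 0.0
    by_employer = Counter(contributors.values())
    external = sum(n for employer, n in by_employer.items() if employer != owner)
    return external / len(contributors)


# Invented sample data: a project owned by the hypothetical "ExampleCorp".
before_fork = {"ana": "ExampleCorp", "bo": "OtherCo", "cy": "ThirdCo"}
after_fork = {"ana": "ExampleCorp"}

share_before = external_share(before_fork, "ExampleCorp")
share_after = external_share(after_fork, "ExampleCorp")
```

With this made-up data the external share falls from two-thirds to zero, which has the same shape as the Redis result described above; a real analysis would work from a project's commit history instead.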

Looking at how the usage of these projects changes is harder, she said, but there appears to be a correlation between the usage of a project and the number of GitHub forks (cloned repository copies) it has. There is typically a spike in these clones after a relicensing event, suggesting that people are considering creating a hard fork of the project. In all cases, the forks that emerged appeared to have less usage than the original by the "GitHub forks" metric; both branches of the fork continue to go forward. But, she said, projects that are relicensed do tend to show reduced usage, especially when competing forks are created under foundations.

What to do

This kind of power game creates problems for both contributors and users, she said; we contribute our time to these projects, and need them to not be pulled out from under us. There is no way to know when a rug pull might happen, but there are some warning signs to look out for. At the top of her list was the use of a contributor license agreement (CLA); these agreements create a power imbalance, giving the company involved the power to relicense the software. Projects with CLAs are more commonly subject to rug pulls; projects using a developer certificate of origin do not have the same power imbalance and are less likely to be rug pulled.

One should also look at the governance of a project; while being housed under a foundation reduces the chance of a rug pull, that can still happen, especially in cases where the contributors are mostly from a single company. She mentioned the Cortex project, housed under the Cloud Native Computing Foundation, which was controlled by Grafana; that company eventually forked its own project to create Mimir. To avoid this kind of surprise, one should look for projects with neutral governance, with leaders from multiple organizations.

Projects should also be evaluated on their contributor base; are there enough contributors to keep things going? Companies can help, of course, by having their employees contribute to the projects they depend on, increasing influence and making those projects more sustainable. She mentioned the CHAOSS project, which generates metrics to help in the judgment of the viability of development projects. CHAOSS has put together a set of "practitioner guides" intended to help contributors and maintainers make improvements within a project.
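
One way to put a number on the contributor-base question is an "elephant factor"-style count: the smallest number of organizations that together account for at least half of a project's commits. The following is only a minimal sketch under stated assumptions; the function name, the 50% threshold default, and the commit counts are all illustrative and are not taken from CHAOSS tooling or from Foster's talk.

```python
from collections import Counter


def elephant_factor(commits_by_org, threshold=0.5):
    """Smallest number of organizations covering `threshold` of all commits."""
    total = sum(commits_by_org.values())
    if total == 0:
        return 0
    covered = 0
    ranked = Counter(commits_by_org).most_common()  # largest contributors first
    for count, (_org, commits) in enumerate(ranked, start=1):
        covered += commits
        if covered >= threshold * total:
            return count
    return len(commits_by_org)


# Made-up commit counts for two hypothetical projects.
single_vendor = {"VendorCo": 900, "OrgB": 60, "OrgC": 40}
multi_vendor = {"OrgA": 300, "OrgB": 250, "OrgC": 250, "OrgD": 200}

print(elephant_factor(single_vendor))  # 1
print(elephant_factor(multi_vendor))   # 2
```

A result of 1 means that a single organization could halt most of the work by walking away, which is the situation the warning signs above are meant to catch.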

With the sustained rise of the big cloud providers, she concluded, the power dynamics around open-source software are looking increasingly feudal. Companies can use relicensing to shift power away from those providers, but they also take power from contributors when they pull the rug in this way. Those contributors, though, are in a better position than the serfs of old, since they have the ability to fork a project they care about, shifting power back in their direction.

Hazel Weakly asked if there are other protections that contributors and users might develop to address this problem. Foster answered that at least one company changed its mind about a planned relicensing action after seeing the success of the Valkey and OpenTofu forks. The ability to fork has the effect of making companies think harder, knowing that there may be consequences that follow a rug pull. Beyond that, she reiterated that projects should be pushed toward neutral governance. Dirk Hohndel added that the best thing to do is to bring more outside contributors into a project; the more of them there are, the higher the risk associated with a rug pull. Anybody who just sits back within a project, he said, is just a passenger; it is better to be driving.

Foster's slides are available for interested readers.

[Thanks to the Linux Foundation, LWN's travel sponsor, for supporting my travel to this event.]

The dependency tracker for complex deadlock detection

Deadlocks are a constant threat in concurrent settings with shared data; it is thus not surprising that the kernel project has long since developed tools to detect potential deadlocks so they can be fixed before they affect production users. Byungchul Park thinks that he has developed a better tool that can detect more deadlock-prone situations. At the 2025 Open Source Summit Europe, he presented an introduction to his dependency tracker (or "DEPT") tool and the kinds of problems it can detect.

![Byungchul Park [Byungchul Park]](https://static.lwn.net/images/conf/2025/osseu/ByungchulPark-sm.png)

Park began by presenting a simple ABBA deadlock scenario. Imagine two threads running, each of which makes use of two locks, called A and B. The first thread acquires A, then B, ending up holding both locks; meanwhile, the second thread acquires the same two locks but in the opposite order, taking B first. That can work, until the bad day when each thread succeeds in taking the first of its two locks. Then one holds A, the other holds B, and each is waiting for the other to release the second lock it needs. They will wait for a long time.

Deadlocks like this can be hard to reproduce, and thus hard to track down and fix; it is far better to detect the possibility of such a deadlock ahead of time. The kernel has a tool called "lockdep", originally written by Ingo Molnar, that can perform this detection. Whenever a thread acquires a lock, lockdep remembers that acquisition, along with the context — specifically, any other locks that are currently held. It will thus notice that, in the first case, A is acquired ahead of B. When lockdep observes the second thread acquiring the locks in the opposite order, it will raise the alarm. The problem can then be fixed, hopefully before this particular deadlock ever occurs on a production system.
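
The idea of remembering acquisition order can be illustrated with a toy model. This is a sketch only, not how lockdep is implemented: the class and method names are invented for illustration, and it checks only direct pairwise inversions rather than longer chains of locks.

```python
class LockOrderTracker:
    """Toy lock-order checker: records 'held -> acquired' pairs and flags inversions."""

    def __init__(self):
        self.order = set()  # (earlier, later) pairs observed so far
        self.held = {}      # thread name -> list of locks currently held

    def acquire(self, thread, lock):
        """Record an acquisition; return the list of inverted pairs, if any."""
        held = self.held.setdefault(thread, [])
        inversions = []
        for earlier in held:
            if (lock, earlier) in self.order:
                # Some thread has previously taken these two locks the other way.
                inversions.append((earlier, lock))
            self.order.add((earlier, lock))
        held.append(lock)
        return inversions

    def release(self, thread, lock):
        self.held[thread].remove(lock)


tracker = LockOrderTracker()
# Thread 1 takes A then B and releases both; no deadlock actually occurs.
tracker.acquire("t1", "A")
tracker.acquire("t1", "B")
tracker.release("t1", "B")
tracker.release("t1", "A")
# Thread 2 later takes B then A: the opposite order is reported immediately.
tracker.acquire("t2", "B")
print(tracker.acquire("t2", "A"))  # [('B', 'A')]
```

Note that the report is produced even though the two threads never actually collided, which is the point of detecting the possibility ahead of time.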

Park continued with a more complex example, presented in this form:

| Thread X | Thread Y | Thread Z |
|---|---|---|
| mutex_lock(A) | mutex_lock(C) | |
| | mutex_lock(A) | mutex_lock(C) |
| wait_for_event(B) | | |
| mutex_unlock(A) | mutex_unlock(C) | |
| | mutex_unlock(A) | mutex_unlock(C) |
| | | event B |

Thread Y, holding lock C, will be blocked waiting for A to become available. Thread Z, meanwhile, is blocked waiting for C. Thread X is waiting for some sort of event to happen; it is up to Z to produce that event, but that will never happen because Z is waiting on a chain of locks leading back to A, held by X. Park's point here is that deadlocks are not driven only by locks; other types of events can be part of deadlock scenarios as well. Lockdep, being focused on locks, is unable to detect these cases.

Park's DEPT tool, currently in its 16th revision, is meant to address this type of problem. The core idea behind DEPT is a new definition of a dependency; while lockdep sees dependencies as lock acquisitions, DEPT looks at events more generally. If one event occurs prior to another, DEPT will notice the dependency between them. In the example above, the release of A depends on the occurrence of B, which in turn depends on the release of C, which itself depends on the release of A. That is a dependency loop that can cause a deadlock; DEPT will duly report it.
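
The dependency loop described here can be found with an ordinary cycle search over a "depends on" graph. The sketch below is an illustration of that general technique, not DEPT's actual algorithm; the function name and the event labels are made up for this example.

```python
def find_cycle(depends_on):
    """Return one dependency cycle as a list of events, or None if there is none."""
    WHITE, GREY, BLACK = 0, 1, 2  # unvisited, on the current path, finished
    color = {}
    path = []

    def visit(node):
        color[node] = GREY
        path.append(node)
        for nxt in depends_on.get(node, ()):
            state = color.get(nxt, WHITE)
            if state == GREY:
                # nxt is already on the current path: a loop has been closed.
                return path[path.index(nxt):] + [nxt]
            if state == WHITE:
                found = visit(nxt)
                if found:
                    return found
        path.pop()
        color[node] = BLACK
        return None

    for start in depends_on:
        if color.get(start, WHITE) == WHITE:
            found = visit(start)
            if found:
                return found
    return None


# The three-thread example: each event cannot happen until the next one does.
deps = {
    "release A": ["event B"],
    "event B": ["release C"],
    "release C": ["release A"],
}
print(find_cycle(deps))
# ['release A', 'event B', 'release C', 'release A']
```

Because the graph nodes are events rather than locks, a wait that has nothing to do with a mutex fits into the same search.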

Back in 2023, kernel developers were beating their heads against a complex deadlock problem, and Linus Torvalds asked whether DEPT might be able to track the problem down. Using DEPT, Park was able to pinpoint the problem, which involved a wait on a bit lock; events involving bit locks are invisible to lockdep. Earlier this year, Andrew Morton was struggling with a similar problem:

Nostalgia. A decade or three ago many of us spent much of our lives staring at ABBA deadlocks. Then came lockdep and after a few more years, it all stopped. I've long hoped that lockdep would gain a solution to custom locks such as folio_wait_bit_common(), but not yet.

He, too, asked if DEPT could find this problem; once again, DEPT located the deadlock so that it could be fixed.

DEPT, Park said, has a number of benefits, starting with the fact that it uses a more generalized concept of dependencies rather than just looking at specific locks. It can handle situations involving folio locks, completions, or DMA fences that lockdep cannot. DEPT is also able to properly deal with reader-writer locks. It is, he said, less likely than lockdep to generate false-positive reports, and it is able to continue operating after the first report (unlike lockdep, which shuts down once a problem is found).

Lockdep, he allowed, is better in that it produces far fewer false-positive reports now; he noted that kernel developers have spent 20 years achieving that result. It will, though, miss some deadlock situations. In cases where the deadlock scenario is more complex than lockdep can handle, he concluded, DEPT should be used instead.

I could not resist asking why DEPT is not upstream after 16 revisions and more than three years (the first version was posted in February 2022). Park answered that the real source of resistance is the number of false positives. Lockdep is just a lot quieter.

Another attendee said that, since DEPT is a build-time option, it is hard to use with problems that affect production systems in the field; he suggested that perhaps an approach using BPF would be useful. Park agreed, but seemed to not have considered the BPF option until now; he said that he would look into it.

Finally, another audience member said that he did not think that the false-positive problem should block the merging of this code; he asked what the real problem is. Park did not answer that question, but declared that he would keep working on the code until he manages to get it upstream.

After the talk, with beer in hand, I asked a core-kernel developer about why DEPT has run into resistance. The answer was indeed false positives; the tool generates so many reports that it is difficult to get a real signal out of the noise. The root of the problem, they said, was that Park is attempting to replace lockdep all at once with a large patch set. Something like DEPT could be useful and welcome, but it would need to go upstream as a set of smaller changes that evolve lockdep into something with DEPT's view of dependencies, making the kernel better with every step. Until that approach is taken, attempts to merge DEPT into the mainline are unlikely to be successful.

The slides from Park's talk are available for interested readers.

[Thanks to the Linux Foundation, LWN's travel sponsor, for supporting my travel to this event.]

How many ways are there to configure the Linux kernel?

There are a large number of ways to configure the 6.16 Linux kernel. It has 32,468 different configuration options on x86_64, and a comparable number for other platforms. Exploring the ways the kernel can be configured is sufficiently difficult that it requires specialized tools. These show that the number of possible configurations has 6,550 digits. How has that number changed over the history of the kernel, and what does it mean for testing?

Analyzing Kconfigs

With so many configuration options to consider, it can be difficult to even figure out which options are redundant, unused, or otherwise amenable to simplification. For our problem of counting the number of ways to configure the kernel, we need to consider the relationships between different configuration options as well. Happily, there is some software to manage the growing complexity.

The kernel uses a custom configuration language, Kconfig, to describe how the build can be customized. While Kconfig has spread to a handful of related projects, it remains a mostly home-grown, Linux-specific system. Paul Gazzillo, along with a handful of other contributors, wrote kmax, a GPL-2.0-licensed software suite for analyzing Kconfig declarations. That project includes tools to determine which configuration options need to be enabled in order to test a patch, to find configuration bugs, and to export Kconfig declarations in a language that can be understood by more tools. It has been integrated into the Intel 0-day test suite, finding a number of bugs in the kernel's configuration in the process.

One of the project's programs, klocalizer, can output the set of relationships between different options in SMT-LIB 2 or DIMACS format — the standard input formats for modern SAT solvers. This makes it much simpler to write custom ad-hoc analysis of the kernel's configuration options. For example, one could use Z3's Python bindings to load the output from klocalizer and explore how options relate to one another. A SAT solver such as Z3 produces (when possible) a single solution to a set of constraints — in this case, a single valid way to configure the kernel.
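
To make the DIMACS idea concrete, here is a minimal sketch that parses a tiny CNF file and searches exhaustively for one satisfying assignment. It assumes plain DIMACS CNF input; the three options (FOO, BAR, BAZ) and their constraints are hypothetical, and the brute-force search only stands in for a real solver such as Z3, which would be needed for anything kernel-sized.

```python
from itertools import product


def parse_dimacs(text):
    """Parse DIMACS CNF text into (number of variables, list of clauses)."""
    num_vars, clauses, current = 0, [], []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("c"):
            continue  # blank line or comment
        if line.startswith("p"):
            num_vars = int(line.split()[2])  # "p cnf <vars> <clauses>"
            continue
        for token in line.split():
            literal = int(token)
            if literal == 0:  # 0 terminates a clause
                clauses.append(current)
                current = []
            else:
                current.append(literal)
    return num_vars, clauses


def satisfies(assignment, clauses):
    """assignment[i] holds the value of variable i + 1."""
    return all(
        any(assignment[abs(lit) - 1] == (lit > 0) for lit in clause)
        for clause in clauses
    )


def find_one_solution(num_vars, clauses):
    """Return the first satisfying assignment found, or None."""
    for assignment in product([False, True], repeat=num_vars):
        if satisfies(assignment, clauses):
            return assignment
    return None


# Hypothetical options: 1 = FOO, 2 = BAR (depends on FOO), 3 = BAZ.
EXAMPLE = """\
c BAR implies FOO, written as (not BAR or FOO)
p cnf 3 2
-2 1 0
c at least one of BAR or BAZ must be enabled
2 3 0
"""

num_vars, clauses = parse_dimacs(EXAMPLE)
print(find_one_solution(num_vars, clauses))  # (False, False, True)
```

The result is a single valid configuration (only BAZ enabled); it says nothing about how many other valid configurations exist.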

Calculating the number of valid solutions is a related but much harder problem called #SAT. While there are a number of approximation techniques that can be used to solve #SAT probabilistically, in this case the constraints in the kernel's configuration are simple enough for a precise counter called ganak to solve the problem exactly.
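
The difference between finding one solution and counting all of them shows up even at toy scale. The sketch below counts models by exhaustive enumeration, which is only feasible for a handful of variables; it is not how ganak works, and the four options and their constraints are hypothetical.

```python
from itertools import product


def count_models(num_vars, clauses):
    """Count assignments satisfying every clause (exhaustive, so tiny inputs only)."""
    count = 0
    for assignment in product([False, True], repeat=num_vars):
        if all(
            any(assignment[abs(lit) - 1] == (lit > 0) for lit in clause)
            for clause in clauses
        ):
            count += 1
    return count


# Four hypothetical options, numbered 1 to 4.
no_constraints = []
with_constraints = [
    [-2, 1],   # option 2 depends on option 1
    [-3, -4],  # options 3 and 4 exclude each other
]

print(count_models(4, no_constraints))    # 16
print(count_models(4, with_constraints))  # 9
```

Two small constraints already remove seven of the sixteen combinations; enumeration doubles in cost with every added option, which is why an exact counter is needed for tens of thousands of them.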

The numbers

So, with these tools in hand, one can analyze how many ways to configure the kernel there really are. The growth in the number of configuration parameters in the kernel has been roughly linear, leading to exponential growth in the number of ways the kernel can be configured. The following chart shows two trend lines: the logarithm of the actual number of ways to configure the kernel in blue, and the number of configuration options (scaled appropriately to sit on the same axis; see below) in red. The red line represents the theoretical maximum that the blue line could obtain. Plotting 6,000-digit numbers directly proved to be too much for both LibreOffice Calc and Google Sheets, hence the logarithms.

There are a few things that can be immediately seen from this chart. For example, there are more than twice as many configuration options available in version 6.16 compared to version 2.6.37 (the earliest version that kmax can understand). In some sense, this is not surprising — the Linux kernel keeps adding support for new features and new hardware; the number of configuration options is just growing along with the code. But there is a subtler trend that is arguably more important: configuration options are becoming more interdependent over time.

If every configuration option could be enabled independently of every other configuration option, there would be 2^N ways to configure the kernel given N configuration options. The red line is the number of configuration options scaled by ln(2)⁄ln(10); that is, it represents the logarithm of 2^N. That way the two lines are directly comparable. The actual number of ways to configure the kernel sits below this theoretical maximum because configuration options can depend on or exclude each other. This chart shows the difference between the red and blue lines:

Note that this result is still on a logarithmic scale, so this value is the logarithm of the number of ways to configure the kernel that are ruled out by dependencies between different options. As the difference grows, it shows that more configuration options are interacting with each other. This is not, in itself, a problem — but those interactions are likely associated with interdependent pieces of code. The number of places where kernel code depends on multiple related configuration options is growing.
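
The scaling can be checked with a few lines of arithmetic for the 6.16 figures quoted at the top of the article. This is a sketch that assumes the 32,468-option count and the 6,550-digit result describe the same x86_64 configuration, and that every option is treated as a simple on/off choice, as the 2^N bound does.

```python
import math


def digits_of_power_of_two(n):
    """Number of decimal digits in 2**n, computed via logarithms."""
    return math.floor(n * math.log10(2)) + 1


N_OPTIONS = 32_468     # x86_64 options in 6.16, as quoted in the article
ACTUAL_DIGITS = 6_550  # digits in the counted number of valid configurations

# The "red line" value: the option count scaled by ln(2)/ln(10).
upper_log10 = N_OPTIONS * math.log(2) / math.log(10)

print(round(upper_log10, 2))                              # 9773.84
print(digits_of_power_of_two(N_OPTIONS))                  # 9774
print(digits_of_power_of_two(N_OPTIONS) - ACTUAL_DIGITS)  # 3224
```

Under those assumptions, the unconstrained bound is a 9,774-digit number, so the gap between the two lines for 6.16 is roughly 3,224 orders of magnitude. Logarithms are used here rather than converting 2**32468 to a string because recent Python versions limit integer-to-string conversion to 4,300 digits by default.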

With the number of possible configurations, it is certain that not all of them have been tested individually. If the configuration options were independent, testing a kernel with everything enabled and one with everything disabled would theoretically suffice. Many options, especially ones that are specific to a particular hardware driver, are genuinely independent. But when options can influence each other and interact (even outside of Kconfig interactions), the number of scenarios that need to be tested grows quickly.

Linux's testing is largely distributed — it is done by individual patch contributors and reviewers, several different automatic unit testing and fuzzing robots, distribution QA teams, companies, and normal Linux users. With the decentralized nature of open source, and the large number of possible configurations, it's hard to tell exactly how many configurations are being covered. The kernels used by popular distributions are well-tested, but enabling other configuration options is a fast route into uncharted territory. The Linux kernel isn't going to stop getting new features — so the only real solution to the problem is to try to simplify existing configuration options when possible. The recent work to remove special uniprocessor support from the scheduler is an example of that.

Ultimately, among the many challenges of kernel development, this is not the most urgent or important. It is worth keeping an eye on, though, for the sake of the sanity of anyone trying to configure a kernel, if nothing else.

Testing the 2-in-1 Framework 12 Laptop

Framework Computer is a US-based computer manufacturer with a line of Linux-supported, modular, easily repairable and upgradeable laptops. In February, the company announced a new model, the Framework Laptop 12, an "entry-level" 12.2-inch convertible notebook that can be used as a laptop or tablet. The systems were made available for pre-order in April; I received mine in mid-August. Since then, I have been putting it through its paces with Debian 13 ("trixie") and Fedora Linux 42. It's a good choice for users who want a Linux-friendly, lightweight, 2-in-1 device, if they are willing to make a few concessions on storage capacity, RAM, and CPU/GPU choices.

The company

Framework is a relatively new company; it was founded in January 2020 with an eye to providing laptops that align with the right to repair movement. In an article for Make:, Nirav Patel, Framework's founder, said:

Some of the most advanced outputs of human civilization were built in ways that meant they would end up as expensive paperweights after a few years, or worse, part of the growing global e-waste crisis. This sounds like an impossibly large mission, but I started Framework to fix the consumer electronics industry.

Five years later, it's fair to say that the industry is still broken, but the Framework line of computers does at least provide an alternative to disposable devices that resist upgrades. Framework sells fully assembled laptops, do-it-yourself (DIY) laptop kits that require some assembly, and individual parts. For example, the company's first 13-inch model laptop shipped in mid-2021 with mainboards using 11th-generation Intel CPUs. Since then, Framework has shipped mainboards with 12th- and 13th-generation Intel CPUs, boards with AMD CPUs, and a RISC-V board that was developed by a third party.

Those who purchased the first-generation model can buy a newer mainboard and upgrade the system without needing to buy a whole new laptop—as long as they're willing to do the disassembly and reassembly. Framework has shipped upgrades for other parts too, such as the display, that users can swap in relatively easily.

Leftover parts need not wind up in the junk pile; the company sells third-party cases that hold just the mainboard for the 13-inch laptop. People who have upgraded their laptop can repurpose the old mainboards as a kind of mini PC. The company also publishes CAD designs and reference documentation using open licenses (3-clause BSD or Creative Commons Attribution 4.0) for the "key parts" of their laptops on GitHub. One enterprising person is selling a conversion kit on eBay to turn parts from the 13-inch laptop into an iMac-like desktop system.

Modular

Ordinarily, one is stuck with whatever ports the manufacturer has deemed necessary for a system, but Framework systems have expansion bays that take expansion cards instead. The cards are basically USB-C adapters for HDMI, DisplayPort, USB-A, Ethernet, or a 3.5mm audio input/output jack. There are also cards that simply act as a passthrough for USB-C, as well as cards that have 250GB or 1TB of storage. These are interchangeable—they work in all of the Framework laptops, the third-party case, and the company's desktop computer.

The USB-C ports also double as inputs to charge the laptop, so one of the ports will be occupied and unusable at least part of the time. Perhaps at some point the company will sell an expansion card that has two USB-C connectors: one for power and one for data. One can, of course, buy a dongle that serves this purpose, but an expansion card would be far more convenient.

Laptop 12

Framework's new 12-inch laptop follows the company's naming conventions for other systems. It is simply called the "Framework Laptop 12"; while descriptive, the name does lack a certain flair. It can be configured with either the Intel i3-1315U or i5-1334U, which were released in early 2023. These are a few generations behind current mobile CPUs from Intel, but that is to be expected; Framework is a small company, and it's going to take longer for it to design, develop, and manufacture a system than it would for a Dell or Lenovo. Currently there is no AMD mainboard option for this model.

The laptop has a choice of a variety of keyboard language layouts, including US English, British English, French, German, Belgian, Traditional Chinese, Czech/Slovak, and several others. There is not an option, at least currently, for backlit keyboards.

The company's release announcement talks about the Laptop 12 being for "students and young people", a demographic that is not well-known for being overly careful or gentle with laptops (or anything else, for that matter). I have seen firsthand the way teens and young adults treat personal computing devices; it's not pretty. This laptop is rigid plastic over metal, and it is supposed to be especially durable. The Laptop 12 does feel quite solid; the hinge seems well-designed for converting from laptop mode to tablet mode, and the plastic surface has a matte texture that's easy to keep a grip on. It's too early to say how it will hold up long-term, of course, but I have yet to encounter any problems.

To further appeal to the younger crowd, users can choose from one of five two-tone color schemes: black, grey, lavender, bubblegum, or (my choice) sage green. This is the company's first outing with color choices for laptops—previous models are a uniform metal grey.

The system's mainboard has a single memory slot that supports up to 48GB of DDR5 RAM, and a single M.2 2230 NVMe slot for storage. Most NVMe drives are the larger 2280 form factor, often referred to as a "gumstick" size. The 2230 is more of a gumdrop size; it is 30mm long, versus 80mm for the 2280. The maximum capacity for 2230 drives, at least currently, is 2TB.

As befits the "right to repair" ethos, users can purchase storage and RAM from the company or bring their own. When configuring the Laptop 12, I chose the DIY edition with the i5 CPU and supplied my own 48GB RAM stick and 1TB of NVMe storage, which was much cheaper than buying direct from Framework.

The rest of the system components are one-size-fits-all; the battery, WiFi, touchscreen display, and the rest are non-configurable. They are meant to be upgradeable or replaceable down the road, though. For instance, users may be able to swap in a WiFi 7 card at some point to replace the default WiFi 6E card. If the battery starts to lose capacity, it can be replaced, and so on. Note that the internal system components are generally system-specific; a WiFi card for the Laptop 12 is not usable with the 13, for instance.

The total cost of the base system, without expansion cards, RAM, or storage, was $774. The pre-built model is about $250 more than the DIY model, but it comes with Windows pre-installed, so much of that cost is the Windows license itself.

Supported distributions

Framework supports Linux, but it does not sell systems with it pre-installed, so DIY is the best choice unless one needs Windows. Its computers should work well with most Linux distributions, but the company has a list of distributions that are either officially supported or community supported. Official support means that Framework coordinates with the distribution, produces install guides for recent releases, and provides support-ticket assistance. Community support means that Framework publishes install guides provided by the distributions, and offers "best efforts assistance" through its community forums. If a user opens a ticket for a problem with Framework hardware with a community-supported distribution, they will likely be asked to test or reproduce the problem with an officially supported distribution.

Framework's Linux compatibility guide lists three distributions as officially supported for the 12-inch model: Bazzite, Fedora Linux 42, and Ubuntu 25.04. The company also lists four distributions as "community supported": Arch Linux, Bluefin, Linux Mint, and NixOS 25.05. It is interesting that three of the seven are, essentially, the same distribution—Bazzite and Bluefin are both maintained by the Universal Blue project, using Fedora packages as the base for each distribution. LWN covered Bluefin in December 2023.

DIY

The self-assembly option sounds far more difficult than it actually is; the DIY model arrives mostly assembled, but the system's keyboard cover is packed separately. The Framework packaging includes a QR code to scan for a URL to the quick start guide for assembly instructions. The system comes with a combination tool that has a double-sided bit with a Torx head and a Phillips head, with a spudger end for prying components apart.

All that is required to assemble the DIY model is to pop in the RAM and NVMe drive, then attach the input cover to the laptop chassis and screw it on. The screws are "captive"; they are meant to stay attached to the chassis, which is a plus for those of us with fat fingers and a tendency to lose small screws. Anyone who has put together IKEA furniture can certainly manage putting this together.

The Laptop 12 is actually my second Framework laptop; I bought a Laptop 13 last year. The 13-inch model requires users to attach a plastic bezel part that is somewhat fiddly to place on the system. The Laptop 12's bezel is already attached. That model was easy to put together, but the 12 is even easier. I didn't even bother to pull up the manual this time around—everything was fully self-explanatory. From start to finish, the assembly took about 10 minutes. Add in time to install Linux, and it might have taken as much as 35 minutes from opening the box to using the computer.

User experience

So far I've tested the laptop with Debian 13 ("trixie") and Fedora 42 extensively, and installed a development release of openSUSE Leap 16 on a 250GB expansion card. I haven't spent much time in openSUSE yet, but it was clear that the external storage card was fast enough to be used to host the operating system. It might be a good option for folks who want to run Tails OS in addition to another Linux distribution.

Most of the time when I am using a laptop, it sits on a riser connected by USB-C to a 38-inch Dell display and an external keyboard and mouse. There is little of interest to report when using the Laptop 12 in that mode; everything works as expected, and it performs well enough for basic desktop usage as well as light gaming. I'm not much of a gamer, but I tested it a bit with SuperTuxKart and OpenArena; the performance was more than adequate for older 3D games.

While the Laptop 12 is a tiny machine, it features a full-size keyboard layout, and a 4.5-inch-wide trackpad.

People have strong opinions about keyboards; what constitutes a good keyboard for one person can be terrible for another person. That said, I've found this one to be enjoyable to use, particularly compared to most of the recent-model laptop keyboards that I've tried. The keys have good travel, and are properly spaced. It is much like typing on the ThinkPad X280 12-inch laptop that I have kept around for conferences, just because I prefer its keyboard to that of the 13-inch Framework laptop.

The Laptop 12 is also a travel-friendly weight. It weighs in at 1.3kg, while a current 13-inch MacBook Air is just a bit lighter at 1.24kg. It is a little heavy as a tablet, more than twice the weight of a 13-inch iPad Pro, but still quite usable in my opinion.

One of the things that I usually have problems with when typing on laptop keyboards is triggering the trackpad with the base of my thumb; that is especially true with Framework's 13-inch system, and it appears others have the same problem. The Laptop 12 has different touchpad hardware, with a matte mylar surface rather than the matte glass surface of the touchpad used with the 13. It is also slightly smaller, and placed a little bit farther from the keyboard than the trackpad on the 13. So far, I've found the palm-rejection to be far better on the Laptop 12 than the 13, though I'm not sure if that's due to its hardware or placement. Both touchpads report as a PixArt PIXA3854, so it does not appear to be a driver issue.

Since the system doubles as a tablet computer, users will likely want to have a stylus to use with it. The touchscreen display supports the Microsoft Pen Protocol (MPP) 2.0 and Universal Stylus Initiative (USI) 2.0, but not at the same time. By default, the touchscreen is set to MPP, but this can be changed in the UEFI settings. Framework is supposed to release a USI 2.0 stylus with two programmable buttons "soon", but it is not yet available for purchase. I bought two third-party styluses, a Metapen Surface Pen M2 that supports MPP 2.0 and a Metapen G1 that supports USI 2.0.

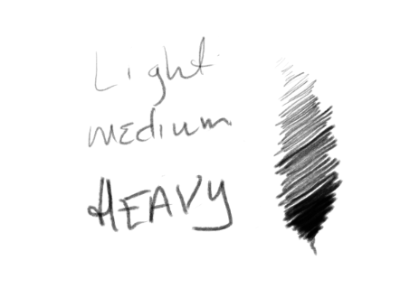

Both work well with the tablet, but I would recommend the M2 because it has a few features that the G1 does not. Namely, turning the pen over toggles eraser mode in Xournal++ and Krita. It also has a button that acts as a right-click mouse button. I've done some writing in Xournal++ and some doodling in Krita. I've been pleased with the experience of writing in Xournal++; my handwriting looks just as good (or bad) when taking notes on the Laptop 12 as when I take notes on paper. In my Krita experiments, I've found the pressure sensitivity to be pretty good as well; it allows drawing faint, sketchy lines, medium lines, or thick, bold lines depending on how hard one applies the stylus tip to the screen. It's not quite a substitute for drawing on paper, but it's good enough for my purposes.

It's possible that the official Framework stylus will provide a better experience than the third-party equipment I tried, but impatient users can get something that works right away.

The palm rejection for drawing on the display is good, but not perfect. I've had to be careful when drawing in Krita, lest I unintentionally draw lines with the side of my hand. To be fair, I have the same problem when working with graphite or charcoal on paper, but it's far easier to clean up on the laptop.

The power-button placement is one of the few design choices that seems poorly thought out. It is on the right-hand side of the laptop, near the port bays, rather than on the keyboard as with the 13-inch model. The power button is badly placed and fairly sensitive; it takes little pressure to trigger it, making it far too easy to accidentally hit the button and put the laptop into suspend mode. The first day of testing, I managed to press the power button accidentally at least three times.

There are a few rough edges when it comes to display orientation. For example, in GNOME and KDE, the display rotates as one might expect when using the system in tablet mode; the camera, on the other hand, has no clue about the laptop's orientation. If one joins a video call while the laptop is rotated 180 degrees, then the video feed is upside down. I've noticed that, when it's connected to an external monitor, the laptop display does not always rotate as expected. That can be a minor hassle if using it as a drawing pad while connected to another monitor. It is not a huge problem, but it would have been nice if it worked automatically.

On the topic of the laptop's camera: all Framework laptops have built-in hardware switches to turn the camera and/or microphone off. The design is a little confusing, in that when the switch is toggled to "off" it reveals a red dot that signifies that it is off. Normally, one would expect red to indicate that a camera or microphone is live rather than indicating that it is off. Despite the color confusion, this is a useful privacy feature that should be present on every laptop.

Battery life is decent; it has a 50Wh battery, and in a few weeks of testing I've found that it's good for six or seven hours of moderate use when set to "balanced" mode. It is also possible to configure the laptop to only charge to a certain percentage, say 85%, when it is frequently connected to AC power. This is supposed to help reduce wear and extend the battery life.

While much of the system's design is open, including the embedded controller firmware, it uses a proprietary UEFI BIOS from Insyde Software. There has been discussion in the Framework community forum about using or supporting coreboot, but so far little progress has been made. Apparently, Matthew Garrett had a go at porting coreboot to the machine in 2022 but had little luck.

The laptop's speakers are so-so, a bit tinny without much punch to the bass; they are definitely not speakers that will please audiophiles. They are adequate for watching shows or movies via streaming services, for example, but the laptop would not make a good system exclusively for listening to music.

I've also spent a bit of time with KDE Plasma Mobile and Phosh while using the device in tablet mode. Those interfaces seem to be targeted more at phones than at tablets, though; standard KDE and GNOME have worked well enough that I will probably stick with one or the other when using it as a tablet.

Final thoughts

When I got the notification in August that the system was about to ship, I nearly canceled the order since I didn't really need a new laptop. After a few weeks with it, I'm glad that I did not; I really enjoy having a single system that can serve as a desktop, laptop, and tablet. It has less RAM and fewer CPU cores than the 13-inch Framework I've been using, but I don't really notice the difference.

One downside of Framework being a small company is that it seems to be operating without a lot of stock on hand. Even though its systems are meant to be repaired and upgraded, the parts that one might need are not always in stock. For example, the 13-inch laptop's WiFi antenna module is out of stock, and a number of other replacement parts are "coming soon" in Framework's store. I expect that all of these parts will be restocked eventually, but there may be some waiting involved if one needs to replace a part. That does beat not being able to replace parts at all, however.

Folks who are in the market for a new Linux laptop might want to consider this model. It is worth noting that, currently, Framework is still shipping out pre-orders from earlier this year; there will likely be a wait of a month or more for any orders placed now, which might be a problem if one requires a new laptop immediately.

Page editor: Jonathan Corbet

![ISS exploded view](https://static.lwn.net/images/2025/elce-iss-explode-sm.png)

![ISS interior view](https://static.lwn.net/images/2025/elce-iss-interior-sm.png)

![KDE Linux desktop](https://static.lwn.net/images/2025/kde-linux-alpha-sm.png)

![A chart of the number of possible configurations versus version](https://static.lwn.net/images/2025/configurations-chart.png)

![A chart showing the difference between actual and theoretical numbers of configurations](https://static.lwn.net/images/2025/configurations-chart-diff.png)

![Framework 12 Keyboard](https://static.lwn.net/images/2025/framework-keyboard.png)