Leading items

Welcome to the LWN.net Weekly Edition for May 15, 2025

This edition contains the following feature content:

- A kernel developer plays with Home Assistant: general impressions: a look at what the Home Assistant home-automation software has to offer.

- The last of YaST?: SUSE is pulling the plug on YaST development—will the community pick it up?

- Faster firewalls with bpfilter: speeding up packet filtering with BPF.

- The future of Flatpak: Sebastian Wick discusses the state of the Flatpak project, and a vision for its future.

- More LSFMM+BPF 2025 coverage:

- A FUSE implementation for famfs: John Groves led a discussion about famfs and whether its use of the kernel's direct-access (DAX) mechanism could be replaced with other kernel features.

- A look at what's possible with BPF arenas: BPF developers discuss how to store kernel pointers in BPF arenas.

This week's edition also includes these inner pages:

- Brief items: Brief news items from throughout the community.

- Announcements: Newsletters, conferences, security updates, patches, and more.

Please enjoy this week's edition, and, as always, thank you for supporting LWN.net.

A kernel developer plays with Home Assistant: general impressions

Those of us who have spent our lives playing with computers naturally see the appeal of deploying them through the home for both data acquisition and automation. But many of us who have watched the evolution of the technology industry are increasingly unwilling to entrust critical household functions to cloud-based servers run by companies that may not have our best interests at heart. The Apache-licensed Home Assistant project offers a welcome alternative: locally controlled automation with free software. This two-part series covers roughly a year of Home Assistant use, starting with a set of overall observations about the project.

This is not the first time that LWN has looked at this project, of course; this review gives a snapshot of what Home Assistant looked like five years ago, while this 2023 article gives a good overview of the project's history, governance, and overall direction. I will endeavor to not duplicate that material here.

Project health

At a first glance, Home Assistant bears some of the hallmarks of a company-owned project. The company in question, Nabu Casa, was formed around the project and employs a number of its key developers. One of the ways in which the company makes money is with a $65/year subscription service, providing remote access to Home Assistant servers installed on firewalled residential networks. Home Assistant has support for that remote option, and no others. It would be interesting to see what would happen to a pull request adding support for, say, OpenThings Cloud as an alternative. The fate of that request would say a lot about how open the project really is.

(For the record, I have bought the Nabu Casa subscription rather than, say, using WireGuard to make a port available on an accessible system; it is a hassle-free way to solve the problem and support the development of this software.)

That said, most of the warning signs that accompany a corporate-controlled project are not present with Home Assistant. The project's contributor license agreement is a derivative of the kernel's developer certificate of origin; contributors retain their copyright on their work. Since the 2024.4 release, the Home Assistant core repository has acquired over 17,000 changesets from over 900 contributors. While a number of Nabu Casa employees (helpfully listed on this page) appear in the top ten contributors, they do not dominate that list.

Home Assistant is clearly an active project with a wide developer base. In 2024, overall responsibility for this project was transferred to the newly created Open Home Foundation. This project is probably here to stay, and seems unlikely to take a hostile turn in the future. For a system that sits at the core of one's home, those are important characteristics.

Installation and setup

Linux users tend to be somewhat spoiled; installing a new application is typically a matter of a single package-manager command. Home Assistant does not really fit into that model. The first three options on the installation page involve dedicated computers — two of which are sold by Nabu Casa. For those wanting to install it on a general-purpose computer, the recommended course is to install the Home Assistant Operating System, a bespoke Linux distribution that runs Home Assistant within a Docker container. There is also a container-based method that can run on another distribution, but this installation does not support the add-ons feature.

Home Assistant, in other words, is not really set up to be just another application on a Linux system. If one scrolls far enough, though, one will find the instructions to install onto a "normal" Linux system, suitably guarded with warnings about how it is an "advanced" method. Of course, that is what I did, putting the software onto an existing system running Fedora. The whole thing subsequently broke when a distribution upgrade replaced Python, but that was easily enough repaired. As a whole, the installation has worked as expected.

Out of the box, though, a new Home Assistant installation does not do much. Its job, after all, is to interface with the systems throughout the house, and every house is different. While Home Assistant can find some systems automatically (it found the Brother printer and dutifully informed me that the device was, inevitably, low on cyan toner), it usually needs to be told about what is installed in the house. Thus, the user quickly delves into the world of "integrations" — the device drivers of Home Assistant.

For each remotely accessible device in the house, there is, hopefully, at least one integration available that allows Home Assistant to work with it. Many integrations are packaged with the system itself, and can be found by way of a simple search screen in the Home Assistant web interface. A much larger set is packaged separately, usually in the Home Assistant Community Store, or HACS; it is fair to say that most users will end up getting at least some integrations from this source. Setting up HACS requires a few steps and, unfortunately, requires the user to have a GitHub account for full integration. It is possible to install HACS integrations without that account, but it is a manual process that loses support for features like update tracking.

Most integrations, at setup time, will discover any of the appropriate devices on the network — if those devices support that sort of discovery, of course. Often, using an integration will require the credentials to log into the cloud account provided by the vendor of the devices in question. When possible, integrations mostly strive to operate entirely locally; some only use the cloud connection for the initial device discovery. When there is no alternative, though, integrations will remain logged into the cloud account and interact with their devices that way; this mode may or may not be supported (or condoned) by the vendor. There are, of course, some vendors that are actively hostile to integration with Home Assistant.

As might be expected, the quality of integrations varies widely. Most of the integrations I have tried have worked well enough. The OpenSprinkler (reviewed here in 2023) integration, instead, thoroughly corrupted the device configuration, exposing me to the shame of being seen with a less-than-perfect lawn; it was quickly removed. It is an especially nice surprise when a device comes with Home Assistant support provided by the vendor, but that is still a relatively rare occurrence. Home Assistant now is in a position similar to Linux 25 years ago; many devices are supported, but often in spite of their vendor, and one has to choose components carefully.

Security

Home Assistant sits at the core of the home network; it has access to sensors that can reveal a lot about the occupants of the home, and it collects data in a single location. An installation will be exposed to the Internet if its owner needs remote access. There is clearly potential for a security disaster here.

The project has a posted security policy describing the project's stance; it asks for a 90-day embargo on the reporting of any security issues. Authors writing about the project's security are encouraged to run their work past the project "so we can ensure that all claims are correct". The security policy explicitly excludes reports regarding third-party integrations (the core project cannot fix those, after all). The project is also uninterested in any sort of privilege escalation by users who are logged into Home Assistant, assuming that anybody who has an account is fully trusted.

The project has only issued one security advisory since the beginning of 2024. There were several in 2023, mostly as the result of a security audit performed by GitHub.

There is no overall vetting of third-party integrations, which are, in the end, just more Python code. So loading an unknown integration is similar to importing an unknown module from PyPI; it will probably work, but the potential for trouble is there. The project has occasionally reported security problems in third-party integrations, but such reports are rare. I am unable to find any reports of actively malicious integrations in the wild, but one seems destined to appear sooner or later.

Actually doing something with Home Assistant

The first step for the owner of a new Home Assistant installation is, naturally, to seek out integrations for the devices installed in the home. On successful installation and initialization, an integration will add one or more "devices" to the system, each of which has some number of "sensors" for data it reports, and possible "controls" to change its operating state. A heat-pump head, for example, may have sensors for the current temperature and humidity, and controls for its operating mode, fan speed, vane direction, and more.

It is worth noting that the setup of these entities seems a bit non-deterministic at times. My solar system has 22 panels with inverters, each of which reports nearly a dozen parameters (voltage, current, frequency, temperature, etc.). There is no easy way to determine which panel is reporting, for example, sensor_amps_12, especially since sensor_frequency_12 almost certainly corresponds to a different panel. My experience is that Home Assistant is a system for people who are willing to spend a lot of time fiddling around with things to get them to a working state. Dealing with these sensors was an early introduction to that; it took some time to figure out the mapping between names and rooftop positions, then to rename each sensor to something more helpful.

The next level of fiddling around is setting up dashboards. Home Assistant offers a great deal of flexibility in the information and controls it provides to the user; it is possible to set up screens focused on, say, energy production or climate control. Happily, the days when this configuration had to be done by writing YAML snippets are mostly in the past at this point; one occasionally still has to dip into YAML, but it does not happen often. The interface is not always intuitive, but it is fairly slick, interactive, and functional.

Another part of Home Assistant that I have not yet played with much is automations and scenes. Automations are simple rule-triggered programs that make changes to some controls. They can carry out actions like "turn on the front light when it gets dark" or "play scary music if somebody rings the doorbell and nobody is home". Scenes are sets of canned device configurations. One might create a scene called "in-laws visiting" that plays loud punk music, sets the temperature to just above freezing, disables all voice control, and tunes all of the light bulbs to 6000K, for example.
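To make the distinction concrete, here is a minimal sketch of the two ideas in plain Python. It does not use Home Assistant's actual API or configuration format; the `Automation` and `Scene` classes, the entity names, and the `state` dictionary are all hypothetical stand-ins chosen for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Dict

State = Dict[str, object]  # hypothetical store: entity name -> current value


@dataclass
class Scene:
    """A canned set of device settings, applied all at once."""
    name: str
    settings: State

    def activate(self, state: State) -> None:
        state.update(self.settings)


@dataclass
class Automation:
    """A rule: when the trigger holds for the current state, run the action."""
    name: str
    trigger: Callable[[State], bool]
    action: Callable[[State], None]

    def evaluate(self, state: State) -> bool:
        if self.trigger(state):
            self.action(state)
            return True
        return False


def turn_on_front_light(state: State) -> None:
    state["light.front"] = "on"


# "Turn on the front light when it gets dark."
front_light = Automation(
    name="front light at dusk",
    trigger=lambda s: s["sun.elevation"] < 0,
    action=turn_on_front_light,
)

# The "in-laws visiting" scene, minus the punk music.
in_laws = Scene(
    name="in-laws visiting",
    settings={
        "climate.target_c": 1,        # just above freezing
        "voice.enabled": False,
        "light.color_temp_k": 6000,
    },
)

state: State = {"sun.elevation": -3.0, "light.front": "off"}
fired = front_light.evaluate(state)
in_laws.activate(state)
```

The point of the split is that an automation reacts to a condition, while a scene is only a bundle of settings that something else (a person or an automation) chooses to apply.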

The good news is that, unless the fiddling itself is the point (and it can be a good one), there comes a time when things just work and the fiddling can stop. A well-configured Home Assistant instance provides detailed information about the state of the home — and control where the devices allow it — to any web browser that can reach it and log in. There are (open-source) apps that bring this support to mobile devices in a way that is nearly indistinguishable from how the web interface works.

All told, it is clear why Home Assistant has a strong and growing following. It is an open platform that brings control to an industry that is doing its best to keep a firm grasp on our homes and the data they create. Home Assistant shows that we can do nicely without all of these fragile, non-interoperable, rug-pull-susceptible cloud systems. Just like Linux proved that we can have control over our computers, Home Assistant shows that we do not have to surrender control over our homes.

This article has gotten long, and is remarkably short on interesting things that one can actually do with Home Assistant. There are some interesting stories to be told along those lines; they will appear shortly in the second, concluding part of this series.

The last of YaST?

The announcement of the openSUSE Leap 16.0 beta contained something of a surprise: along with the usual set of changes and updates, it informed the community of the retirement of "the traditional YaST stack" from Leap. The YaST ("Yet another Setup Tool") installation and configuration utility has been a core part of the openSUSE distribution since its inception in 2005, and part of SUSE Linux since 1996. It will not, immediately, be removed from the openSUSE Tumbleweed rolling-release distribution, but its future is uncertain and its fate is up to the larger community to decide.

YaST has undergone a number of revisions and rewrites over the years. The original YaST was replaced by YaST2 in 2002, and the project was released as open source, under the GPLv2, in 2004. YaST features graphical and text-based user interfaces, so it can be used on the desktop or via the shell. "Setup Tool" undersells what YaST does by a significant margin. It is the system installer, used for both interactive and unattended installations. It is also a tool for software management and performs a wide variety of system-management tasks—including user management, security configuration, and setting up printers.

For many years, YaST was implemented in its own programming language, YCP, which was phased out and mostly replaced with Ruby around 2013 as part of the YCP Killer project. Currently, YaST is written in Ruby, with some of the graphical stack written in C, as well as some legacy bits in Perl and YCP. As the contributing page for the project notes, it is a "complex system consisting of several components and modules". Each of YaST's functions, such as managing users, installing software, and configuring security settings, is implemented as a module. The project's GitHub organization has more than 240 repositories.

The decision to move away from YaST was not well-advertised for such a major component of the distribution. On October 7, 2024, Josef Reidinger replied to a question about the YaST:Devel repository with information about plans to develop YaST for SUSE Linux Enterprise Server (SLES) 16. There was, at that time, little indication that SUSE developers were thinking about dropping YaST from SLE 16 or Leap.

There was a mention of a YaST "stack reduction" in the openSUSE Release Engineering minutes for November 27. The minutes note that YaST modules would be reconsidered on a "per use case basis". They also linked to a features ticket for openSUSE Leap, opened by Luboš Kocman in October 2024, that said SUSE's product management "do not want any YaST in SLES 16". It seems unlikely, however, that many openSUSE users or contributors would have discovered this.

In January, Andreas Jaeger wrote a blog post about plans for SLES 16, which contained a short section saying that YaST was being put into maintenance mode, though it would continue to be fully supported for the life of SLES 15. According to the SUSE lifecycle page, general support for SLES 15 will end in 2031.

Agama and YaST replacements

Even though the SUSE team has been relatively quiet about the intent to retire YaST, the Agama installer project did foreshadow the move. In 2022, the YaST developers started working on a new installer under the provisional name D-Installer, and the project was eventually named Agama after a type of lizard. The stated goal of this work was to decouple the user interface from YaST's internals, add a web-based user interface that would allow remote installation of the distribution, and more. That work progressed well enough that Agama was tapped to replace YaST as the installer for openSUSE Leap 16's alpha release. LWN covered Agama's development in August 2024.

At the time, the team was open about the fact that YaST was showing its age. That was part of the reason that they embarked on a from-scratch effort to replace it as the installer. However, Agama was more limited in scope than YaST as an installer—it lacked support for some of the AutoYaST unattended deployment features, for example—and there was no indication that the team was considering replacing YaST altogether. All signs pointed to the two tools coexisting.

With the release of Agama 10, the Agama team announced a standalone documentation site for the project and started a new blog for Agama-related news in October 2024 "to avoid confusion between YaST and Agama". Since then, the YaST blog has gone silent.

The Leap 16 beta announcement was the first public note that explicitly stated the new direction for openSUSE. It said that YaST had been retired in favor of Agama, Cockpit for system management, and Myrlyn (formerly YQPkg) for package management. (LWN looked at Cockpit in March 2024.) The announcement also noted that YaST would continue to be available in Tumbleweed but would no longer be developed by SUSE. "If someone is interested in the maintenance of YaST for further development and bugfixes, the sources are available on github."

The future

I emailed several openSUSE contributors about the YaST retirement and received a reply from Douglas DeMaio. He acknowledged that earlier communication about Agama suggested that YaST would continue to be used as a management tool, but "the pivot toward deprecation came as Agama and Myrlyn progressed and were deemed more future-proof".

DeMaio said that there were a few reasons that the change is happening in Leap 16. One is that Agama and Cockpit are "modern, modular, and API-driven", which makes them better suited to the multi-distribution environments that users are working in. Adopting Cockpit, he said, "helps reduce fragmentation and brings alignment with other Linux distributions"; that will make life easier for developers and users alike. The other is that YaST's code base is large and complex, and it was decided it would be more practical to design a new, modular structure from the ground up.

He said that no standalone announcements have gone out to the YaST development list or openSUSE's Factory development list because YaST still exists in Tumbleweed. It will be phased out slowly, and YaST packages may be deprecated or picked up by the community "based on interest, available maintainers, and whether there's a clear use case or demand within the community to keep them alive".

Absent any community effort to maintain YaST, it will likely degrade in place until it is removed entirely from Tumbleweed. Dominique Leuenberger said on the openSUSE Factory mailing list that there are already components that no longer work, and more are likely to break soon.

What's likely to hit a big nail in the coffin will be the next Ruby version update: we've seen every year that the update needed 'some work' on the YaST code base. Without anybody looking after it, there is a big chance things will break by then.

Ruby has one major release a year, on December 25. Thus, Tumbleweed users have more than six months before Ruby-related breakage starts to set in.

It would be hard to argue against SUSE's decision to move away from YaST in favor of more modern tooling. At one time, YaST was a selling point for the distributions—it provided a one-stop shop for system management and configuration that was user friendly. Now, however, much of its functionality is present in more modern tools like Cockpit or the configuration tools included with GNOME and KDE. It makes sense to adopt the healthy upstream projects rather than to continue to develop a distribution-specific tool that offers little functionality not found elsewhere.

But the lack of communication about its retirement, even though it was clearly being discussed behind closed doors last year, is unfortunate. If there are openSUSE community members passionate about maintaining YaST, they could have benefited from an early warning about YaST's deprecation. Perhaps openSUSE could take a cue from Fedora's change process, for example, to ensure that major changes are communicated early and the community is given an opportunity to weigh in. If there are no willing maintainers to be found, openSUSE Tumbleweed users would no doubt still appreciate clear communication about the change rather than a slow trickle of information.

YaST may have outlived its usefulness, but it served the SUSE community well for decades and made Linux more approachable for many users. It deserves a better sendoff than a slow fade into obscurity.

Faster firewalls with bpfilter

From servers in a data center to desktop computers, many devices communicating on a network will eventually have to filter network traffic, whether it's for security or performance reasons. As a result, this is a domain where a lot of work is put into improving performance: a tiny performance improvement can yield considerable gains. Bpfilter is a project that allows for packet filtering to easily be done with BPF, which can be faster than other mechanisms.

Iptables was the standard packet-filtering solution for a long time, but has been slowly replaced by nftables. The iptables command-line tool communicates with the kernel by sending/receiving binary data using the setsockopt() and getsockopt() system calls. Then, for each network packet, iptables will compare the data in the packet to the matching criteria defined in a set of user-configurable rules. Nftables was implemented differently: the filtering rules are translated into Netfilter bytecode that is loaded into the kernel. When a network packet is received, it is processed by running the bytecode in a dedicated virtual machine.

Nftables was in turn supplanted in some areas by BPF: custom programs written in a subset of the C language, compiled and loaded into the kernel. BPF, as a packet-filtering solution, offers a lot of flexibility: BPF programs can have access to the raw packet data, allowing the packet to continue its path through the network stack, or not. This makes sense, since BPF was originally intended for packet filtering.

Each tool described above tries to solve the same problem: fast and efficient network-traffic filtering and redirection. They all have their advantages and disadvantages. One generally would not write BPF C code to filter traffic on a laptop; there, it makes more sense to trade the performance of BPF for the ease of use of iptables and nftables. In the professional world, though, it can be worth the effort to write the packet-filtering logic in BPF to get as much performance as possible out of the network hardware, at the expense of maintainability.

Based on this observation, one might think we would welcome an alternative that combines the ease of use of nftables and performance of BPF. This is where bpfilter comes in. In 2018, Alexei Starovoitov, Daniel Borkmann, and David S. Miller proposed bpfilter as a way to transparently increase iptables performance by translating the filtering rules into BPF programs directly in the kernel ... sort of. Bpfilter was implemented as a user-mode helper, a user-space process started from the kernel, which allows for user-space tools to be used for development and prevents the translation logic from crashing the kernel.

A proof of concept was eventually merged, and the work on bpfilter continued in a first and second patch series by Dmitrii Banshchikov, and a third patch series that I wrote. In early 2023, Starovoitov and I decided to make bpfilter a freestanding project, removing the user-mode helper from the kernel source tree.

Technical architecture

From a high-level standpoint, bpfilter (the project) can be described as having three different components:

- bpfilter (the daemon): generates and manages the BPF programs to perform network-traffic filtering.

- libbpfilter: a library to communicate with the daemon.

- bfcli: a way to interact with the daemon from the command line. That's the tool one would use to create a new filtering rule.

To understand how the three components interact, we'll follow the path of a filtering rule, from its definition by the user, to the filtered traffic.

bfcli uses a custom domain-specific language to define the filtering rules (see the documentation). The command-line interface (CLI) is responsible for taking the rules defined by the user and sending them to the daemon. bfcli was created as a way to easily communicate with the daemon, so new features can be tested quickly without the requirement for a dedicated translation layer. Hence, bfcli supports all of the features bpfilter has to offer.

It's also possible to use iptables, as long as it integrates the patch provided in bpfilter's source tree. This patch adds support for bpfilter (using the --bpf option), so the rules are sent to bpfilter to be translated into a BPF program instead of sending them to the kernel. Currently, when used through the iptables front end, bpfilter supports filtering on IPv4 source and destination address, and IPv4 header protocol field. Modules are not yet supported.

Nftables support is a bit more complicated. A proof of concept was merged last year, but bpfilter has evolved a lot since then to introduce new features, and the translation layer is broken. The challenge comes from the format used to define the rules: nftables sends Netfilter bytecode to the kernel; the proof of concept sends this bytecode to bpfilter instead, which has to transform it into its internal data format. The other bpfilter developers and I expect to refactor it in 2025 and create a generic-enough framework to easily expand nftables support.

Depending on the source of the request, the format of the data is different: iptables sends binary structures, nftables sends bytecode, and bfcli sends different binary structures. The rules need to be translated into a generic format so that bpfilter can process every rule similarly.

The front end is also responsible for bridging gaps between the CLI and the daemon's expectations: iptables has counters for the number of packets and bytes processed by each rule, but bpfilter only creates such counters when requested, so the iptables front end is in charge of doing so.

Not all front ends are created equal, though. Iptables can only use BPF Netfilter hooks, which map to iptables's tables; it can't request that an express data path (XDP) program be created. bfcli, on the other hand, is designed to support the full extent of the daemon's features.

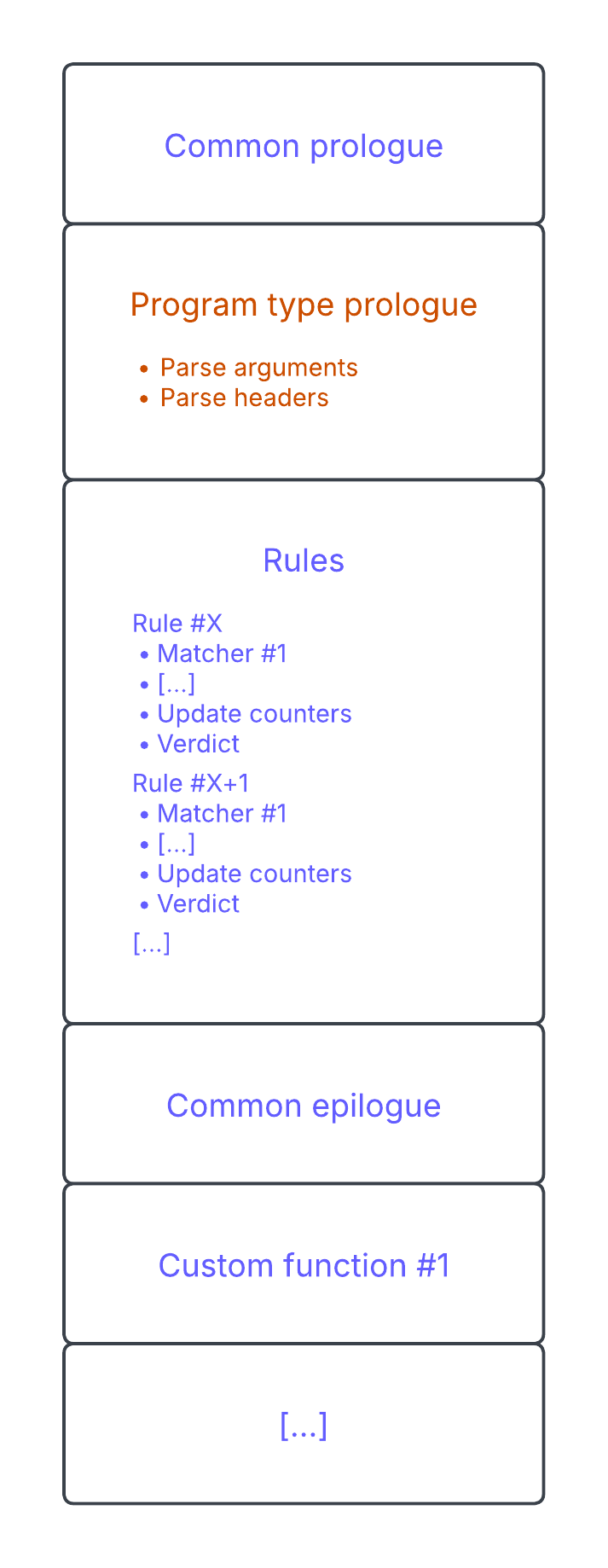

The logical format used by bpfilter to define filtering rules is similar to the one used by iptables and nftables: a chain contains one or more rules to match network traffic against, and a default action if no rule is matched (a "policy"). Filtering rules contain zero or more matching criteria; if all criteria match the processed packet, the verdict is applied. In the daemon, a chain will be translated into a single BPF program, attached to a hook. That program is structured like this:

At the beginning, the BPF program is not much more than a buffer of memory to be filled with bpf_insn structures. All of the generated BPF programs will be structured similarly: they start with a generic prologue to initialize the run-time context of the program and save the program's argument(s) on the stack. Then the program type prologue: depending on the chain, the arguments given to the program are different, and we need to continue initializing the run-time context with program-type-specific information. This section will contain different instructions if the program is meant to be attached to XDP or one of the other places a BPF program can be attached to the kernel's networking subsystem.

Following that are the filtering rules, which are translated into bytecode sequentially. The first matching rule will terminate the program. Finally, there is the common epilogue containing the policy of the chain. Eventually, bpfilter will generate custom functions in the BPF programs to avoid code duplication, for example to update the packet counters. Those functions are added after the program's exit instruction, but only if they are needed.
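The control flow described above can be modeled in a few lines of Python. This is only a sketch of the logic that a generated program implements, not bpfilter's code or its generated bytecode; the `Rule` and `Chain` classes, the packet dictionary, and the `ACCEPT`/`DROP` verdict names are assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Packet = Dict[str, object]
ACCEPT, DROP = "ACCEPT", "DROP"  # illustrative verdict names


@dataclass
class Rule:
    matchers: List[Callable[[Packet], bool]]  # zero or more matching criteria
    verdict: str
    packet_count: int = 0  # per-rule counters, updated on each match
    byte_count: int = 0

    def matches(self, pkt: Packet) -> bool:
        # all() of an empty list is True: a rule without criteria matches everything.
        return all(m(pkt) for m in self.matchers)


@dataclass
class Chain:
    policy: str
    rules: List[Rule] = field(default_factory=list)

    def run(self, pkt: Packet) -> str:
        # "Prologue": set up the run-time context from the program's argument.
        size = int(pkt["size"])
        # Rules are evaluated in order; the first match ends the program.
        for rule in self.rules:
            if rule.matches(pkt):
                rule.packet_count += 1
                rule.byte_count += size
                return rule.verdict
        # "Epilogue": no rule matched, so the chain's policy applies.
        return self.policy


chain = Chain(
    policy=ACCEPT,
    rules=[
        Rule([lambda p: p["src"] == "192.0.2.1"], DROP),
        Rule([lambda p: p["proto"] == "icmp", lambda p: p["size"] > 1000], DROP),
    ],
)

verdicts = [
    chain.run({"src": "192.0.2.1", "proto": "tcp", "size": 60}),
    chain.run({"src": "198.51.100.7", "proto": "icmp", "size": 1400}),
    chain.run({"src": "198.51.100.7", "proto": "icmp", "size": 84}),
]
```

The third packet matches only one of the second rule's two criteria, so it falls through to the policy.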

Throughout the programs, there are times we need to interact with other BPF objects (e.g. a map), even though those objects do not exist yet. For example, a rule might need to update its counters when it matches a packet. While counters are stored in a map, it doesn't exist yet at the time the bytecode is generated. Instead, a "fixup" instruction is added, which is an instruction to be updated later, when the map exists and its file descriptor can be inserted into the bytecode. Fixups are used extensively to update a map file descriptor, a jump offset, or even a call offset.
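A fixup pass can be sketched as follows. The model below is plain Python rather than real BPF bytecode: instructions are simple tuples, the opcode names are made up, and `-1` is an arbitrary placeholder. It only aims to show the two-phase pattern of emitting an instruction with a placeholder, recording where it is, and patching it once the real value is known.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

Insn = Tuple[str, int]  # (opcode, immediate); a stand-in for struct bpf_insn
PLACEHOLDER = -1


@dataclass
class Program:
    insns: List[Insn] = field(default_factory=list)
    # Each fixup records (instruction index, kind of value still missing).
    fixups: List[Tuple[int, str]] = field(default_factory=list)

    def emit(self, opcode: str, imm: int = 0) -> int:
        """Append an instruction and return its index."""
        self.insns.append((opcode, imm))
        return len(self.insns) - 1

    def emit_fixup(self, opcode: str, kind: str) -> None:
        """Append an instruction whose immediate will be filled in later."""
        idx = self.emit(opcode, PLACEHOLDER)
        self.fixups.append((idx, kind))

    def resolve(self, kind: str, value: int) -> int:
        """Patch every pending fixup of the given kind; return how many."""
        pending = [f for f in self.fixups if f[1] == kind]
        for idx, _kind in pending:
            opcode, _old = self.insns[idx]
            self.insns[idx] = (opcode, value)
        self.fixups = [f for f in self.fixups if f[1] != kind]
        return len(pending)


prog = Program()
prog.emit("mov", 0)
prog.emit_fixup("load_map_fd", "counters_map")  # the map does not exist yet
prog.emit("call_update_counters")
prog.emit_fixup("load_map_fd", "counters_map")
prog.emit("exit")

# Later, once the map has been created and has a file descriptor:
counters_fd = 7  # hypothetical descriptor value
patched = prog.resolve("counters_map", counters_fd)
```

The same bookkeeping would work for jump or call offsets: only the `kind` label and the value patched in would differ.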

When the chain has been translated into BPF bytecode, bpfilter will create the required map and update the program accordingly (processing the fixups described above). Different maps are created depending on the features used by the chain: an array to store the packet counters, another array to store the log messages if something goes wrong, a hash map to filter packets based on a set of similarly formatted data, etc.

Then the program is loaded, and the verifier will ensure the bytecode is valid. If the verification fails due to a bug in bpfilter, the program is unloaded, the maps are destroyed, and an error is returned. On success, the program and its maps are now visible on the system.

Finally, bpfilter attaches the program to its hook using a BPF link. Using links allows for atomic replacement of the program if it has to be updated.

Performance

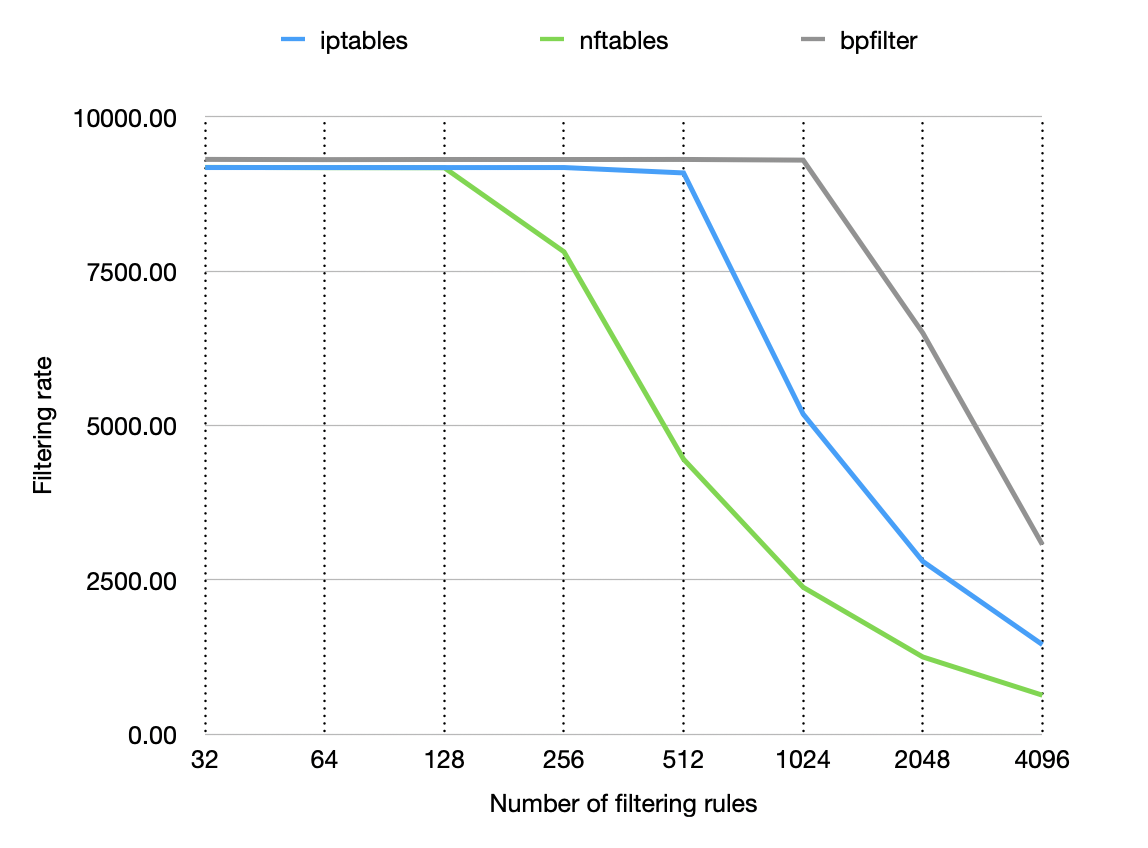

When it comes to filtering performance, the main factor is: how big is the ruleset? In other words, how many rules can we define before the performance deteriorates? There are ways to mitigate this issue, but you can't get away from it completely. Thanks to BPF, bpfilter can raise this limit, allowing bigger rulesets before the performance starts to drop, as we can see below.

The benchmark presented is synthetic, but it demonstrates the impact of the number of rules on the filtering rate for a 10G network link (9300Mb/s baseline). Note the horizontal log scale — bpfilter handles twice as many rules as iptables and eight times as many as nftables before seeing a drop off in performance. All of the filtering rules are attached to the pre-routing hook, and the added rules filter out additional IP addresses.

Future plans

From a user perspective, all of the features currently supported by bpfilter are documented on bpfilter.io. The project is evolving quickly, and hopefully bugs are being fixed faster than they are introduced.

The project roadmap is meant to be as transparent as possible, so it is available on the project's GitHub repository. The main highlights for the first half of 2025 would be proper support for nftables, integration of user-provided (and compiled) BPF programs, and generic sets.

Feel free to try the project and provide feedback; I would happily address it. You can also jump into the code and contribute if that's what you like. I'm always available to answer questions and mentor any contributor.

The future of Flatpak

At the Linux Application Summit (LAS) in April, Sebastian Wick said that, by many metrics, Flatpak is doing great. The Flatpak application-packaging format is popular with upstream developers, and with many users. More and more applications are being published in the Flathub application store, and the format is even being adopted by Linux distributions like Fedora. However, he worried that work on the Flatpak project itself had stagnated, and that there were too few developers able to review and merge code beyond basic maintenance.

I was not able to attend LAS in person or watch it live-streamed, so I watched the YouTube video of the talk. The slides are available from the talk page. Wick is a member of the GNOME Project and a Red Hat employee who works on "all kinds of desktop plumbing", including Flatpak and desktop portals.

Flatpak basics

Flatpak was originally developed by Alexander Larsson, who had been working on similar projects stretching back to 2007. The first release was as XDG-App in 2015. It was renamed to Flatpak in 2016, a nod to IKEA's "flatpacks" for delivering furniture.

The Flatpak project provides command-line tools for managing and running Flatpak applications, tools for building Flatpak bundles, and runtimes that provide components for Flatpak applications. The project uses control groups, namespaces, bind mounts, seccomp, and Bubblewrap to provide application isolation ("sandboxing"). Flatpak content is primarily delivered using OSTree, though support for using Open Container Initiative (OCI) images has been available since 2018 and is used by Fedora for its Flatpak applications. The "Under the Hood" page from Flatpak's documentation provides a good overview of how the pieces fit together.

Slowing development

Wick started his talk by saying that it looks like everything is great with the Flatpak project, but if one looks deeper, "you will notice that it's not being actively developed anymore". There are people who maintain the code base and fix security issues, for example, but "bigger changes are not really happening anymore". He said that there are a bunch of merge requests for new features, but no one feels responsible for reviewing them, and that is kind of problematic.

The reason for the lack of reviewers is that key people, such as Larsson, have left the project. Every now and then, Wick said, Larsson may get involved if it's necessary, but he is basically not part of the day-to-day development of the project. Wick said that it is hard to get new Flatpak contributors involved because it can take months to get feedback on major changes, and then more months to get another review. "This is really not a great way to get someone up to speed, and it's not a great situation to be in."

"Maybe I'm complaining about something that is actually not that much of an issue", he said. Flatpak works; it does its job, and "we just use it and don't think about it much". In that sense, the project is in a good spot. But he has still been thinking about how the project is "living with constraints" because contributors do not have the opportunity to go in and make bigger changes.

As an example, Wick said that Red Hat has been doing work that would allow Flatpaks to be installed as part of a base installation. The vendor or administrator could specify the applications to be installed, and a program called flatpak-preinstall would take care of the rest. The feature has been implemented and is planned for inclusion in Red Hat Enterprise Linux (RHEL) 10. The work was started by Kalev Lember and Owen Taylor last June, but the original pull request was closed by Lember in February as he was leaving Red Hat and would not be working on it anymore. It was picked up by Wick in February as a new request but wasn't reviewed until early May.

OSTree and OCI

Wick's next topic was OCI support in Flatpak. While OSTree has been a success in some ways, and it is still being maintained, it is not undergoing active development. He noted that developers have a "very narrow set of tools" for working with OSTree, so building Flatpaks that use OSTree requires non-standard and bespoke tools, but there is a whole range of utilities available for working with OCI images. Even better, tools for working with OCI images "are all developed by people other than us, which means we don't actually have to do the work if we just embrace them".

Unfortunately, there are a number of OCI-related improvements that, again, are waiting on review to be merged into Flatpak. For example, Wick mentioned that the OCI container standard has added zstd:chunked support. Instead of the original OCI image format that uses gzipped tarballs, the zstd:chunked images are compressed with zstd and have skippable frames that include additional metadata—such as a table of contents—which allows file-level deduplication. In short, zstd:chunked allows pulling only those files that have changed since the last update, rather than an entire OCI layer, when updating a container image or a Flatpak.
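The file-level deduplication idea can be illustrated with a short Python sketch. It assumes a simplified table of contents that maps each path to a content digest and a local store keyed by digest; the function names are hypothetical, and the real zstd:chunked format (frame layout, chunking within files, metadata fields) is considerably more involved than this model.

```python
import hashlib


def digest(data: bytes) -> str:
    """Content digest used as the deduplication key."""
    return hashlib.sha256(data).hexdigest()


def plan_update(toc: dict[str, str], local_store: dict[str, bytes]) -> list[str]:
    """Return the paths whose content is not already in the local store."""
    return sorted(path for path, d in toc.items() if d not in local_store)


def apply_update(toc, local_store, fetch):
    """Fetch only the missing files; fetch(path) stands in for a ranged download."""
    fetched = []
    for path in plan_update(toc, local_store):
        if toc[path] in local_store:
            continue  # an earlier fetch in this loop already supplied the content
        data = fetch(path)
        if digest(data) != toc[path]:
            raise ValueError(f"digest mismatch for {path}")
        local_store[toc[path]] = data
        fetched.append(path)
    return fetched
```

With a table of contents available up front, an update touching one file results in one fetch, rather than a download of the whole layer.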

There is a pull request from Taylor, submitted in September 2023, that would add support to Flatpak for zstd-compressed layers. It has received little attention since then and "it's just sitting there, currently".

Narrowing permissions

One of the key functions of Flatpak is to sandbox applications and limit their access to the system. Wick said that the project has added features to "narrow down" the sandboxes and provide more restricted permissions. As an example, Flatpak now has --device=input to allow an application to access input devices without having access to all devices.

One problem with this, he said, is that a system's installation of Flatpak may not support the newer features. A user's Linux distribution may still be providing an older version of Flatpak that does not have support for --device=input, or whatever new feature that a Flatpak developer may wish to use. Wick said there needs to be a way for applications to use the new permissions by default, but fall back to the older permission models if used on a system with an older version of Flatpak.
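The fallback behavior Wick described might look something like the following Python sketch. It is not how Flatpak implements (or plans to implement) this; `resolve_device()` and `run_args()` are hypothetical helpers, and the set of permissions a given host supports is simply passed in as an assumption.

```python
def resolve_device(preferences: list[str], supported: set[str]) -> str:
    """Pick the first (narrowest) device permission that the host understands."""
    for device in preferences:
        if device in supported:
            return device
    raise LookupError("host supports none of the requested device permissions")


def run_args(preferences: list[str], supported: set[str]) -> list[str]:
    """Build the --device argument for a host with the given capabilities."""
    return [f"--device={resolve_device(preferences, supported)}"]
```

An application would list its preferences from narrowest to broadest, for example `["input", "all"]`, so that a newer Flatpak grants only input devices while an older one falls back to the broader permission.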

This isn't an entirely new situation, he said. "We had this before with Wayland and X11", where if a system is running Wayland, then Flatpak should not bind-mount an X11 socket. Now, there is a similar scenario with the xdg-desktop portal for USB access, which was added to the xdg-desktop-portal project in 2021. Support for that portal was merged into Flatpak in 2024 after several iterations. What is missing is the ability to specify backward-compatible permissions so that a Flatpak application can be given USB access (--device=usb) with newer versions of Flatpak but retain the --device=all permissions if necessary. Once again, there is a pull request (from Hubert Figuière) that implements this, but Wick said that "it's also just sitting there".

Wick would also like to improve the way that Flatpak handles access to audio. Currently, Flatpak still uses PulseAudio even if a host system uses PipeWire. The problem with that is that PulseAudio bundles together access to speakers and microphones; you can have access to both, or neither, but not just one. So if an application has access to play sound, it also has access to capture audio, which Wick said, with a bit of understatement, is "not great". He would like to be able to use PipeWire, which can expose restricted access to speakers only.

One thing that has been a bit of a pain point, Wick said, is that nested sandboxing does not work in Flatpak. For instance, an application cannot use Bubblewrap inside Flatpak. Many applications, such as web browsers, make heavy use of sandboxing.

They really like to put their tabs into their own sandboxes because it turns out that if one of those tabs is running some code that manages to exploit and break out of the process there, at least it's contained and doesn't spread to the rest of the browser.

What Flatpak does instead, currently, is to have a kind of side sandbox that applications can call to and spawn another Flatpak instance that can be restricted even further. "So, in that sense, that is a solution to the problem, but it is also kind of fragile." There have been issues with this approach for quite a while, he said, but no one knows quite how to solve them.

Ideally, Flatpak would simply support nested namespacing and nested sandboxes, but currently it does not. Flatpak uses seccomp to prevent applications in a sandbox from having direct access to user namespaces. There is an API that can be used to create a sub-sandbox, but it is more restrictive. He said that the restrictions to user namespaces are outdated: "for a long time it wasn't really a good idea to expose user namespacing because it exposed a big kernel API to user space that could be exploited". Wick feels that user namespaces are, nowadays, a well-tested and a much-used interface. He does not think that there is much of a good argument against user namespaces anymore.

xdg-dbus-proxy

Flatpak applications do not talk directly to D-Bus. Instead, flatpak-run spawns an xdg-dbus-proxy for every Flatpak instance that is "not exactly in the same sandbox, it's just on the side, basically". The proxy is responsible for setting up filtering according to rules that are processed when flatpak-run is used to start an application. When setting up the proxy, Flatpak starts with a deny-all state and then adds specific connections that are allowed. This is so that applications do not expose things that other applications are not supposed to use.
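A deny-by-default filter of the kind described above can be sketched in Python as follows. This is a deliberately minimal model, not xdg-dbus-proxy's actual rule syntax or matching logic; `BusFilter`, `filter_for_launch()`, and the `org.example.Secrets` name are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BusFilter:
    """Toy policy: anything not explicitly allowed is denied."""

    allowed: frozenset = frozenset()

    def permits(self, bus_name: str) -> bool:
        return bus_name in self.allowed


def filter_for_launch(rules):
    """Build the policy once, at launch time, from the processed rules."""
    return BusFilter(frozenset(rules))
```

Because the dataclass is frozen, the allowed set is fixed once the filter has been built at launch.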

Wick said that he would like to move filtering from xdg-dbus-proxy directly to the D-Bus message brokers and provide policy based on a cgroups path. This is not something that has been implemented already, but he said he planned to work on a prototype in busd, which is a D-Bus broker implementation in Rust.

That would also allow for a more dynamic policy, which would allow applications to export services to other applications on the fly. Currently, the policy is set when a Flatpak is run, and can't be modified afterward.

As a side note, that means that Flatpak applications cannot talk to one another over D-Bus. They can still communicate with other applications; for example, Wick said that applications can communicate over the host's shared network namespace, "which means you can use HTTP or whatever, there are like thousands of side channels you could use if you wanted to".

Flatpak's network namespacing is "kind of ugly, and I don't really have a good solution here", Wick said, but he wanted to point out that it is something the project should take a look at. "Like, you bind something on localhost and suddenly all applications can just poke at it". He gave the example of AusweisApp, which is an official authentication app for German IDs that can be used to authenticate with government web sites. It exposes a service on the local host, which makes it available to all Flatpak applications on the system.

This is some of the stuff that I feel like we really need to take a look at. I'm not sure if this is like directly exploitable, but at the very least it's kind of scary.

Wick said that the project needs to create a network namespace for Flatpak applications, "but we don't really have any networking experts around, which is kind of awkward, we really have to find a solution here".

Another awkward spot the project finds itself in, he said, is with NVIDIA drivers. The project has to build multiple versions of NVIDIA drivers for multiple runtimes that are supported, and that translates to a great deal of network overhead for users who have to download each of those versions—even if they don't need all of the drivers. (This complaint on the Linux Mint forum illustrates the problem nicely.) It also means that games packaged as Flatpaks need to be continually updated against new runtimes, or they will eventually stop working because their drivers stop being updated and the games will not support current GPUs.

Wick's suggestion is to take a cue from Valve Software. He said that Valve uses a model similar to Flatpak to run its games, but it uses the drivers from the host system and loads all of the driver's dependencies in the sandbox for the game. Valve uses the libcapsule library to do this, which is "kind of fragile" and difficult to make sure that it works well. Instead of using libcapsule, he would like to statically compile drivers and share them between all Flatpak applications. This is just in the idea stage at the moment, but Wick said he would like to solve the driver problem eventually.

Portals

Portals are D-Bus interfaces that provide APIs for things like file access, printing, opening URLs, and more. Flatpak can grant sandboxed applications access to portals to make D-Bus calls. Wick noted that portals are not part of the Flatpak project but they are crucial to it. "Whatever we do with portals just directly improves Flatpak, and there are a bunch of portal things we need to improve".

He gave the example of the Documents portal, which makes files outside the sandbox available to Flatpak applications. The Documents portal is great for sharing single files, but it is too fine-grained and restrictive for other applications, such as Blender, GIMP, or music applications, that may need to access an entire library of files. "You want a more coarse-grained permission model for files at some point". There are some possibilities, he said, such as bind mounting user-selected host locations into the sandbox.

Wick had a number of ideas that he would like to see implemented for portals, such as support for autofilling passwords, Fast Identity Online (FIDO) security keys, speech synthesis, and more. He acknowledged that it's "kind of hard to write" code for portals right now, but there is work to make it easier by using libdex. (See Christian Hergert's blog post on libdex for a short look at this.) It might even make sense to rewrite things in Rust, he said.

Flatpak-next

Assume that it's ten years in the future, Wick said, and no one is working on Flatpak anymore. "What would you do with Flatpak if you could just rewrite it? I think the vision where we should go is OCI for almost everything." Larsson's choices in creating Flatpak were good and sound technical decisions at the time, but they ended up being "not the thing that everyone else has". That is an issue because only a few people understand what Flatpak does, and the project has to do everything itself.

But, he said, if the project did "everything OCI", it would get a lot of things for free, such as OCI registries and tooling. Then it just comes down to what flatpak-run has to do, and that would not be very much. Rethinking Flatpak with modern container tools and aligning with the wider container ecosystem, he said, would make everything easier and is worth exploring. Once again, he floated the idea of using Rust for a rewrite.

Q&A

There was a little time for questions at the end of Wick's session. The first was about what happens to existing Flatpaks if the project moves to OCI tooling. "Would I need to just throw away [applications] and download again, or is that too much in the future, and you haven't thought about that?" Wick said that it would be an issue on the client side, but Flathub (for example) has all of the build instructions for its Flatpaks and could simply rebuild them.

Another audience member was concerned about using container infrastructure. They said that OCI registries that store images are missing indexing and metadata that is consumed by applications like GNOME Software for Flatpaks. What would be the way forward to ensure that they could preserve the same user experience? Wick said that there is now a standard for storing non-images in OCI registries, which would allow storing "the same things we're currently storing" for Flatpak, but writing the code to do it and getting it merged would be the hard part.

The final question was whether there was anything concrete planned about using PipeWire directly with Flatpak rather than the PulseAudio routing. Wick said that he had been talking with Wim Taymans, the creator of PipeWire, about how to add support for it within Flatpak. It is mostly about "adding PipeWire policy to do the right thing when it knows that it is a Flatpak instance", he said.

A FUSE implementation for famfs

The famfs filesystem is meant to provide a shared-memory filesystem for large data sets that are accessed for computations by multiple systems. It was developed by John Groves, who led a combined filesystem and memory-management session at the 2025 Linux Storage, Filesystem, Memory Management, and BPF Summit (LSFMM+BPF) to discuss it. The session was a follow-up to the famfs session at last year's summit, but it was also meant to discuss whether the kernel's direct-access (DAX) mechanism, which is used by famfs, could be replaced in the filesystem by using other kernel features.

Groves said that he works for a company that makes memory; what it is trying to do is "make bigger problems fit in memory through pools of disaggregated memory" as an alternative to sharding. He comes from a physics background where they would talk about two kinds of problems: those that fit in memory and those that do not. That's still true today, even though there is lots more memory available.

For the most part, famfs is targeting read-only or read-mostly use cases, at least "for sane people". For crazy people, though, it is possible to handle shared-writable use cases; the overhead for ensuring coherency will not make users happy, however. It turns out that many analytics use cases simply need shared read-only access to the data once it is loaded.

David Howells asked what he meant by "disaggregated"; Groves said he did not want to use the term "CXL", but that is the best example of disaggregated memory, which means it is not private to a single server. There are other ways to provide that, but CXL is the most practical choice currently. Linux can provide ways to access disaggregated memory, but it cannot manage it like regular system RAM.

![John Groves [John Groves]](https://static.lwn.net/images/2025/lsfmb-groves-sm.png)

There are some other differences between system RAM and "special-purpose memory" (SPM), which is the category disaggregated memory falls into. System RAM is not shareable between systems, while SPM is. The reliability, availability, and serviceability (RAS) "blast radius" for system RAM includes the kernel, while, for SPM, it is only the applications that are affected by an outage, since the kernel does not directly use the memory. When using system RAM, no device abstraction is needed as the memory is simply presented as a contiguous range, but he would argue that any time two systems (or subsystems) need to agree on the handling of the contents of memory, a device abstraction is required.

Famfs is "an append-only log-structured filesystem" with a log that is stored in memory. It prohibits truncate and delete operations, though filesystems can simply be destroyed if needed; that is done to avoid having to handle clients with stale metadata. He is currently working on two separate implementations: one is a kernel filesystem that he talked about last year and the other is a Filesystem in Userspace (FUSE) version. The latter was suggested in last year's session and he has it working, but had not yet posted it; a month later, on April 20, he posted RFC patches for FUSE famfs.

Despite the fact that CXL is not commercially available, at least without having connections in the industry, there are already users of famfs. He is "getting, sometimes cagey, feedback from hyperscalers" and analytics companies are also using it. Famfs allows users to rethink how much of the data set they can put in memory; putting a 64TB data frame into memory that can be shared among compute nodes is valuable even if the access is slower. No one likes to do sharding, Groves said, but they do it because they have to.

The FUSE port of famfs adds two new FUSE messages. The first is GET_FMAP, which retrieves the full mapping from a file to its DAX extents that gets cached in system memory. That means that all active files can be handled quickly since their metadata is cached in memory. The other new message is GET_DAXDEV, which retrieves the DAX device information. It is used if the file map refers to DAX devices that are not yet known to the FUSE server.
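To make the role of the cached file map concrete, here is a small Python sketch of an extent lookup. The `Extent` fields, the `FmapCache` class, and the callback standing in for a GET_FMAP request are assumptions made for illustration; the actual famfs message layout and kernel data structures are not described in this article.

```python
from bisect import bisect_right
from dataclasses import dataclass


@dataclass(frozen=True)
class Extent:
    file_offset: int  # where the extent starts within the file
    length: int       # extent size in bytes
    dev_index: int    # which DAX device backs the extent
    dev_offset: int   # where the extent starts on that device


class FileMap:
    """Sorted extent list that translates file offsets to device offsets."""

    def __init__(self, extents):
        self.extents = sorted(extents, key=lambda e: e.file_offset)
        self.starts = [e.file_offset for e in self.extents]

    def resolve(self, offset):
        i = bisect_right(self.starts, offset) - 1
        if i < 0:
            raise ValueError("offset is before the first extent")
        e = self.extents[i]
        if offset >= e.file_offset + e.length:
            raise ValueError("offset is not mapped")
        return e.dev_index, e.dev_offset + (offset - e.file_offset)


class FmapCache:
    """Fetch each file's map once, then answer lookups from memory."""

    def __init__(self, get_fmap):
        self.get_fmap = get_fmap  # stands in for a GET_FMAP round trip
        self.maps = {}
        self.requests = 0

    def lookup(self, inode, offset):
        if inode not in self.maps:
            self.requests += 1
            self.maps[inode] = FileMap(self.get_fmap(inode))
        return self.maps[inode].resolve(offset)
```

After the first lookup on a file, every later access is resolved from the cached map without another round trip to the server, which is the point of retrieving the full mapping up front.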

The famfs FUSE implementation in the kernel provides read, write, and mmap() capabilities, along with fault handling. There are some small patches for libfuse to handle the new messages as well. Famfs disables the READDIRPLUS functionality, which returns stat() information for multiple files, because it does not provide the needed file-map information.

He has been asked if famfs could use memfd (from memfd_create()) or guest_memfd instead of DAX; he believes the answer is "probably not", but wanted to discuss it with attendees. Using a DAX device allows errors with the memory to be returned, which famfs uses, though it is not yet plumbed into the FUSE version. Previously, he supported both persistent memory (pmem) and DAX devices as the backing store for famfs, but it was confusing, so he dropped pmem support. Using memfd is not workable, since it operates on system RAM and not SPM, he said.

There is some information out there about using guest_memfd with DAX, Groves said, or maybe it is all just an AI hallucination. David Hildenbrand said that there were ideas floating around about that; guest_memfd is just a file, not a filesystem, but perhaps the allocator could be changed to use SPM, instead of system RAM. Groves thought that would defeat the use case for famfs; it is important that the SPM be accessible from all of the systems in a cluster, which a DAX device can provide and it did not sound like guest_memfd could do so.

There are a number of different kinds of use cases that benefit from the famfs approach, Groves said. One example is providing parallel access to an enormous RocksDB database. Another, which kernel developers may not be aware of, is data frames for Apache Arrow, which provides a memory-efficient layout for data that makes it easily accessible from CPUs and GPUs for analytics workloads. Famfs can be used for "ginormous Arrow data frames that multiple nodes want to mmap()" with full isolation between the different files in the filesystem.

Matthew Wilcox said that he was not going to repeat his well-known criticisms of CXL, which Groves acknowledged with a chuckle. The approach taken with famfs is reasonable for an experiment, Wilcox continued, but his concern is that there are already several shared-storage filesystems in the kernel, such as OCFS2 and GFS2; why not adapt one of those for the famfs use cases? Groves said he is not a cluster-filesystem expert but the main difference is that famfs is backed by memory, not storage; reads are done in increments of cache lines, not pages. If, for example, the application is chasing pointers through the data, each new access just requires a read of a cache line.

As the session wound down, there was some discussion of problems that Groves had reported that resulted in spurious warnings from the kernel. He said that he thinks those problems are fixable, and that pmem does not have the same kinds of problems. He hopes to be able to apply the pmem solution to famfs.

A look at what's possible with BPF arenas

BPF arenas are areas of memory where the verifier can safely relax its checking of pointers, allowing programmers to write arbitrary data structures in BPF. Emil Tsalapatis reported on how his team has used arenas in writing sched_ext schedulers at the 2025 Linux Storage, Filesystem, Memory-Management, and BPF Summit. His biggest complaint was about the fact that kernel pointers can't be stored in BPF arenas — something that the BPF developers hope to address, although there are some implementation problems that must be sorted out first.

Tsalapatis started by saying that he and his team have been happy overall with arenas. They have used arenas in several different scheduler experiments, which is how they've accumulated enough feedback to dedicate a session to it. In particular, with a few tweaks, he believes that arenas could be useful for allowing the composition of different BPF schedulers.

![Emil Tsalapatis [Emil Tsalapatis]](https://static.lwn.net/images/2025/emil-tsalapatis-lsfsmmbpf-small.png)

Sched_ext is the kernel's framework for writing scheduling policies in BPF. The mechanism is designed to allow scheduler developers to rapidly experiment with alternative approaches, but it has also seen some success in allowing a user-space control plane to communicate important information about processes to the kernel.

In the BPF schedulers Tsalapatis has worked on, the main way they represent scheduling state is with C structures shared between user space and the kernel. These structures have statistics, control info, CPU masks, and anything else necessary to make scheduling decisions about a task. Often, that includes identifiers that refer to other parts of the scheduler, such as affinity layers (sets of tasks with similar scheduling needs and characteristics) or related tasks. None of that is "really possible with map-based storage".

Sharing the same information using BPF maps, he explained, would involve a lot of pointer-chasing between different maps. BPF maps aren't well suited to representing complex data structures. BPF arenas, on the other hand, are essentially a "big blob of memory" that the programmer can do whatever they would like with. That freedom let Tsalapatis's scheduling code be more expressive, and in turn enabled him to implement features that would have previously been infeasible, such as faster migrations within the last-level cache domain.

Arenas have one big drawback, however: they only store data. Even if, from the point of view of the CPU, there's no real difference between a pointer in the kernel and a pointer in user space, there's a big difference from BPF's point of view. The verifier prevents BPF programs from creating pointers to kernel objects, for security reasons. Even if a program writes a kernel pointer to an arena, the verifier doesn't allow it to be used once it is read back.

So, in practice, sched_ext developers break their data structures into two parts: one part in the arena, for ease of use, and one part stored in a BPF map, to hold references to kernel objects. This works, but it's inconvenient, and obviates many of the advantages of arenas. Tsalapatis and his team partially worked around the problem by creating their own spinlocks backed by the arena, so that they didn't need to manage pointers to kernel spinlocks. That only covers the data structures that they can easily replicate in BPF, however.
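The split described above can be modeled in Python, with a byte buffer playing the arena and a separate table playing the BPF map that owns kernel references. Everything here is a hypothetical illustration (the record layout, `HandleTable`, and the use of a frozenset as a stand-in for a kernel CPU mask); it is not sched_ext code.

```python
import struct

TASK_FORMAT = "=QI"  # hypothetical layout: 64-bit vruntime, 32-bit handle index
TASK_SIZE = struct.calcsize(TASK_FORMAT)


class HandleTable:
    """Stands in for a BPF map that owns references to kernel objects."""

    def __init__(self):
        self._objects = []

    def put(self, kernel_object):
        self._objects.append(kernel_object)
        return len(self._objects) - 1

    def get(self, index):
        return self._objects[index]


def write_task(arena, offset, vruntime, mask_handle):
    # Only plain data goes into the arena: a number and an index, no pointers.
    struct.pack_into(TASK_FORMAT, arena, offset, vruntime, mask_handle)


def read_task(arena, offset):
    return struct.unpack_from(TASK_FORMAT, arena, offset)
```

Every use of the kernel object requires a second lookup through the table, which is the inconvenience that the developers would like to see removed.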

Tsalapatis's main question for the BPF developers was: is it possible to allow kernel pointers to be stored in an arena? What needs to be figured out to make that happen?

He also had a handful of related usability concerns. Right now, when they need to pass data from their arena-backed structures to a kernel function, they have to copy it to the BPF stack, which Tsalapatis described as "kind of clunky". Worse, helper functions can't be written in a way that is indifferent to whether a structure is stored in an arena or on the stack; the verifier treats the types differently. This is because the lower bits of arena pointers are the same between kernel space and user space, to facilitate the writing of shared data structures. In turn, that imposes special requirements on a BPF program when it accesses data through an arena pointer. So the helper functions either need to be duplicated or inlined, neither of which is ideal.

Alexei Starovoitov was grateful for the feedback on arenas, but said that he had "good and bad news" about Tsalapatis's request. Work is already in progress on many of the things he asked for. Unfortunately, the work is still in progress because there are difficult problems to solve, so any fixes are going to take a long time, Starovoitov said. In particular, it's not clear how to let the verifier mix stack-backed and arena-backed pointers in a reasonable way.

Tsalapatis said that using inline functions (which let the verifier base the type information on the specific call site in question) does work, it is just less convenient than it could be. Starovoitov agreed that it would be better to fully support mixed stack/arena pointers, but simply didn't have any idea how to make it work at present. The BPF just-in-time (JIT) compiler uses the type information that the verifier attaches to pointers in order to emit correct loads and stores, for one thing.

Starovoitov was curious what kinds of kernel pointers Tsalapatis wanted to store in arenas; thus far, he had mentioned CPU masks and spinlocks, but Starovoitov expected a scheduler to need more than that. Tsalapatis explained that for other data structures, such as the representation of a task, the BPF scheduler often needs its own version anyway, so it's less inconvenient to store an index into a map for the kernel version.

With the problem somewhat defined, the BPF developers launched into an extended discussion about how best to permit storing kernel pointers in arenas. Options mentioned included adding an extra level of indirection (essentially automating the technique of storing kernel pointers in a map and referencing them by index) and adding a "shadow page" to contain kernel pointers.

Depending on how exactly it is implemented, that approach could have substantial implications for the memory use of BPF arenas. One proposed approach was to allocate a separate page, not accessible by user space, for each page of the arena in which kernel pointers are stored. That could result in a situation where reading from an address in an arena returns different data depending on whether the program expects to read a kernel pointer or plain data, which was somewhat unpopular. Another variant of the idea would be to allocate a packed bitmap tracking the validity of stored kernel pointers, a bit like CHERI hardware does. That has its own problems, however, and complicates every access to the arena. Ultimately, the discussion did not come to a conclusion.
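The packed-bitmap variant can be sketched as a toy Python model, assuming one validity bit per aligned 8-byte slot. The `TaggedArena` class and its method names are hypothetical and do not correspond to any proposed kernel interface; the sketch only shows why a tag bit lets plain data and kernel pointers share the same memory.

```python
import struct

SLOT = 8  # bytes in one pointer-sized slot


class TaggedArena:
    """Toy arena with one validity bit per slot, kept in a packed bitmap."""

    def __init__(self, size):
        if size % SLOT:
            raise ValueError("size must be a multiple of the slot size")
        self.data = bytearray(size)
        self.tags = bytearray((size // SLOT + 7) // 8)

    def _slot(self, offset):
        if offset % SLOT or not 0 <= offset <= len(self.data) - SLOT:
            raise ValueError("offset must name an aligned slot inside the arena")
        return offset // SLOT

    def _store(self, offset, value, is_kptr):
        byte, bit = divmod(self._slot(offset), 8)
        struct.pack_into("=Q", self.data, offset, value)
        if is_kptr:
            self.tags[byte] |= 1 << bit
        else:
            self.tags[byte] &= ~(1 << bit) & 0xFF

    def store_data(self, offset, value):
        # A plain store always clears the tag, so forged pointers stay unusable.
        self._store(offset, value, is_kptr=False)

    def store_kptr(self, offset, address):
        # Only this trusted path marks a slot as holding a valid kernel pointer.
        self._store(offset, address, is_kptr=True)

    def load_data(self, offset):
        self._slot(offset)
        return struct.unpack_from("=Q", self.data, offset)[0]

    def load_kptr(self, offset):
        byte, bit = divmod(self._slot(offset), 8)
        if not self.tags[byte] >> bit & 1:
            raise PermissionError("slot does not hold a valid kernel pointer")
        return struct.unpack_from("=Q", self.data, offset)[0]
```

Even in this small model, every store has to update the bitmap as well as the data.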

Tsalapatis's last piece of feedback on arenas was not directly related to his earlier questions about storing kernel pointers. One of the key advantages of arenas is that the verifier doesn't need to validate operations inside an arena. This makes them flexible, but it can also make it more difficult to track down bugs. Like in a typical C program, and unlike in other kinds of BPF programs, accesses past the end of a structure don't cause an immediate verification failure. Tsalapatis asked everyone to think about ways to help combat this, while still making arenas useful.

Starovoitov explained that several in-progress BPF features would help with that, too. For example, Kumar Kartikeya Dwivedi has been working on adding a standard error output stream for BPF programs that could be used to report problems like page faults in an arena. The ongoing work to allow the cancellation of BPF programs is another thing that could make handling run-time errors in BPF programs more feasible.

BPF arenas are fairly recent; they were introduced in kernel version 6.9. Based on the discussion around Tsalapatis's feedback, it seems like they have not yet achieved their final form, and could end up being a complete alternative to BPF maps, if the kernel developers can overcome some last few hurdles.

Page editor: Joe Brockmeier


![bpfilter's 3 main components [bpfilter's 3 main components]](https://static.lwn.net/images/2025/bpfilter-3-components.png)